Background

For the uninitiated, an FPGA (field-programmable gate array) is an array of configurable logic that is typically used to perform or accelerate a specific function (or functions) within a computer system. FPGAs are usually paired with a separate traditional microprocessor (or combined with one in a system-on-chip (SoC)), but can operate standalone as well.

They can be configured at startup, or at runtime (using a technique called ‘partial reconfiguration’). In terms of flexibility, they fall somewhere between ASICs (application-specific integrated circuits), which have a high engineering cost and cannot be modified once produced, and software running on a microprocessor, which can be rapidly developed and changed on the fly.

Our previous research publication on FPGAs noted:

“The most common uses of FPGAs are areas within automotive, medical, factory equipment and defence equipment that required rapid concurrent processing.”

This statement remains true. However, since then the use of FPGAs has expanded. The increased availability of low-cost development boards, open-source hardware and tooling, as well as the ability to rent a high specification FPGA in the cloud has considerably lowered the entry costs.

In recent years, we have noticed a trend amongst our clients towards using FPGAs in areas such as the Internet of Things (IoT), accelerated cloud services, machine learning and platform firmware resilience. We’ve also noted that FPGAs help to facilitate the development of significant open-source hardware projects such as RISC-V and OpenTitan.

In the near future, we may see further migration to FPGAs as the microchip supply shortage begins to bite, and some are already advocating FPGAs as a logical solution to chip shortages. However, the shortage of substrates will still pose a problem for FPGA manufacturers.

In this blog post we will consider the following themes:

- New practical uses of FPGAs

- How the technology landscape has changed

- Recent FPGA security vulnerabilities and research

- Threats and threat modelling for FPGAs

Uses of FPGAs

First, let’s consider some of the more recent applications of FPGAs that we have observed.

Platform Firmware Resiliency

Both Intel and Lattice are using FPGAs to underpin their implementations of NIST’s Platform Firmware Resiliency Guidelines (SP800-193).

These guidelines provide a series of technical recommendations that go beyond the traditional definition of ‘root of trust’, to ensure that the lower-level components of a computer system (typically, the hardware and firmware components) can protect themselves against, detect, and recover from attempts to make unauthorized changes to the system.

Intel’s open-source implementation, targeted at their MAX10 device, performs functions including validating board management controller (BMC) and platform controller hub (PCH) firmware, as well as monitoring the boot flows of these critical components to ensure they complete in a timely fashion. Lattice’s implementation isn’t open source, but Lattice claims to have ‘demonstrated state machine-based algorithms that offer Nanosecond response time in detecting security breaches into the SPI memory.’
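To make ‘protect, detect and recover’ a little more concrete, the following is a minimal Python sketch of two of the functions described above: checking a firmware image against a known-good digest (recovering from a backup copy on mismatch), and a boot-flow watchdog. It is not Intel’s or Lattice’s implementation, which run as logic on the device itself. The names `SpiFlash`, `verify_and_recover` and `boot_completed_in_time`, the single pinned SHA-256 digest and the millisecond deadline are illustrative assumptions, and the ‘protect’ element (for example, blocking unauthorized writes to SPI flash) is not modelled.

```python
import hashlib
from dataclasses import dataclass
from typing import Optional


@dataclass
class SpiFlash:
    """Hypothetical model of a SPI flash holding an active and a recovery image."""
    active: bytes
    recovery: bytes


def sha256(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def verify_and_recover(flash: SpiFlash, expected_digest: bytes) -> str:
    """Detect tampering with the active image and recover from the backup copy.

    Assumes one known-good digest for both copies; a real design would verify
    signed manifests rather than a single pinned hash.
    """
    if sha256(flash.active) == expected_digest:
        return "ok"
    if sha256(flash.recovery) != expected_digest:
        # No trustworthy image left: fail safe by keeping the component in reset.
        return "hold-in-reset"
    flash.active = flash.recovery  # recover: overwrite active with the good copy
    return "recovered"


def boot_completed_in_time(done_at_ms: Optional[int], deadline_ms: int) -> bool:
    """Boot-flow watchdog: a component that never signals 'boot done', or
    signals it late, is treated as hung or compromised."""
    return done_at_ms is not None and done_at_ms <= deadline_ms
```

In this sketch, a failed check with no good copy left maps to holding the component in reset, which is the fail-safe choice. A real design would also need to authenticate the expected digest itself, for example via a signed manifest.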

…as a service (aaS)

Offering something ‘as a service’ has become de rigueur in the world of cloud computing, and FPGAs are no exception here.

Some vendors offer access to high-specification FPGAs as a pay-as-you-use service, opening up a product that previously had very high upfront costs to a much wider market. Others use FPGAs to provide powerful acceleration for specific applications, without requiring developers to create and deploy their own bitstreams.

Cloud vendors understandably look to maximize the usage of their hardware, and multi-tenanted environments are commonplace in cloud computing. Although FPGA usage currently remains primarily single-tenancy (at least in terms of the FPGA itself), the FPGA cards often sit in host servers shared with other tenants; AWS, for example, offers F1 instances with 1, 2 or 8 FPGAs. It seems inevitable that, before long, multi-tenancy on the FPGA itself will become common.

This will introduce new potential threats, including remote voltage fault injection and side-channel attacks. Vendors and consumers of this type of technology alike will need to understand the threat model involved in sharing low-level access to hardware, in order to minimize these types of attacks.

Financial Technology

FPGAs have been gathering momentum in financial technology since the early 2000s, and today play an increasing role on both sides of the trade, as traders and brokers race to create the fastest algorithms and trading platforms.

High-frequency trading (HFT) firms (hedge funds or proprietary traders) have migrated their trading algorithms from highly optimized C applications to FPGAs, and in some cases are now investigating migrating these designs to ASICs. On the exchange or market side, brokers use the technology to provide low-latency streams of market data, as well as to run low-latency, deterministic risk-checking platforms.

A recent article suggested that FPGAs are now pretty much the starting point for HFT firms. The shift in technology has been profound: “In 2011, a fast trading system could react in 5 microseconds. By 2019, a trader told me of a system that could react in 42 nanoseconds”. That is roughly a 120-fold reduction in reaction time.

This kind of system previously required a great deal of investment, but Xilinx have now launched an open-source algorithmic trading reference design, with the goal of democratizing this type of technology using a plug-and-play framework.

Computing-Intensive Scientific Research

Many fields of modern scientific research – including in areas such as high-energy physics and computational biology – need to process and filter vast amounts of data. FPGAs are increasingly being used to provide the necessary processing and filtering required to make these data loads manageable.

An example of this is the Large Hadron Collider (LHC) at CERN. In various experiments, FPGAs are being used to help look for significant events in data at a rate in the order of hundreds of terabytes per second, and are used as a coarse filter before passing data off to other systems.

In medicine, the Munich Leukemia Lab (MLL) noted that since switching to FPGA-based genome sequencing, “what used to take us 20 hours of compute time can now be achieved in only 3 hours”.

Artificial Intelligence (AI) and Machine Learning (ML)

Machine learning and artificial intelligence are rapidly evolving fields that are impacting all areas of technology and business, including our own. Two years ago we carried out Project Ava, a long-term research project into the potential of using machine learning for web application testing, and we continue to research adversarial techniques in this space.

GPUs currently lead in this space. They have the advantage of a wealth of development behind them (and are, in some ways, well suited to the requirements of machine learning algorithms), with popular libraries such as TensorFlow supporting them out of the box, and CUDA providing an easy way to rapidly develop GPU-accelerated code.

While the biggest technology companies are moving to their own bespoke silicon to improve their AI capabilities, FPGAs are increasingly discussed as possible alternatives to GPUs in the broader market. GPUs have a few downsides: they increasingly run at high temperatures, and can struggle when moved out of a temperature-controlled datacentre environment into real-world (sometimes referred to as edge) use. Their often substantial power consumption also makes them less suitable for IoT and other ‘smart’ applications.

The main challenge with FPGAs, by contrast, is the development overhead: FPGA tooling is not yet as mature as it is for GPUs. As we shall discuss later, however, high-level synthesis languages and tools are now bringing similar ease and speed of development to FPGAs, and vendors are creating dedicated silicon and bespoke tooling to help facilitate this transition.

The Changing FPGA Technology Landscape

Having considered recent applications of FPGA technology, let’s explore the current technology landscape, including how the vendor ecosystem is changing, the rise of open-source and hobbyist FPGA development, and how tooling is abstracting designers away from the underlying logic on the FPGA.

The Vendor Ecosystem is Expanding

At first glance, the vendor landscape remains dominated by two major players: Intel (who now own Altera) and Xilinx (who are in the process of being acquired by AMD). However, increasingly significant roles are being played by smaller vendors, including (in no particular order) Lattice, Microchip (who bought Atmel in 2016 and Microsemi in 2018), QuickLogic and Achronix.

After acquiring Altera, Intel initially seemed to push towards aligning their FPGA offering much more closely with their Xeon processor range, but there seems to have been little development of this product line since 2018. Instead, Intel and Xilinx both seem to be pushing towards building more powerful SoC devices using Arm cores. At the leading edge, Intel are pushing ahead with their eASIC range, a halfway house between FPGAs and ASIC development, while Xilinx have their Versal ACAP product, which brings together elements of FPGA, CPU and GPU-like vector processing on a single device.

At the low-power, low-cost end of the market, Lattice and Microchip seem to be driving hard into the hobbyist and maker space. Lattice have acknowledged the value of the move toward open-source tooling in helping this.

Open Source and ‘Hobbyist’ Development Boards Becoming Popular

There has been significant development in the open-source space. On the hardware side, crowdfunding platforms such as Patreon and Kickstarter are helping to facilitate a number of open-source hardware and FPGA projects, again lowering the barrier to entry to this type of technology.

On the toolchain side, SymbiFlow looks like the leading contender to bring more people into the ecosystem. Its goal of creating ‘the GCC of FPGAs’, an open-source toolchain compatible with multiple vendors’ devices, is a world away from being tied to specific (and expensive) vendor tooling.

Even the vendors are beginning to release more code to the community: Xilinx currently have over two hundred GitHub repositories, and have open-sourced significant portions of their high-level synthesis toolchain, Vitis HLS, in an attempt to encourage greater adoption (more on this below).

High Level Synthesis (HLS) accelerates rapid prototyping, but potentially introduces security risk

This author believes that we are fast approaching, if not already past, the point where FPGAs are regularly designed using a ‘drag and drop’ flow. This is being facilitated by significant investment in high-level synthesis tooling, which allows developers to create FPGA designs without necessarily having deep familiarity with the underlying register-transfer level (RTL) logic.

Xilinx have made significant investments in their Vitis HLS toolchain, including opening it up for open-source collaboration, and there are a number of other projects in development that allow algorithms developed in other languages (e.g. Java, C++, OpenCL) to be rapidly prototyped and converted for FPGAs. Other projects aim to automatically convert machine learning models built with frameworks such as TensorFlow and PyTorch into RTL.

It is also worth mentioning an alternative approach: the development of new languages, such as Chisel, which adds hardware construction primitives to the Scala programming language. While Chisel technically isn’t a high-level synthesis tool in the sense of the projects mentioned above, the aims are similar: to give designers a better way to produce rich logic designs while abstracting away low-level details.

In some ways, this is analogous to the development of computer programming languages. In the early years, a limited number of low-level languages were used (e.g. Assembly, C, Pascal, Ada; remind anyone of VHDL?). Today, a plethora of high-level languages, libraries and frameworks allow developers of all skillsets and backgrounds to create complex systems.

However, as with modern software development, this could increase the potential for security issues around data processing (for example, mass assignment, where every supplied data field is bound or passed along for developer convenience, including fields that should never be user-controlled), issues around endianness and line feeds, or in some cases vulnerabilities exploiting memory leaks in dynamically allocated memory.

It will be interesting to assess the quality of the output of some of these tools and highlight any potential issues these may create. Vulnerabilities in the output of popular tools are likely to be replicated across a wide range of products and applications.

For example, in a typical state-machine design, an RTL designer will carefully define the underlying registers and memories to store data, provide appropriate assignments to sanitize the data at reset, and on various changes of state. They will also take great care to ensure that the system fails safe. The individual components will be carefully verified via testbenches, and in specific high-risk areas of code, formal verification may be used to mathematically validate the design.

It remains to be seen whether the myriad tools and methods now becoming available will be capable of generating RTL to these same rigorous standards. Hopefully, they will lead to improvements similar to those seen in the migration away from memory-unsafe languages such as C to memory-safe languages such as Python.
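As a rough illustration of the disciplines described above (explicit reset values, sanitising data on state changes, and failing safe), here is a small Python model of a hypothetical state machine. Python is used only to keep the example self-contained and testable; the states, the 8-bit input range and the `FailSafeFsm` class are all invented for illustration, and a real design would express the same structure in RTL.

```python
from enum import Enum


class State(Enum):
    IDLE = 0
    LOAD = 1
    PROCESS = 2
    SAFE = 3  # terminal fail-safe state, left only via reset


class FailSafeFsm:
    """Software model of a hypothetical RTL state machine with explicit reset values."""

    def __init__(self) -> None:
        self.reset()

    def reset(self) -> None:
        # Sanitise every 'register' at reset, as a careful RTL designer would.
        self.state = State.IDLE
        self.data = 0
        self.valid = False

    def _fail(self) -> None:
        # Clear data before parking in the safe state so nothing stale leaks out.
        self.data, self.valid = 0, False
        self.state = State.SAFE

    def step(self, cmd: str, value: int = 0) -> State:
        if self.state is State.IDLE:
            if cmd == "load":
                self.state = State.LOAD
        elif self.state is State.LOAD:
            if 0 <= value <= 0xFF:  # range-check input before latching it
                self.data, self.valid = value, True
                self.state = State.PROCESS
            else:
                self._fail()
        elif self.state is State.PROCESS:
            if cmd == "done":
                self.data, self.valid = 0, False  # sanitise on state change
                self.state = State.IDLE
        elif self.state is State.SAFE:
            pass  # remain parked until reset()
        else:
            # Equivalent of a VHDL 'when others' / Verilog 'default' branch:
            # an illegal encoding (e.g. after a fault) drops to the safe state.
            self._fail()
        return self.state
```

The final `else` branch mirrors a VHDL `when others` or Verilog `default` clause. In real RTL, synthesis tools may optimise such an ‘unreachable’ branch away unless safe state-machine encoding is explicitly requested, which is exactly the kind of detail a code generator may or may not get right.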

A Move Toward Open Source Hardware

Since 2016, there has been a proliferation of open-source intellectual property (IP). Whereas historically companies would jealously guard their IP, there is now much wider recognition that open source has huge value to offer the hardware and embedded community.

The best example of this is the RISC-V project, an open instruction set architecture (ISA) – designed to bring the benefits of open source and open standards to software and hardware architecture. In the security space, RISC-V has previously run a competition encouraging participants to design processors that can thwart malicious software-based attacks. RISC-V has also been used as a test-bed to prove out novel hardware security technologies like CHERI. This kind of openness could have profound implications for people’s trust and faith in the traditional closed processor IP model.

Lattice have been collaborating on RISC-V for a while, Microchip claim to have released the first RISC-V SoC FPGA development kit, while Intel have just (October 2021) released a version of their popular NIOS soft processor based on RISC-V.

Recent Vulnerabilities in FPGAs

Having set the scene, we turn to some well-publicized vulnerabilities related to FPGAs, to get a better idea of the current state of the art in FPGA security.

Starbleed: A Full Break of the Bitstream Encryption of Xilinx 7-Series FPGAs

Before introducing the following vulnerability, it is important to define the term bitfile, or bitstream: the image that is loaded onto the FPGA to configure it, which can be thought of as analogous to a compiled computer program.

FPGA vendors typically support bitstream encryption, allowing OEMs who enable this feature to protect the contents of the bitstream, which is usually considered to be sensitive intellectual property (IP).

The Starbleed (2020) vulnerability, detailed in The Unpatchable Silicon: A Full Break of the Bitstream Encryption of Xilinx 7-Series FPGAs, breaks both the authenticity and confidentiality of bitstream encryption. This allows an attacker to:

- create their own bitfile masquerading as a legitimate bitstream

- reverse engineer the legitimate bitstream, and potentially identify vulnerabilities

Xilinx acknowledged the issue in a design advisory in which they state:

“The complexity of this attack is similar to well known, and proven, Differential Power Analysis (DPA) attacks against these devices and therefore do not weaken their security posture”.

This author’s opinion is that the attack presented in the paper is more straightforward to carry out from a technical perspective, and has a lower material cost overhead than DPA. It should therefore be taken seriously by designers, and the risks mitigated accordingly.

It’s also worth noting that although the barrier to entry for power analysis attacks against the Xilinx FPGAs in this instance probably remains relatively high from a material cost perspective, in the general case this is changing rapidly, with tools like ChipWhisperer lowering the barrier to entry for such attacks. Indeed, an FPGA target is available for training purposes.

Returning to the original Starbleed paper, the authors introduce:

novel low-cost attacks against the Xilinx 7-Series (and Virtex-6) bitstream encryption, resulting in the total loss of authenticity and confidentiality

There are some pre-requisites for the attack, namely:

- Access to a configuration interface on the FPGA (either JTAG or SelectMap)

- Access to the encrypted bitstream

Access to these two items may not be trivial if security has been considered in the design of the product.

The JTAG or SelectMap interfaces may not be connected to an external processor, in which case physical access to the device may be required.

If they are connected to an external processor (or indeed, that processor is responsible for loading the encrypted bitstream onto the FPGA), then there should be multiple protections (including a carefully threat modelled minimised attack surface and application of principles of least privilege for any externally facing services) in place to reduce the risk of attack.

That said, both of these goals are very achievable for a well-resourced and motivated attacker with physical access to one or more devices.

Let’s now unpack the individual attacks themselves:

Attack 1: Breaking confidentiality

The researchers took advantage of several features of the FPGA, in particular the malleability of the Cipher Block Chaining (CBC) mode of AES used by Xilinx, and a register (WBSTAR) in the FPGA that is not reset during a warm boot.

Put (very) simply: given the structure of the bitstream is a known quantity, it is possible to manipulate the bitstream to effectively trick the FPGA into placing one word of correctly decrypted data into the WBSTAR register. If this process is repeated multiple times, the entire contents of the bitstream are revealed. It is well worth reading the full paper to understand how this works in detail.
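The core property being abused is that, in CBC mode, each plaintext block is computed as P_i = D_K(C_i) XOR C_{i-1}. Flipping bits in ciphertext block C_{i-1} therefore flips exactly the same bits in plaintext block P_i (while garbling P_{i-1}), with no knowledge of the key. The Python sketch below demonstrates only this malleability, not the WBSTAR readout itself. To stay within the standard library it uses a toy 4-round Feistel cipher built from HMAC-SHA256 as a stand-in for AES, and the block contents used with it (such as `CMD=NOP ;ARG=000`) are hypothetical rather than real 7-Series bitstream commands.

```python
import hashlib
import hmac

BLOCK = 16  # bytes per block, matching AES's block size


def xor(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def _f(key: bytes, half: bytes, rnd: int) -> bytes:
    # Round function of a toy 4-round Feistel cipher: a stand-in for AES only.
    return hmac.new(key, bytes([rnd]) + half, hashlib.sha256).digest()[:8]


def encrypt_block(key: bytes, block: bytes) -> bytes:
    left, right = block[:8], block[8:]
    for rnd in range(4):
        left, right = right, xor(left, _f(key, right, rnd))
    return left + right


def decrypt_block(key: bytes, block: bytes) -> bytes:
    left, right = block[:8], block[8:]
    for rnd in reversed(range(4)):
        left, right = xor(right, _f(key, left, rnd)), left
    return left + right


def cbc_encrypt(key: bytes, iv: bytes, plaintext: bytes) -> bytes:
    assert len(plaintext) % BLOCK == 0, "no padding in this sketch"
    prev, out = iv, b""
    for i in range(0, len(plaintext), BLOCK):
        prev = encrypt_block(key, xor(plaintext[i:i + BLOCK], prev))
        out += prev
    return out


def cbc_decrypt(key: bytes, iv: bytes, ciphertext: bytes) -> bytes:
    # P_i = D_K(C_i) XOR C_{i-1}; that XOR is what makes CBC malleable.
    prev, out = iv, b""
    for i in range(0, len(ciphertext), BLOCK):
        block = ciphertext[i:i + BLOCK]
        out += xor(decrypt_block(key, block), prev)
        prev = block
    return out


def retarget(ciphertext: bytes, index: int, known: bytes, wanted: bytes) -> bytes:
    """Without the key, make plaintext block `index` (>= 1) decrypt to `wanted`,
    given its current plaintext `known`. Block index-1 becomes garbage."""
    assert index >= 1, "block 0 would require tampering with the IV instead"
    start = (index - 1) * BLOCK
    patched = xor(ciphertext[start:start + BLOCK], xor(known, wanted))
    return ciphertext[:start] + patched + ciphertext[start + BLOCK:]
```

Calling `retarget(ct, 1, b"CMD=NOP ;ARG=000", b"CMD=WRT ;ARG=000")` on a three-block ciphertext makes block 1 decrypt to the chosen text, leaves block 2 intact and garbles block 0. Because the structure of a bitstream is largely predictable, `known` is often available to an attacker, which is why this property matters so much here.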

Even with the unencrypted bitstream recovered, reverse engineering it is not trivial. While mature tools such as IDA and Ghidra make this substantially easier for software, equivalent hardware projects such as HAL and Binary Abstraction Layer are at a much earlier stage of development.

Attack 2: Breaking authenticity

Building on this, the researchers were able to apply padding oracle techniques, such as those described by Rizzo and Duong, to use the FPGA itself to encrypt a bitstream of their choice. They could also reproduce the HMAC tag at the end of the stream, because the HMAC key is stored in the encrypted bitstream, whose confidentiality is already broken!
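The ‘encrypt using a decryption oracle’ idea from Rizzo and Duong (often called CBC-R) can also be sketched in a few lines. Starting from an arbitrary final ciphertext block, the attacker works backwards, choosing each previous block so that XORing it with the oracle’s output yields the desired plaintext. In the sketch below, the oracle is simulated by a function that holds the key; in the attack described above, the FPGA plays that role. As in a toy lab setting, a Feistel cipher built from HMAC-SHA256 stands in for AES, the attacker is assumed to control the IV, and the HMAC-SHA256 tag over the plaintext is a simplified, hypothetical tag format.

```python
import hashlib
import hmac
import os
from typing import Callable, Tuple

BLOCK = 16


def xor(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def _f(key: bytes, half: bytes, rnd: int) -> bytes:
    # Toy Feistel round function: a stand-in for AES only.
    return hmac.new(key, bytes([rnd]) + half, hashlib.sha256).digest()[:8]


def decrypt_block(key: bytes, block: bytes) -> bytes:
    left, right = block[:8], block[8:]
    for rnd in reversed(range(4)):
        left, right = xor(right, _f(key, left, rnd)), left
    return left + right


def cbc_decrypt(key: bytes, iv: bytes, ciphertext: bytes) -> bytes:
    prev, out = iv, b""
    for i in range(0, len(ciphertext), BLOCK):
        block = ciphertext[i:i + BLOCK]
        out += xor(decrypt_block(key, block), prev)
        prev = block
    return out


def forge_cbc(decrypt_oracle: Callable[[bytes], bytes],
              plaintext: bytes) -> Tuple[bytes, bytes]:
    """CBC-R: build (iv, ciphertext) that decrypts to `plaintext` using only a
    single-block decryption oracle, working backwards from a random last block."""
    assert len(plaintext) % BLOCK == 0
    blocks = [os.urandom(BLOCK)]  # the final ciphertext block can be anything
    for i in range(len(plaintext) - BLOCK, -1, -BLOCK):
        # Choose the preceding block as D(current) XOR wanted plaintext block,
        # so that CBC decryption of the current block yields that plaintext.
        blocks.insert(0, xor(decrypt_oracle(blocks[0]), plaintext[i:i + BLOCK]))
    return blocks[0], b"".join(blocks[1:])


def forge_tag(mac_key: bytes, plaintext: bytes) -> bytes:
    # Hypothetical tag format: HMAC-SHA256 over the plaintext. With the MAC key
    # recovered from the decrypted bitstream, the attacker simply recomputes it.
    return hmac.new(mac_key, plaintext, hashlib.sha256).digest()
```

Note that the attacker never touches the key directly: every call goes through `decrypt_oracle`, which is why a device that will decrypt attacker-supplied blocks is so dangerous.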

There are various impacts of these vulnerabilities: as mentioned earlier, reverse engineering may lead to further vulnerabilities being identified in a specific product. Breaking authenticity raises the prospect of things like cloning of hardware devices, or bitstream tampering – for example, introducing malicious logic or a hardware trojan.

Thangrycat (AKA Cisco Secure Boot Hardware Tampering Vulnerability)

The Thangrycat vulnerability was found in 2019 by researchers investigating the secure boot process used in Cisco routers.

It exploits flaws in the design of the Secure Boot process, in which an FPGA forms the Cisco Trust Anchor Module (TAm). The vulnerability leads to the complete breakdown of the Secure Boot process, which could lead to the ability to inject persistent malicious implants into both the FPGA and software.

Fundamentally, this attack stems from the Cisco Secure Boot process using a mutable FPGA as the root-of-trust, rather than a more traditional immutable trust anchor.

The attack is facilitated by a command injection vulnerability in the software that enabled remote root shell access to the device, and a firmware update utility that does not perform any authentication of the supplied FPGA bitstream.

This highlights the importance of a defense-in-depth approach to security, as it is the chain of vulnerabilities that ultimately leads to the persistent remote compromise of the device.
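One missing link in that chain, authenticating a bitstream before it is written, can be sketched as follows. This is a generic illustration, not Cisco’s code or fix. The function name `apply_fpga_update`, the 4-byte version header used for anti-rollback, and the use of an HMAC with a device key are assumptions; HMAC stands in for the asymmetric signature verification a production design would more likely use, because Python’s standard library has no signature primitives.

```python
import hashlib
import hmac
from typing import Callable


class UpdateRejected(Exception):
    pass


def apply_fpga_update(image: bytes, tag: bytes, key: bytes,
                      current_version: int,
                      write_flash: Callable[[bytes], None]) -> int:
    """Authenticate, then check for rollback, and only then write the bitstream."""
    expected = hmac.new(key, image, hashlib.sha256).digest()
    if not hmac.compare_digest(expected, tag):  # constant-time comparison
        raise UpdateRejected("authentication failed")
    # Hypothetical header: first 4 bytes hold a big-endian version number.
    # It is covered by the tag, so it cannot be altered without detection.
    version = int.from_bytes(image[:4], "big")
    if version <= current_version:
        raise UpdateRejected("rollback to an older or equal version")
    write_flash(image)
    return version
```

The ordering is the point: nothing derived from the image is trusted, or written, until the tag has been checked.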

Xilinx ZU+ Encrypt Only Secure Boot Bypass

Another FPGA vulnerability discovered in 2019 affects Xilinx’s Zynq UltraScale+ (ZU+) devices, and exploits issues in the “encrypt only” root-of-trust implementation, which allows secure boot without asymmetric authentication.

Ultimately, a failure to authenticate the boot header allows an attacker to potentially tamper with the first-stage bootloader (FSBL) execution address, causing undefined behaviour or potentially bypassing Secure Boot by executing attacker-controlled code.

Presumably this issue is – or will be – resolved in later silicon, but as yet Xilinx “continues to recommend the use of the Hardware Root of Trust (HWRoT) boot mode when possible“.
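The general lesson, that every header field the boot code acts on must be covered by authentication before it is used, can be sketched in Python as follows. This is not Xilinx’s boot ROM logic: the 12-byte header layout, `load_fsbl` and `make_boot_image` are hypothetical, and HMAC-SHA256 stands in for the asymmetric authentication used by the HWRoT boot mode.

```python
import hashlib
import hmac
import struct

HEADER = struct.Struct(">4sII")  # magic, exec_addr, image_len (hypothetical)
MAGIC = b"BOOT"
TAG_LEN = 32


def make_boot_image(key: bytes, exec_addr: int, image: bytes) -> bytes:
    header = HEADER.pack(MAGIC, exec_addr, len(image))
    tag = hmac.new(key, header + image, hashlib.sha256).digest()
    return header + tag + image


def load_fsbl(blob: bytes, key: bytes, load_base: int, load_limit: int) -> int:
    """Return the FSBL entry point only if header and image are authentic and sane."""
    header = blob[:HEADER.size]
    tag = blob[HEADER.size:HEADER.size + TAG_LEN]
    image = blob[HEADER.size + TAG_LEN:]
    # Authenticate the header together with the image BEFORE reading any field.
    expected = hmac.new(key, header + image, hashlib.sha256).digest()
    if not hmac.compare_digest(expected, tag):
        raise ValueError("boot image failed authentication")
    magic, exec_addr, image_len = HEADER.unpack(header)
    if magic != MAGIC or image_len != len(image):
        raise ValueError("malformed boot header")
    # Defence in depth: the entry point must lie inside the verified image.
    if not (load_base <= exec_addr < load_base + image_len <= load_limit):
        raise ValueError("execution address outside loaded image")
    return exec_addr
```

Swapping in a different execution address while keeping the original tag is rejected, because the tag covers the header as well as the image.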

JackHammer: Efficient Rowhammer on Heterogeneous FPGA-CPU Platforms

JackHammer (2019) isn’t technically a vulnerability in an FPGA, but rather a method of using an FPGA to ‘upgrade’ the well-documented Rowhammer vulnerability. In short, it exploits the FPGA’s performance and direct path to memory to hammer DRAM more quickly.

This raises two interesting thinking points:

- How adversaries might use FPGAs to enhance or improve existing attacks. For example, PCILeech, or creating more powerful bus interposers such as TPMGenie.

- How little visibility the operating system has over the behavior of an FPGA attached to the system

Defending Against Threats to FPGA Security

With many potential attacks against FPGAs (beyond some sort of glaring logic error or design flaw), the temptation is to overlook or dismiss them as ‘too hard’ or ‘too complicated’ to be of interest to an attacker. Alternatively, it may be tempting to think that once the ‘security features’ on the FPGA have been enabled, the job is done.

So, as someone currently using, or looking at using FPGAs – given the vulnerabilities detailed above – what threats should you consider when reviewing your design?

Firstly, as is typical in many threat modelling scenarios, we start with the path of least resistance. Deploying physical attacks against an FPGA potentially involves many obstacles, and a risk of getting caught. The most likely attacks are those that can be carried out remotely and with minimal effort.

Attack the Source

If you are a high-value target, consider your weakest link. For example, if you are running a high-frequency trading platform, it is likely your FPGA designs are being constantly revised and redeveloped. How much care is being taken around the security of that codebase? How well trained are your developers in good security practices? Do you have mitigations protecting against malicious insiders?

Could a phishing attack on a developer, leading to compromise of a company’s IT systems, lead to some sort of discreet trojan being embedded in your design?

Attack the Software

In many cases, the FPGA might sit behind, or be protected by various software security mechanisms. Those need to work and be maintained. Patching, constant evaluation of attack surface, and regular testing are key.

If these are broken, this could open a new avenue of attack: replacing the bitfile. If appropriate care hasn’t been taken in implementing secure boot as in the cases shown above, loading a malicious bitfile into a system could be trivial. No physical access, or reverse engineering required.

Physical Attacks

High value deployments need to be sensitive to physical attacks. For example, an HSM, with an FPGA-based SoC at its core, may be an attractive target for theft, followed by offline physical attack. For this reason, HSMs often deploy physical tamper detection mechanisms to erase key material if attempts are made to compromise the device via physical means.

In consumer-grade hardware, consider an ‘evil maid’ scenario: a device that uses an FPGA to provide a root of trust or some other key security feature could temporarily be in the hands of an untrusted person (a courier, a shop assistant, a warehouse worker). What would the impact be if an attacker replaced the device, or the bitstream, with one that they controlled? Or placed their own interposer between the FPGA and other components in the system?

Do the interfaces between these key components perform any validation or authentication on the data they are receiving, or just implicitly trust it?

In this context, it is worth reflecting on the GrayFish malware developed by the Equation Group. It was able to achieve persistence (surviving even a reformat of the computer’s hard drive) and was very difficult to detect and remove, due to the lack of firmware signing protection. Are there any opportunities for this same type of attack on your system?

In lieu of fixing the design, attacks like this can be somewhat mitigated using measures such as RF shielding and potting (enclosing the components and PCB in some sort of compound like epoxy) making successful dismantling of the product far more challenging and time consuming.
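Returning to the question of whether interfaces between key components authenticate what they receive: one common pattern is to tag every message with a MAC over a monotonically increasing counter plus the payload, so that tampered or replayed messages from an interposer are rejected. The Python sketch below assumes a key already shared between, say, the FPGA and a host processor (provisioning is out of scope) and uses HMAC-SHA256. The `Link` class and frame layout are hypothetical, and a hardware design might use a block-cipher MAC such as AES-CMAC instead. It provides integrity and replay protection only, not confidentiality.

```python
import hashlib
import hmac
import struct
from typing import Optional

TAG_LEN = 32
CTR_LEN = 8


class Link:
    """One end of a hypothetical authenticated link between two components."""

    def __init__(self, key: bytes) -> None:
        self.key = key
        self.tx_counter = 0  # last counter value sent
        self.rx_last = 0     # highest counter value accepted so far

    def _tag(self, body: bytes) -> bytes:
        return hmac.new(self.key, body, hashlib.sha256).digest()

    def send(self, payload: bytes) -> bytes:
        self.tx_counter += 1
        body = struct.pack(">Q", self.tx_counter) + payload
        return body + self._tag(body)

    def receive(self, frame: bytes) -> Optional[bytes]:
        """Return the payload, or None if the frame is forged, tampered or replayed."""
        if len(frame) < CTR_LEN + TAG_LEN:
            return None
        body, tag = frame[:-TAG_LEN], frame[-TAG_LEN:]
        if not hmac.compare_digest(self._tag(body), tag):
            return None
        (counter,) = struct.unpack(">Q", body[:CTR_LEN])
        if counter <= self.rx_last:
            return None  # replayed or stale frame
        self.rx_last = counter
        return body[CTR_LEN:]
```

An interposer that records a valid frame and plays it back later gains nothing, because the receiver only accepts each counter value once.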

Relying on Security Via Obscurity

The Starbleed vulnerability described earlier in this post leads to the disclosure of a plain text bitstream, which, with sufficient time and effort invested, could result in the reverse engineering of the IP and anything previously considered secret within. While this is currently a high effort operation, tooling such as HAL, Binary Abstraction Layer, and CHIPJUICE are making the reverse engineering of FPGA and ASIC netlists more accessible.

It is important to resist the temptation to see the FPGA as a complex opaque box that no-one would go to the effort of compromising, and instead recognise the decreasing level of effort that will be required to reverse engineer a complex bitstream in the not-so-distant future.

Consider your design again, but now assume that bitstream confidentiality can be compromised. What is the worst-case scenario? Could an attacker identify hardcoded magic values that activate development or debug functionality? Or, even worse, hardcoded secrets that could facilitate privilege escalation?

Abuse of Functionality

We’ve seen earlier in this post that there is the possibility of abusing an FPGA to carry out a Rowhammer-style attack, with improved efficiency, and potentially doing so covertly.

If you are running an FPGA accelerator card on a system, what other data does that system have access to? Could the FPGA accelerator be abused or mishandled, to extract secrets from the host via DMA? Is this part of your threat model?

Are you on a cloud-based FPGA platform? At the time of writing (November 2021), you likely don’t have to worry about remote side-channel attacks from other tenants on the same FPGA, but these so-called ‘long wire’ attacks could become a realistic scenario in future.

Implementation Errors

However your design is being generated, it is important to ensure adequate testing is in place to identify errors in the design.

For example, if your FPGA is processing data incoming from various sensors over different protocols (e.g. I2C, SPI), what happens if that data is invalid or goes out of the expected range? Can the design cope? Does it fail safe? These types of errors can be identified both by code review and by fuzz testing.
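FPGA designs would normally be fuzzed in HDL simulation (for example, through a Python testbench framework such as cocotb). As a self-contained sketch, the same idea is applied here to a Python reference model of a hypothetical sensor-frame parser. The 4-byte frame format, sensor IDs, additive checksum and temperature range are all invented for illustration.

```python
import random
from typing import Optional, Tuple

VALID_IDS = {0x10, 0x11}           # hypothetical sensor IDs
MIN_VALUE, MAX_VALUE = -400, 1250  # hypothetical range, tenths of a degree C


def parse_frame(frame: bytes) -> Optional[Tuple[int, int]]:
    """Reference model of a sensor-frame parser: returns (sensor_id, value),
    or None for anything malformed. It must never raise."""
    if len(frame) != 4:
        return None
    sensor_id, hi, lo, checksum = frame
    if (sensor_id + hi + lo) & 0xFF != checksum:
        return None
    if sensor_id not in VALID_IDS:
        return None
    value = int.from_bytes(frame[1:3], "big", signed=True)
    if not MIN_VALUE <= value <= MAX_VALUE:
        return None  # fail safe: reject out-of-range data rather than clamp it
    return sensor_id, value


def fuzz(iterations: int = 10_000, seed: int = 1) -> int:
    """Feed random frames to the parser and check its invariants.
    Any exception or failed assertion is a finding. Returns frames accepted."""
    rng = random.Random(seed)
    accepted = 0
    for _ in range(iterations):
        frame = bytes(rng.randrange(256) for _ in range(rng.randrange(8)))
        result = parse_frame(frame)
        if result is not None:
            sensor_id, value = result
            assert sensor_id in VALID_IDS
            assert MIN_VALUE <= value <= MAX_VALUE
            accepted += 1
    return accepted
```

Purely random frames rarely pass the checksum, so in practice a structure-aware fuzzer that generates valid checksums around out-of-range values would exercise the range checks far more often.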

Supply Chain Attacks

The potential for both software and hardware supply chain attacks appears to be gaining increased visibility. In Summer 2020, the UK government, as part of a drive to future-proof the digital economy, launched a call for views on cyber security in supply chains and managed service providers. In July this year, the European Union Agency for Cybersecurity (ENISA) published a Threat Landscape for Supply Chain Attacks. In the United States, the Biden administration issued an executive order aimed at improving the country’s cyber resilience; Section 4 pertains specifically to “Enhancing Software Supply Chain Security”, and directed NIST to identify guidelines for improving security in this area.

Recent examples in this space include:

- The software supply chain compromise of the SolarWinds Orion network monitoring software, used by US government agencies and many large businesses, in which legitimate updates supplied by SolarWinds were implanted with a trojan that attackers could then use to access victims’ systems.

- Vulnerabilities identified in the Ledger Nano X mean that it is possible for an attacker who has access to the device post-manufacture, but before it reaches the end-user, to potentially install malicious firmware onto the device, which could then infect or manipulate the end-user’s computer systems.

- Recent research by F-Secure on ‘Fake Cisco’ devices, in which genuine Cisco firmware was found running on counterfeit hardware. The clones in this instance did not appear to contain any malicious backdoors, but to the average end-user who is either trying to source equipment in a rush, or perhaps cut costs, these counterfeit devices may pass as genuine.

It is also worth noting that the controversial and still unproven Bloomberg investigation into a possible supply chain attack on SuperMicro customers has (if nothing else) significantly raised the profile of this type of attack.

This recent Microsoft blog post and a presentation by Andrew “bunny” Huang offer much more commentary on this threat and suggest some mitigations.

From the perspective of an OEM designing with FPGAs there are two elements to consider here:

- The physical supply chain: How can you be sure you are getting authentic parts that have not been tampered with? Counterfeiting is on the rise, as seen in the Cisco example above. Furthermore, with the recent COVID-19-related chip shortages, experts are predicting a sharp rise in counterfeit components.

- The ‘soft’ supply chain: The trend towards abstracting away the specifics of the RTL design, with higher-level languages and readily available, easy-to-integrate logic blocks (also known as intellectual property (IP) blocks), continues to accelerate; but as it does, it becomes harder to maintain trust in the third parties providing these blocks. Do you know how they assure their security? Open-source projects are not immune from this. Recently, unsuccessful attempts were made to test the ability of the Linux kernel project to react to so-called ‘hypocrite commits’. These commits appeared to fix a minor issue, but knowingly introduced a more critical one (in this case, a use-after-free vulnerability). The full story is well worth reading.

Obsolescence

Ironically, an FPGA’s flexibility could lead to a lack of attention being paid to obsolescence.

Let’s set a scene:

Startup Company A creates device X (built around an FPGA), and enjoys widespread success, with millions of units shipped worldwide. After several years, a design flaw is discovered in the FPGA design within device X.

For example, suppose the FPGA used in device X was a Cyclone II device. This is still available (as of 28th July 2021) from popular electronic part vendors. Yet the last version of the design tools to support this device dates from July 2013, before Windows 10 was even released! When attempting to download this software, the user is presented with a warning:

This archived version does not include the latest functional and security updates. For a supported version of Quartus, upgrade to the latest version

which, inevitably, requires the purchase and migration of the design to new silicon.

Arguably, this creates a false sense of security: the common (and mistaken) belief that “we can fix that after it’s shipped”. But if you are reliant on the vendor maintaining the software, your ability to iterate on those designs is compromised once that support ends. So we must ask: how long does that statement hold true?

Worse still, to guarantee that the tooling remains compatible, you must somehow maintain an old and unsupported version of Windows. This can leave the rest of your IT infrastructure vulnerable.

As the recent WD My Book Live attacks have shown, manufacturers often find maintenance of old devices either too challenging or economically unappealing, a problem that is possibly more difficult with reconfigurable hardware. For more detailed discussion of the right to repair, and what happens at end-of-life, see Rob Wood’s recent blog post on this subject.

Side channel and fault injection attacks

Traditionally, side-channel and fault injection attacks have required physical access to the device, so keeping a device in a physically secured environment has been a reasonable mitigation. With the proliferation of cloud-based computing, however, that paradigm is changing.

A number of research papers discuss the potential problems of multi-tenant FPGAs, including the ability of an attacker to perform a side-channel attack against other logic on the same device. Another piece of research, DeepStrike, demonstrates a remote fault injection attack on a deep neural network (DNN) accelerator implemented on an FPGA.

It must be noted that these attacks all rely on the attacker having access to, or being co-tenanted on, the same FPGA as another user. At the time of writing, while some providers allow multiple tenants on the same FPGA host system, it is not clear whether multiple tenants are permitted to occupy the same FPGA within a commercial deployment.

A more unusual, and potentially more relevant, piece of research, C3APSULe, demonstrates in practice how an FPGA can be used to establish a covert channel between two FPGAs, between an FPGA and a CPU, and between an FPGA and a GPU, when the devices are co-located on the same power supply unit. This opens further avenues of attack on cloud-hosted environments. Cloud providers mitigate some of these issues by blocking combinatorial loops, which are the basis of ring oscillators (a common building block for on-chip power sensors and ‘power wasters’).
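Conceptually, a combinatorial loop is a cycle in the design’s netlist that does not pass through any clocked element; a ring oscillator (an odd number of inverters chained in a loop) is the classic example. The sketch below is a hypothetical and heavily simplified check over a toy netlist represented as a Python dictionary, using depth-first search to find a cycle while treating edges into registers as breaking the path. It makes no claim about how any cloud provider actually implements its design-rule checks; the cell names and netlist format are invented for illustration.

```python
from typing import Dict, List, Set

# Toy netlist model (hypothetical): each cell maps to the cells its output drives.
Netlist = Dict[str, List[str]]


def find_combinational_loop(netlist: Netlist, registers: Set[str]) -> List[str]:
    """Return one loop that passes through no register, or [] if there is none."""
    WHITE, GREY, BLACK = 0, 1, 2  # unvisited, on the current DFS path, finished
    colour: Dict[str, int] = {}
    path: List[str] = []

    def visit(cell: str) -> List[str]:
        colour[cell] = GREY
        path.append(cell)
        for nxt in netlist.get(cell, []):
            if nxt in registers:
                continue  # an edge into a clocked register ends the combinational path
            state = colour.get(nxt, WHITE)
            if state == GREY:
                # Back edge: the loop is the part of the current path from nxt onwards.
                return path[path.index(nxt):] + [nxt]
            if state == WHITE:
                loop = visit(nxt)
                if loop:
                    return loop
        path.pop()
        colour[cell] = BLACK
        return []

    for cell in netlist:
        if cell not in registers and colour.get(cell, WHITE) == WHITE:
            loop = visit(cell)
            if loop:
                return loop
    return []


# A three-inverter ring oscillator is a purely combinational loop...
ring = {"inv0": ["inv1"], "inv1": ["inv2"], "inv2": ["inv0"]}
print(find_combinational_loop(ring, registers=set()))  # prints ['inv0', 'inv1', 'inv2', 'inv0']

# ...whereas the same loop broken by a flip-flop is an ordinary registered design.
registered = {"inv0": ["ff0"], "ff0": ["inv1"], "inv1": ["inv0"]}
print(find_combinational_loop(registered, registers={"ff0"}))  # prints []
```

Real tools work on vendor netlists with far richer cell models, but the underlying idea, rejecting any cycle that no clocked element interrupts, is the same.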

The traditional approach (where the attacker has access to the physical device) is changing too. As mentioned above, tools like ChipWhisperer are bringing side-channel power analysis and fault injection attacks to new audiences.

My colleagues Jeremy Boone and Sultan Qasim Khan provide a strong primer on fault injection attacks (and defences). Like differential power analysis, fault injection is becoming more accessible and more common.

Real-World Attack Scenarios

With any complex system, it is important to consider the system’s threat model, and FPGA-based systems are no different. By way of example, in this blog post we consider two simplistic scenarios, and only the potential for remote attacks (i.e. without access to the physical hardware).

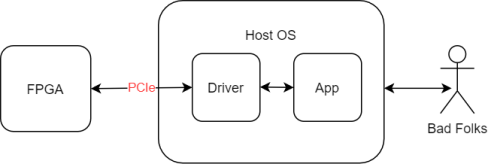

An FPGA used for compute offload

In this scenario, an FPGA is being used to offload computation from the host operating system (OS), for example the algorithmic calculations for a cryptocurrency. The FPGA is situated on a PCIe card and communicates with the host application via a driver installed on the host OS.

In this scenario, the FPGA is relatively shielded from direct attack. As part of a defence-in-depth methodology, we would typically expect:

- The application presents appropriate access controls externally.

- The driver acts as the ‘gatekeeper’ to the FPGA. It will likely expose a series of specific calls to the host operating system (and the applications running on it) via its API, limiting direct access to the FPGA.

- The driver performs extensive input validation on the data it receives from the application layer.

- The FPGA performs sufficient validation on its inputs, and fails safe in the event of unexpected data from the driver or the PCIe interface.

- The host operating system is appropriately hardened and secure. If it becomes compromised, then a logical assumption is that the FPGA will subsequently be compromised too.

- Appropriate validation is performed on the bitfile loaded into the FPGA to ensure it is as expected.

- The FPGA workload/bitfile itself is not attacker-controlled (if it is, additional protections are needed to prevent the attacker from accessing the PCIe bus directly).
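Two of the checks in this list lend themselves to a short illustration: the driver acting as gatekeeper for requests coming from the application, and validation of the bitfile before it is loaded. The sketch below is written in Python for readability (a real driver would more likely be C in the kernel), and the request layout, opcodes and size limit are hypothetical. Note that a plain hash comparison only helps if the expected digest itself comes from a trusted source; a signature check, or the device’s own bitstream authentication where available, is the stronger control.

```python
import hashlib
import hmac
import struct
from typing import Tuple

# Hypothetical request layout accepted from user space (illustration only):
#   opcode (u8) | reserved (u8, must be 0) | payload length (u16, little-endian) | payload
HEADER = struct.Struct("<BBH")
ALLOWED_OPCODES = {0x01, 0x02}  # illustrative: e.g. "submit work" and "read status"
MAX_PAYLOAD = 256               # illustrative limit matching a hypothetical FPGA buffer


class RequestRejected(ValueError):
    """Raised when a request must not be forwarded to the FPGA."""


def validate_request(raw: bytes) -> Tuple[int, bytes]:
    """Gatekeeper check run by the driver before anything reaches the FPGA."""
    if len(raw) < HEADER.size:
        raise RequestRejected("truncated header")
    opcode, reserved, length = HEADER.unpack_from(raw)
    if opcode not in ALLOWED_OPCODES:
        raise RequestRejected(f"opcode {opcode:#04x} is not exposed by the driver")
    if reserved != 0:
        raise RequestRejected("reserved byte must be zero")
    if length > MAX_PAYLOAD:
        raise RequestRejected("payload too large")
    if len(raw) != HEADER.size + length:
        raise RequestRejected("declared length does not match the data supplied")
    return opcode, raw[HEADER.size:]


def bitfile_is_expected(bitfile: bytes, expected_sha256_hex: str) -> bool:
    """Compare a bitfile's SHA-256 digest with a value pinned at release time."""
    actual = hashlib.sha256(bitfile).hexdigest()
    # compare_digest avoids leaking how many leading characters matched
    return hmac.compare_digest(actual, expected_sha256_hex.lower())


print(validate_request(bytes([0x01, 0x00, 0x03, 0x00]) + b"abc"))  # prints (1, b'abc')
```

The design choice here is an allow-list: anything the driver does not explicitly recognise is refused, rather than trying to enumerate what is dangerous.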

If any of the above are not met, the defence-in-depth approach becomes weaker, and the more gaps there are, the lower the barrier to a complete compromise of the device. Depending on the application of the system, the results could range from the embarrassing (being unable to generate cryptocurrency) to the devastating (exfiltration or manipulation of completed hashes).
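Verifying a proof-of-work result is far cheaper than finding one, so in this scenario one inexpensive layer of defence in depth is for the host application to re-check every result the FPGA reports rather than trusting it outright. The sketch below assumes a Bitcoin-style scheme (an 80-byte header ending in a 32-bit little-endian nonce, hashed with double SHA-256 and compared against a target); the function names are hypothetical. This kind of check detects manipulated or bogus results, but does nothing about exfiltration.

```python
import hashlib
import struct


def pow_hash(header_prefix: bytes, nonce: int) -> int:
    """Double SHA-256 of a Bitcoin-style 80-byte header, read as a little-endian integer."""
    if len(header_prefix) != 76:
        raise ValueError("expected the 76 header bytes that precede the nonce")
    header = header_prefix + struct.pack("<I", nonce)
    digest = hashlib.sha256(hashlib.sha256(header).digest()).digest()
    return int.from_bytes(digest, "little")


def accept_fpga_result(header_prefix: bytes, nonce: int, target: int) -> bool:
    """Re-check a nonce reported by the FPGA instead of trusting it."""
    if not 0 <= nonce <= 0xFFFFFFFF:
        return False  # not even a valid 32-bit nonce
    return pow_hash(header_prefix, nonce) <= target


# Example with an artificially easy target (about 1 in 256 nonces qualify).
prefix = bytes(76)
easy_target = 2**248
nonce = next(n for n in range(1 << 16) if pow_hash(prefix, n) <= easy_target)
print(accept_fpga_result(prefix, nonce, easy_target))  # prints True
```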

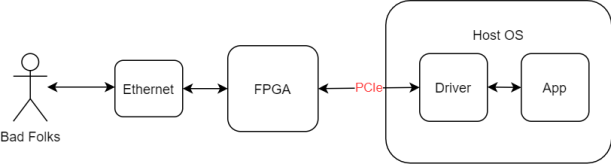

An FPGA used for pre-processing of network traffic

In this scenario, the FPGA is being used to pre-process network traffic before it reaches the host OS.

In this instance, the FPGA is the front line of defence, and we are effectively considering almost the reverse of the above.

- The FPGA will need to validate all traffic it receives from the Ethernet port.

- An in-house network stack will likely need substantial testing (including fuzzing) to validate its performance and security; formal verification may also be well suited to identifying bugs in this scenario.

- If third-party or open-source IP blocks are used, examine the types of testing that have been performed on them and assess their security.

- The driver is likely to implicitly trust the FPGA. Depending on the threat model of the system, this trust may need further validation (for example, if an ‘evil maid’ or similar type of attack were a threat).

- The application will need to verify and validate the data received from the FPGA.

- The host operating system must be appropriately hardened and secure. If it becomes compromised, then a logical assumption is that the FPGA will subsequently be compromised too.
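One common way to test ingress logic like this (an approach we are suggesting here, not one the scenario prescribes) is to write a small software reference model of the FPGA’s validation rules, fuzz it, and then compare the RTL’s behaviour against it in simulation. The Python sketch below models a hypothetical ingress filter; the accepted EtherTypes, size limits and IPv4 sanity checks are illustrative assumptions rather than a recommended policy, and the random fuzzer is deliberately naive (a real campaign would typically use coverage-guided tooling). `Random.randbytes` requires Python 3.9 or later.

```python
import random
import struct

# Illustrative ingress policy (an assumption for this sketch): accept only untagged
# Ethernet II frames carrying IPv4, ARP or IPv6, of standard (non-jumbo) size.
ALLOWED_ETHERTYPES = {0x0800, 0x0806, 0x86DD}
MIN_FRAME = 60    # minimum Ethernet frame length, excluding the 4-byte FCS
MAX_FRAME = 1514  # maximum untagged frame length, excluding the FCS


def accept_frame(frame: bytes) -> bool:
    """Software reference model of a hypothetical FPGA ingress filter."""
    if not MIN_FRAME <= len(frame) <= MAX_FRAME:
        return False
    (ethertype,) = struct.unpack_from("!H", frame, 12)
    if ethertype not in ALLOWED_ETHERTYPES:
        return False  # also rejects values <= 1500, which are 802.3 length fields
    if ethertype == 0x0800:
        version, ihl = frame[14] >> 4, frame[14] & 0x0F
        if version != 4 or ihl < 5:
            return False
        (total_length,) = struct.unpack_from("!H", frame, 16)
        # The IPv4 datagram must cover its own header and fit inside the frame.
        if total_length < ihl * 4 or 14 + total_length > len(frame):
            return False
    return True


def fuzz(iterations: int = 2000, seed: int = 1) -> int:
    """Feed random frames to the model; any exception is a bug. Returns the accept count."""
    rng = random.Random(seed)
    accepted = 0
    for _ in range(iterations):
        frame = bytearray(rng.randbytes(rng.randint(0, MAX_FRAME + 64)))
        if len(frame) >= 14 and rng.random() < 0.5:
            # Bias half the inputs to allowed EtherTypes so the deeper checks run.
            struct.pack_into("!H", frame, 12, rng.choice(sorted(ALLOWED_ETHERTYPES)))
        if accept_frame(bytes(frame)):
            accepted += 1
    return accepted


print(fuzz())
```

Because the model is ordinary software, every rejected corner case it reveals can be turned into a directed test for the hardware design.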

In this example, the FPGA really is on the front line of defence. The high level of testing and input validation that the FPGA needs to perform will underpin the integrity of the rest of the system. Equally, however, the other components also need to be robust in order to achieve defence in depth.
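The list item about the application validating data received from the FPGA matters because software that follows offsets and lengths supplied by hardware is a classic source of memory-safety bugs. The sketch below assumes a hypothetical descriptor format in which the FPGA reports where each packet sits in a shared receive buffer; the field layout, magic value and flag mask are all invented for illustration. Python would not crash on an out-of-range slice, but the bounds check is written the way it would need to be in C.

```python
import struct
from typing import List, NamedTuple

# Hypothetical descriptor the FPGA writes for each packet it passes to the host:
#   magic (u16) | flags (u16) | offset (u32) | length (u32), little-endian
DESCRIPTOR = struct.Struct("<HHII")
MAGIC = 0xF9A1
KNOWN_FLAGS = 0x0003  # illustrative: only the two lowest flag bits are defined


class Packet(NamedTuple):
    flags: int
    payload: bytes


def parse_descriptors(descriptors: bytes, buffer: bytes) -> List[Packet]:
    """Parse FPGA-written descriptors, treating every field as untrusted input."""
    if len(descriptors) % DESCRIPTOR.size:
        raise ValueError("descriptor area is not a whole number of entries")
    packets = []
    for magic, flags, offset, length in DESCRIPTOR.iter_unpack(descriptors):
        if magic != MAGIC:
            raise ValueError("bad descriptor magic")
        if flags & ~KNOWN_FLAGS:
            raise ValueError("undefined flag bits set")
        # Written as 'length > size - offset' so the same check cannot overflow in C.
        if offset > len(buffer) or length > len(buffer) - offset:
            raise ValueError("descriptor points outside the receive buffer")
        packets.append(Packet(flags, buffer[offset:offset + length]))
    return packets


rx_buffer = b"hello world"
print(parse_descriptors(DESCRIPTOR.pack(MAGIC, 0x1, 6, 5), rx_buffer))
# prints [Packet(flags=1, payload=b'world')]
```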

Conclusion

If you’ve made it this far, hopefully this blog post has given you a better picture of the current state of play in the FPGA market, and of some of the security challenges associated with FPGAs. A variety of innovative vulnerabilities have been discussed, each with different degrees of severity and ease of exploitation, and some requiring proximity to the device.

The key to understanding the risk that your product or company faces when using an FPGA (or indeed any system) is to start by defining, and then understanding, a threat model for your product.

Is the FPGA on the front line of your system, handling untrusted data? Or is it at the back end, accelerating processing on a known quantity? Could you be the target of high-effort attacks such as side-channel attacks, or do you feel that the barrier to entry for that type of attack is sufficiently high? And if so, is it likely to remain sufficiently high in the future, as hardware attacks become further commoditised?

With that said, it is also important not to forget the fundamentals. The lowest-hanging fruit are still the easiest for an attacker to target: at the low-cost end of their ranges, both major vendors still sell silicon that doesn’t include bitstream protection at all.