So What?

K3s shares a great deal with standard Kubernetes, but its lightweight implementation comes with some challenges and opportunities in the security sphere.

K3s

K3s is a lightweight implementation of the Kubernetes container orchestrator intended for workloads in "unattended, resource-constrained, remote locations or inside IoT devices" (https://k3s.io). Developed by Rancher Labs (recently acquired by SUSE), it has been adopted as a Cloud Native Computing Foundation sandbox project.

K3s clusters can be launched in seconds because the implementation significantly reduces operational overhead. This reduction is achieved by removing legacy and non-default features, alpha features, in-tree cloud providers, in-tree storage drivers, and Docker support (although any of these can be added back as needed). It also shrinks the footprint of a Kubernetes install to a single Go binary of about 50MB.

The default installation includes:

- containerd runtime

- Flannel networking

- CoreDNS

- CNI support

- host utilities (iptables, socat, etc.)

- Traefik ingress controller

- service load balancer

- network policy controller

- SQLite3 as the default storage backend, instead of etcd3

From a security auditing perspective, these differences in implementation necessitate review approaches that differ from a standard Kubernetes review in four areas in particular:

- configuration manifests

- control plane components

- certificate-based authentication

- cluster state store

Configuration Manifests

Configuration manifests in K3s are typically stored at `/var/lib/rancher/k3s/server/manifests` and applied automatically at runtime; bundled components such as Traefik are installed from Helm charts. This location differs trivially from `/etc/kubernetes/manifests`, where standard Kubernetes keeps the control plane's static pod manifests. K3s can also read installation parameters from a configuration file, stored by default at `/etc/rancher/k3s/config.yaml` (https://rancher.com/docs/k3s/latest/en/cluster-access/).

More importantly, K3s configuration manifests do not need to remain in this directory for the control plane to keep operating. Just as in standard Kubernetes, a manifest file placed in `/var/lib/rancher/k3s/server/manifests` is deployed to the cluster automatically. Deleting a manifest file from this directory, however, does not remove the resources it defines from the cluster (https://rancher.com/docs/k3s/latest/en/advanced/). As a result, configuration manifests may be removed after installation, and it may then be impossible to read the configuration parameters of control plane components from them. This complicates security audits and forensic investigations, because the manifests used to configure the cluster may no longer be available for review.
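Because manifests can disappear after installation, it can help to record what is present at the very start of an audit. The Python sketch below does this under a few assumptions: it uses the default paths named above (a custom `--data-dir` would move the manifest directory), and it treats files ending in `.yaml`, `.yml`, or `.json` as manifests. The function name `inventory` is hypothetical and not part of any K3s tooling. Each file is hashed with SHA-256 so that later changes or deletions can be detected by comparing snapshots.

```python
import hashlib
import json
from pathlib import Path

# Default K3s locations from the text; a custom --data-dir moves the manifest directory.
MANIFEST_DIR = Path("/var/lib/rancher/k3s/server/manifests")
CONFIG_FILE = Path("/etc/rancher/k3s/config.yaml")
# Assumption: manifests use one of these extensions.
MANIFEST_SUFFIXES = {".yaml", ".yml", ".json"}


def sha256_of(path: Path) -> str:
    """Return the hex SHA-256 digest of a file's contents."""
    return hashlib.sha256(path.read_bytes()).hexdigest()


def inventory(manifest_dir: Path = MANIFEST_DIR, config_file: Path = CONFIG_FILE) -> dict:
    """Snapshot the configuration files present right now, mapped to content hashes.

    A missing config file is recorded as None so that its absence is documented too.
    """
    snapshot = {
        "config": sha256_of(config_file) if config_file.is_file() else None,
        "manifests": {},
    }
    if manifest_dir.is_dir():
        for path in sorted(manifest_dir.iterdir()):
            if path.is_file() and path.suffix in MANIFEST_SUFFIXES:
                snapshot["manifests"][path.name] = sha256_of(path)
    return snapshot


if __name__ == "__main__":
    # Typically needs root to read the K3s directories.
    print(json.dumps(inventory(), indent=2))
```

Saving the JSON output alongside other audit evidence gives a baseline to compare against later; a `null` config entry simply means no configuration file was found at the default path.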

Unified Control Plane Components

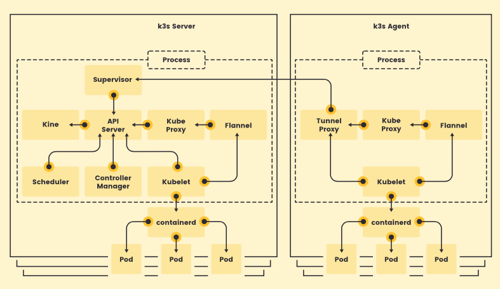

Another key way in which K3s differs from standard Kubernetes is that the API Server, Scheduler, Controller Manager, and state database processes are bundled into a single "Server" process. On worker nodes, the kubelet, Flannel, and kube-proxy processes are bundled into a single "Agent" process. The Agent process communicates directly with its node's pods and connects to the Server process through a tunnel proxy, which maintains a WebSocket tunnel for communication between the API Server and the Agent's kubelet and containerd components (https://k3s.io).

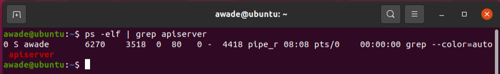

As a result, we can't simply run `ps -elf | grep ...` to see the runtime settings of individual control plane processes.

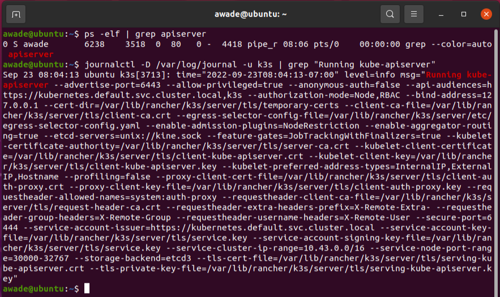
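What the process table does still expose is the `k3s` process itself and any flags passed to it directly. As a minimal sketch, assuming a Linux `/proc` filesystem and assuming the relevant executable name contains `k3s`, the hypothetical helpers below read each `/proc/<pid>/cmdline` file, which stores a process's argv as NUL-separated strings.

```python
from __future__ import annotations

from pathlib import Path


def parse_cmdline(raw: bytes) -> list[str]:
    """Split a /proc/<pid>/cmdline blob, where argv entries are NUL-separated."""
    return [arg.decode(errors="replace") for arg in raw.split(b"\0") if arg]


def k3s_processes(proc: Path = Path("/proc")) -> dict[int, list[str]]:
    """Map PID -> argv for processes whose executable name contains 'k3s'."""
    found: dict[int, list[str]] = {}
    for entry in proc.iterdir():
        if not entry.name.isdigit():
            continue  # skip non-process entries such as /proc/self or /proc/meminfo
        try:
            argv = parse_cmdline((entry / "cmdline").read_bytes())
        except OSError:
            continue  # the process exited or we lack permission to read it
        if argv and "k3s" in Path(argv[0]).name:
            found[int(entry.name)] = argv
    return found
```

On a server node, `k3s_processes()` would be expected to return something like `k3s server` plus its top-level flags, but not the settings of the embedded API Server, Scheduler, or Controller Manager, which is why the journal is needed.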

If the configuration manifests are also unavailable, configuration parameters must be gathered from the systemd journal logs instead. To identify the journal log location, use `find / -name journal`. It is usually `/var/log/journal`, but is sometimes found at `/run/log/journal`. With that information, we can use journalctl to gather information equivalent to what `ps -elf | grep ...` provides on a standard Kubernetes node.

For example:

- `journalctl -D /var/log/journal -u k3s | grep 'Running kube-apiserver'`

- `journalctl -D /var/log/journal -u k3s | grep 'Running kube-controller-manager'`

- `journalctl -D /var/log/journal -u k3s | grep 'Running kube-scheduler'`
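Each of these commands returns one long line per component start. To compare settings against a benchmark, it is easier to split those lines into flag/value pairs. The Python sketch below assumes the log message has the form `Running <component> --flag=value ...` (the same shape the grep patterns above rely on), treats a bare `--flag` as `true`, and keeps the last matching line per component, which with journalctl's default oldest-first ordering corresponds to the most recent start. The names `read_k3s_journal`, `parse_flags`, and `component_flags` are hypothetical; the journalctl options used (`-D`, `-u`, `--no-pager`, `-o cat`) are standard.

```python
from __future__ import annotations

import re
import subprocess

# Assumed message shape: "Running <component> --flag=value --flag=value ...",
# optionally followed by the closing quote of a logfmt msg="..." field.
RUNNING_RE = re.compile(r'Running ([a-z][a-z-]*) (--.*?)"?\s*$')


def read_k3s_journal(journal_dir: str = "/var/log/journal") -> list[str]:
    """Return the k3s unit's journal messages from the given journal directory."""
    result = subprocess.run(
        ["journalctl", "-D", journal_dir, "-u", "k3s", "--no-pager", "-o", "cat"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout.splitlines()


def parse_flags(flag_string: str) -> dict[str, str]:
    """Turn '--a=1 --b' into {'a': '1', 'b': 'true'}."""
    flags: dict[str, str] = {}
    for token in flag_string.split():
        if token.startswith("--"):
            key, sep, value = token[2:].partition("=")
            flags[key] = value if sep else "true"
    return flags


def component_flags(lines: list[str]) -> dict[str, dict[str, str]]:
    """Map each component (kube-apiserver, kube-scheduler, ...) to its logged flags.

    Later lines overwrite earlier ones, so with journalctl's default oldest-first
    order the result reflects the most recent start of each component.
    """
    components: dict[str, dict[str, str]] = {}
    for line in lines:
        match = RUNNING_RE.search(line)
        if match:
            components[match.group(1)] = parse_flags(match.group(2))
    return components
```

For example, `component_flags(read_k3s_journal())["kube-apiserver"].get("anonymous-auth")` would return the logged `--anonymous-auth` value, or `None` if that flag was not logged. Reading the journal usually requires root.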

Certificate-Based Authentication

Certificate-based authentication is not tragically broken in K3s: it does not carry the standard Kubernetes burden of irrevocable, 10-year certificate-based credentials. K3s certificates have only a 12-month lifetime, and when the K3s server restarts, any certificate that has expired or is within 90 days of expiring is rotated automatically (https://rancher.com/docs/k3s/latest/en/advanced/). It is also possible to force early rotation (https://www.ibm.com/support/pages/node/6444205), reducing the threat that lost or stolen authentication certificates pose to the cluster.
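To check where a given certificate stands relative to the 90-day rotation window, its expiry date can be read with `openssl x509 -noout -enddate -in <file>`. The sketch below assumes the certificates live under `/var/lib/rancher/k3s/server/tls/` (the default data directory) and models the restart-time rule described above; the helper names are hypothetical. It relies on Python's `ssl.cert_time_to_seconds`, which parses the `notAfter` date format that OpenSSL prints.

```python
import ssl
import time
from typing import Optional

# Per the rotation rule described above: on restart, K3s rotates certificates
# that are expired or within this many days of expiring.
ROTATION_WINDOW_DAYS = 90
SECONDS_PER_DAY = 86400


def days_remaining(not_after: str, now: Optional[float] = None) -> float:
    """Days until expiry; negative once the certificate has expired.

    Accepts either the bare date ("Jan  5 09:34:43 2018 GMT") or the full line
    printed by `openssl x509 -noout -enddate` ("notAfter=Jan  5 09:34:43 2018 GMT").
    """
    if not_after.startswith("notAfter="):
        not_after = not_after.split("=", 1)[1]
    expiry = ssl.cert_time_to_seconds(not_after.strip())
    current = time.time() if now is None else now
    return (expiry - current) / SECONDS_PER_DAY


def due_for_rotation(not_after: str, now: Optional[float] = None) -> bool:
    """True if a K3s server restart would be expected to rotate this certificate."""
    return days_remaining(not_after, now) <= ROTATION_WINDOW_DAYS
```

A certificate for which `due_for_rotation` returns `True` would be expected to rotate the next time the K3s server restarts; one that returns `False` remains valid, and usable if stolen, until rotation is forced or it enters the window.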

Cluster State Store

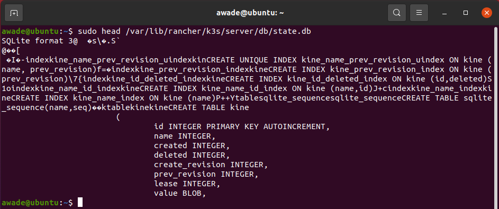

Standard Kubernetes uses the etcd key-value store to manage and maintain cluster state data; K3s can also use etcd, but uses an SQLite database by default. The SQLite database is located in `/var/lib/rancher/k3s/server/db` and has no client equivalent to etcdctl, so accessing it requires an additional tool (e.g., DB Browser for SQLite, https://sqlitebrowser.org).
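Python's standard `sqlite3` module is one such tool. The sketch below lists stored keys, roughly what `etcdctl get <prefix> --prefix --keys-only` does against etcd. It assumes, and this should be verified on the cluster under review, that the database file is `state.db` in the directory above and that K3s (through its kine datastore shim) keeps keys in the `name` column of a table called `kine`. Because kine stores revision history, the result may include keys that have since been deleted. The database is opened read-only so the audit cannot alter cluster state; `open_read_only` and `list_keys` are hypothetical helper names.

```python
from __future__ import annotations

import sqlite3

# Assumptions to verify on the cluster under review: the database file name and
# the kine schema (a `kine` table whose `name` column holds etcd-style keys).
DEFAULT_DB = "/var/lib/rancher/k3s/server/db/state.db"


def open_read_only(path: str = DEFAULT_DB) -> sqlite3.Connection:
    """Open the database read-only so the audit cannot modify cluster state."""
    return sqlite3.connect(f"file:{path}?mode=ro", uri=True)


def list_keys(conn: sqlite3.Connection, prefix: str = "/registry/") -> list[str]:
    """Return distinct keys starting with `prefix`.

    Because kine keeps revision history, keys deleted since may also appear.
    """
    rows = conn.execute(
        # substr() compares the literal prefix, avoiding LIKE's '_' and '%' wildcards
        "SELECT DISTINCT name FROM kine WHERE substr(name, 1, ?) = ? ORDER BY name",
        (len(prefix), prefix),
    )
    return [name for (name,) in rows]
```

For example, `list_keys(open_read_only(), "/registry/secrets/")` would show which Secrets the datastore holds, which is a useful starting point when checking whether sensitive values are stored in the clear.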

Summary

K3s offers some distinct advantages over standard Kubernetes in terms of size and authentication options, but requires a different approach to some security auditing practices in order to gather the necessary information from the cluster.

The folks at Rancher have also been kind enough to provide some K3s-specific CIS Benchmark tests:

- <https://rancher.com/docs/k3s/latest/en/security/self_assessment/>