I recently returned from Eindhoven, where I had the pleasure of giving a talk on some recent progress in isogeny-based cryptography at the SIAM Conference on Applied Algebraic Geometry (SIAM AG23). Firstly, I want to thank Tanja Lange, Krijn Reijnders and Monika Trimoska, who organised the mini-symposium on the application of isogenies in cryptography, as well as the other speakers and attendees who made the week such a vibrant space for learning and collaborating.

As an overview, the SIAM Conference on Applied Algebraic Geometry is a biennial event which aims to bring together researchers from academia and industry to discuss new progress in their respective fields, all of which fall under the beautiful world of algebraic geometry. Considering the breadth of algebraic geometry, it is perhaps not so surprising that the conference is filled with an eclectic mix of work, with mini-symposia dedicated to biology, coding theory, cryptography, data science, digital imaging, machine learning and robotics (and much more!).

In the world of cryptography, algebraic geometry appears most prominently in public-key cryptography, both constructively and in cryptanalysis. Currently in cryptography, the most widely applied and studied objects from algebraic geometry are elliptic curves. The simple, but generic group structure of an elliptic curve together with efficient arithmetic from particular curve models has made it the gold standard for Diffie-Hellman key exchanges and the protocols built on top of this. More recently, progress in the implementation of bilinear pairings on elliptic curves has given a new research direction for building protocols. For an overview of pairing-based cryptography, I have a blog post discussing how we estimate the security of these schemes, and my colleague Eric Schorn has a series of posts looking at the implementation of pairing-based cryptography in Haskell and Rust.

Despite the success of elliptic curve cryptography, Shor’s quantum polynomial time algorithm to solve the discrete logarithm problem in abelian groups means a working, “large-enough”, quantum computer threatens to break most of the protocols which underpin modern cryptography. This devastating attack has led to the search for efficient, quantum-safe cryptography to replace the algorithms currently in use. Mathematicians and cryptographers have been searching for new cryptographically hard problems and building protocols from these, and algebraic geometry has again been a gold mine for new ideas. Our group effort since Shor’s paper in 1995 has led to exciting progress in areas such as multivariate, code-based, and my personal favourite, isogeny-based cryptography.

The study of post-quantum cryptography was the focus of many of the cryptographic talks over the course of the week, although the context and presentation of these problems were still very diverse. Zooming out, SIAM collectively organised 128+ sessions and 10 plenary talks; a full list of the program is available online. With a diverse group of people and a wide range of topics, the idea was not to attend everything (this is physically impossible for those who cannot split themselves into ~fourteen sentient pieces), but rather to pick our own adventure from the program.

For the cryptographers who visited Eindhoven, there were three main symposia, which ran through the week without collisions:

- Applications of Algebraic Geometry to Post-Quantum Cryptology.

- Elliptic Curves and Pairings in Cryptography.

- Applications of Isogenies in Cryptography.

Additional cryptography talks were in the single session “Advances in Code-Based Signatures”, which ran concurrently with the pairing talks on the Wednesday.

For those interested in a short summary of many of the talks at SIAM, Luca De Feo wrote a blog post about his experience of the conference which is available on Elliptic News. As a complement to what has already been written, the aim of this blog post is to give a general impression of what people are thinking about and the research which is currently ongoing.

In particular, the goal of this post is to summarize and give context to two of the main research focuses in isogeny-based cryptography which were talked about during the week. On one side, there is a deluge of new protocols being put forward which study isogenies between abelian varieties, generalising away from dimension one isogenies between elliptic curves. On the other side, the isogeny-based digital signature algorithm, SQIsign, has been submitted to NIST’s recent call for new quantum-safe signatures. Many talks through the week focused on algorithmic and parameter improvements to aid in the submission process.

What is an isogeny?

For those less familiar with isogenies, a very rough way to start thinking about isogeny-based cryptography can be understood as long as you have a good idea of how it feels to get lost, even when you know where you’re supposed to be going. Essentially, you can take a twisting and turning walk by using an “isogeny” to step from one elliptic curve to another. If I tell you where I started and where I end up, it seems very difficult for someone else to determine exactly the path I took to get there. In this way, our cryptographic secret is our path and our public information is the final curve at the end of this long walk.

Not only does this problem seem difficult, it also seems equally difficult for both classical and quantum computers, which makes it an ideal candidate for the building block of new protocols which aim to be quantum-safe. For some more context on the search for protocols in a “post-quantum” world, Thomas Pornin wrote an overview at the closing of round three of the NIST post-quantum project which ended about a year ago at the time of writing.

A little more specifically, for those interested, an isogeny is a special map which respects both the geometric idea of elliptic curves (it maps some projective curve to another), but also the algebraic group structure which cryptographers hold so dear (mapping the sum of points is the same as the sum of the individually mapped points). Concretely, an isogeny is some (non-constant) rational map which maps the identity on one curve to the identity of another.
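
To make the definition concrete, here is a minimal Python sketch of a degree-two isogeny on a toy curve. The curve y^2 = x^3 + x over the field with 103 elements, the kernel point (0, 0) and all of the function names are choices made purely for illustration, and the map itself comes from Vélu’s formulas, which are not discussed in this post. The final loop checks the two properties described above: points land on the new curve, and mapping a sum agrees with summing the mapped points.

```python
# Toy example only: the prime is tiny, real schemes use primes of hundreds of bits.
p = 103          # p = 3 mod 4, so y^2 = x^3 + x is supersingular over F_p
a, b = 1, 0      # E : y^2 = x^3 + a*x + b
x0 = 0           # (x0, 0) is a point of order two which generates the kernel


def inv(x):
    """Inverse of x modulo p (requires Python 3.8+)."""
    return pow(x % p, -1, p)


def add(P, Q, a):
    """Affine addition on y^2 = x^3 + a*x + b; None is the identity."""
    if P is None:
        return Q
    if Q is None:
        return P
    (x1, y1), (x2, y2) = P, Q
    if x1 == x2 and (y1 + y2) % p == 0:
        return None                      # P + (-P) = identity
    if P == Q:
        lam = (3 * x1 * x1 + a) * inv(2 * y1) % p
    else:
        lam = (y2 - y1) * inv(x2 - x1) % p
    x3 = (lam * lam - x1 - x2) % p
    return (x3, (lam * (x1 - x3) - y1) % p)


def two_isogeny(a, b, x0):
    """Velu's formulas for the isogeny with kernel {identity, (x0, 0)}."""
    t = (3 * x0 * x0 + a) % p
    a2, b2 = (a - 5 * t) % p, (b - 7 * x0 * t) % p   # codomain coefficients

    def phi(P):
        if P is None or P[0] == x0:
            return None                  # the kernel maps to the identity
        x, y = P
        d = inv(x - x0)
        return ((x + t * d) % p, y * (1 - t * d * d) % p)

    return a2, b2, phi


def points(a, b):
    """All points of y^2 = x^3 + a*x + b over F_p, by brute force."""
    affine = [(x, y) for x in range(p) for y in range(p)
              if (y * y - (x**3 + a * x + b)) % p == 0]
    return [None] + affine


a2, b2, phi = two_isogeny(a, b, x0)
E_points = points(a, b)
E2_points = set(points(a2, b2))

for P in E_points:
    assert phi(P) in E2_points                       # phi maps E to E'
    for Q in E_points:
        # phi respects the group law: phi(P + Q) = phi(P) + phi(Q)
        assert phi(add(P, Q, a)) == add(phi(P), phi(Q), a2)
```

The brute-force enumeration is only feasible because the field is so small; the point of the sketch is that the map is given by simple rational functions and still respects the group law.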

Isogenies in Higher Dimensions

Over the past year, isogeny-based cryptography has undergone a revolution after a series of papers appeared which broke the key exchange protocol SIDH. The practical breakage of SIDH was particularly spectacular as it essentially removed the key-exchange mechanism, SIKE, from the NIST post-quantum project, only weeks after it had been chosen by NIST to continue to the fourth round as a prospective alternative candidate to Kyber.

For more information on the break of SIDH, I have a post on the SageMath implementation of the first attack, as well as a summary of the Eurocrypt 2023 conference, where the three attack papers were presented in the best-paper award plenary talks. Thomas Decru, one of the authors of the first attack paper, wrote a fantastic blog post which is a great overview of how the attack works.

The key to all of the attacks was that given some special data, information about the secret walk between elliptic curves could be recovered by computing an isogeny in “higher dimension”. In fact, the short description about isogenies was a little too restrictive. For the past ten years, cryptographers have been looking at how to compute isogenies between supersingular elliptic curves. However, over the fence in maths world, a generalisation of this idea is to look at isogenies between principally polarised superspecial abelian varieties. When we talk about these superspecial abelian varieties, a natural way to categorise them is by their “genus”, or “dimension”.

Luckily, for now, we don’t need to worry about arbitrary dimension, as for the current work we really only need dimension two for the attack on SIDH, and for some new proposed schemes, dimensions four and eight, which I won’t discuss much further.

If you want to imagine these higher dimensional varieties, one way is to think about three dimensional surfaces which have some “holes” or “handles”. A dimension one variety is an elliptic curve, which you can imagine as a donut. In dimension two we have two options, the generic object is some surface with two handles (a donut with two holes? Where’s all my donut gone?), but there are also “products of elliptic curves”, which can be seen as two dimensional surfaces which can in some sense be factored into two dimension-one surfaces (or abstractly, as a pair of donuts!).

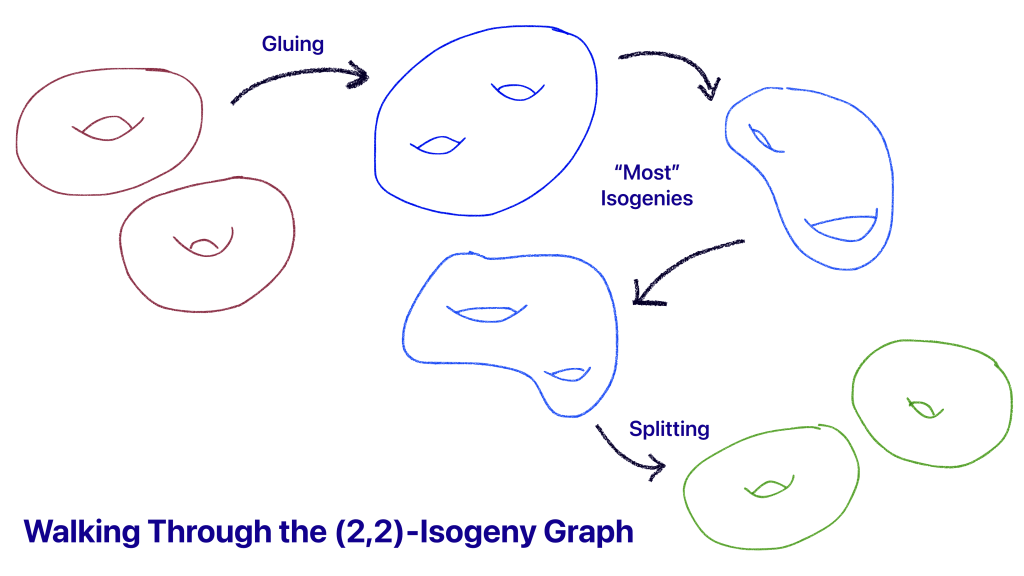

The core computation of the attack is a two-dimensional isogeny between elliptic products. An isogeny between elliptic products is simply a walk which takes you from one of these pairs of donuts, through many many steps of the generic surface and ends on another special surface which factors into donuts again. A natural question to ask is, how special are these products? When we work in a finite field with characteristic p, we have about p^3 surfaces available and only p^2 of these are elliptic products. In cryptographic contexts, where the characteristic is usually very very large, it’s essentially impossible to accidentally land on one of these products.

With this as background, we can now ask a few natural questions:

- When can we compute isogenies between elliptic products?

- Why do we want to compute isogenies between elliptic products?

- How can we ask computers to compute isogenies between elliptic products?

Exactly when we find these very special isogenies between elliptic products was characterised by Ernst Kani in 1997, and it was this result which illuminated the method to attack SIDH. Kani’s criterion describes how, when a set of one dimensional isogenies has particular properties, and when you additionally know certain information about the points on these curves, your specially chosen two dimensional isogeny will walk between elliptic products.

This is what Thomas Decru talked about in his presentation, which gave a wonderful overview of why these criteria were enough to successfully break SIDH. The idea is that although some of this information is secret, you can guess small parts of the secret and when you are correct, your two dimensional isogeny splits at the end. Guessing each part of the secret in turn then very quickly recovers the entire secret.

Following the description of the death of SIKE, Tako Boris Fouotsa talked about possible ways to modify the SIDH protocol to revive it. The general idea is to hide parts of the information Kani’s criterion requires in such a way that an attacker can no longer guess it piece by piece. One method is to take the information you need from the points on curves and mask it by multiplying them by some secret scalar.

Masking these points, which are the torsion data for the curves, was also the topic of two other talks. Guido Lido gave an energetic and enjoyable double-talk on the “level structure” of elliptic curves, which was complemented very nicely by a talk by Luca De Feo the following day which gave another perspective on how modular curves can help us complete the zoology of these torsion structures. Along with this categorisation, Luca gave a preview of a novel attack on one possible variant of SIDH which hides half of the torsion data. If SIDH is to be dragged back into protocols with the strategies discussed by Boris, it’s vital to really understand mathematically what this masking is, highlighting the importance of the work by Guido, Luca and their collaborators.

Although breaking a well-known and long-standing cryptographic protocol is more than enough motivation to study these isogenies, the continued research on computing higher dimensional isogenies will be motivated by the introduction of these maps into protocols themselves. This brings us to the why, which was addressed by Benjamin Wesolowski, who discussed SQIsignHD, and Luciano Maino, who discussed FESTA. As SQIsign and related talks will soon have a section of their own, we’ll jump straight to FESTA.

The essence of FESTA is to find a way to configure some one dimensional isogenies during keygen and encryption such that during decryption, a holder of the secret key can perform the SIDH attack, while no one else can. As the SIDH attack recovers secrets about the one dimensional isogenies, encryption is then a case of using some message to describe the isogeny path, and as decryption recovers this path, it also recovers the secret message. The core of how FESTA works is tied up in categories of masking, as Guido and Luca described. Luciano used his presentation to give an overview of how everything comes together, and how by using commutative masking matrices, encryption masks away certain critical data, and then during decryption the masking can be removed thanks to the commutativity.
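
The role of commutativity can be seen in a toy linear-algebra model. The sketch below is not FESTA itself: it assumes a pair of torsion points has already been written in coordinates with respect to some basis, so that the pair becomes a 2×2 matrix modulo a hypothetical torsion order N, and masking becomes multiplication by a secret matrix. The isogenies applied between the masking steps are left out, the split into a long-term mask A and an encryption-time mask B is a simplification made for this sketch, and the helper names and values are made up. Diagonal matrices are used as one example of a commutative family.

```python
N = 2**8   # hypothetical torsion order, tiny compared with real parameters


def matmul(X, Y):
    """Product of two 2x2 matrices with entries modulo N."""
    return [[sum(X[i][k] * Y[k][j] for k in range(2)) % N for j in range(2)]
            for i in range(2)]


def diag(u, v):
    return [[u, 0], [0, v]]


def diag_inverse(D):
    """Inverse of a diagonal matrix whose entries are units modulo N."""
    return diag(pow(D[0][0], -1, N), pow(D[1][1], -1, N))


# Coordinates of a pair of torsion points (one point per row); made-up values.
points = [[17, 42], [101, 7]]

A = diag(3, 5)        # long-term secret mask (assumed applied at key generation)
B = diag(11, 201)     # ephemeral mask (assumed applied at encryption)

masked = matmul(B, matmul(A, points))        # both masks applied
unmasked = matmul(diag_inverse(A), masked)   # the key holder strips A

# A and B commute, so only the mask B is left behind.
assert unmasked == matmul(B, points)

# With a mask C that does not commute with A, the same trick fails.
C = [[1, 1], [0, 1]]
broken = matmul(diag_inverse(A), matmul(C, matmul(A, points)))
assert broken != matmul(C, points)
```

In this model the holder of A ends up with points which carry only the mask B, without ever needing to know B in advance.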

The idea of using SIDH attacks to build a quantum-safe public key encryption protocol is not new. In SETA, a very similar protocol was described. However, due to the inefficiencies of the SIDH attacks at the time, the protocol itself did not have practical running times. The key to what makes FESTA efficient is precisely the new polynomial time algorithms for the attack.

To close out the third session of the isogeny mini-symposium, I then did my best to try and talk about the how. Given the motivation that these isogenies can be used constructively to build quantum-safe protocols, can we find ways to strip back the complications in existing implementations and get something efficient and simple enough that it appears suitable for cryptographic protocols? The talk was split between the three categories of isogenies we need:

- The first step is understanding how to compute the “gluing isogeny”, between a product of elliptic curves and the resulting dimension-two surface.

- The last step is understanding how to efficiently compute the “splitting isogeny” from a two-dimensional surface to a pair of elliptic curves.

- All other steps are then isogenies between these generic two dimensional surfaces. These are described by the Richelot correspondence, which dates back to the 19th century and is surprisingly simple considering the work it does.

I described some new results which allow for particularly efficient gluing isogenies, and showed that, working algebraically, a closed form of the splitting isogeny can be recovered, saving about 90% of the work of the usual methods. For the middle steps, there’s still much work to be done and I hope as a community we can continue optimising these isogenies.

In summary, the SIDH attacks have introduced a whole new toolbox of isogenies and it’s exciting to see these being used constructively and optimised for real-world usage. The cryptanalysis of isogeny-based protocols is of course having its own revolution, and understanding how higher dimensional structures can make or break new schemes is vibrant and exciting work.

An Isogeny Walk back to NIST

Back in dimension one, an isogeny-based digital signature algorithm has been submitted to NIST’s recent call for protocols. Of the 40 candidates which appeared when round one came online, only one is isogeny-based. SQIsign is an extremely compact, but relatively new and slow protocol which was introduced in 2020 and was followed up with a paper with various performance enhancements in 2022.

Underlying SQIsign is a fairly simple idea. The signer computes a secret isogeny path between two elliptic curves. The starting curve, which is public and known to everyone, has special properties. The signer publishes their ending curve as a public key, but as only they know the isogeny between the curves, only the signer knows special properties of the ending curve. A signature is computed from a high-soundness sigma protocol, which essentially boils down to asking the signer to compute something which they could only know if they know this secret isogeny.

Concretely, SQIsign is built on the knowledge of the endomorphism ring of an elliptic curve, which is the set of isogenies from a curve to itself. The starting curve is chosen so everyone knows its endomorphism ring. The trick in SQIsign is that although generally it seems hard to compute the endomorphism ring of a random supersingular curve, if you know an isogeny between two curves and the endomorphism ring of one of them, you can efficiently compute the endomorphism ring of the other. This means that the secret isogeny allows the signer to “transport” the endomorphism ring from the starting curve to their public curve, and so the endomorphism ring of this end curve is secret to everyone except the signer.

Algorithms become efficient thanks to the Deuring correspondence, which takes information from an elliptic curve and represents it using a quaternion algebra. In quaternion world, certain problems become easy which are hard on elliptic curves, and once the right information is recovered, the Deuring correspondence maps this all back to elliptic curve world so the protocol can continue. Ultimately all of the above boils down to “things are computationally easy if you know the endomorphism ring”. Because of this, a signer can compute things from the public curve which nobody else can feasibly do.

There are a lot of buzzwords in the above, and unpicking exactly how SQIsign works is challenging. For the interested reader, I recommend the above papers, along with Antonin Leroux’s thesis. For those who like to learn along with the implementation, I worked with some collaborators to write a verbose implementation following the first SQIsign paper in SageMath. A blog discussing the implementation challenges is available at Learning to SQI, and the code is on GitHub.

The selling point of SQIsign is its compact representation. For NIST level I security (128-bit), a public key requires only 64 bytes and a signature only 177 bytes. Compare this to Dilithium, a lattice-based scheme chosen at the end of round three, which at the same security level has public keys of 1312 bytes and signatures of 2420 bytes! However, the main drawback is that it’s orders of magnitude slower than Dilithium, and the complex, heuristic algorithms in some of the quaternion algebra pieces mean that writing a safe and side-channel resistant implementation is extremely challenging.
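
As a quick sanity check on these figures, the arithmetic can be done in a few lines of Python. The numbers are exactly those quoted above; the variable names are just for illustration.

```python
# Sizes in bytes at NIST level I, as quoted above.
sqisign = {"public_key": 64, "signature": 177}
dilithium = {"public_key": 1312, "signature": 2420}

sqisign_total = sum(sqisign.values())        # 241 bytes
dilithium_total = sum(dilithium.values())    # 3732 bytes
ratio = dilithium_total / sqisign_total

print(f"SQIsign:   {sqisign_total} bytes")
print(f"Dilithium: {dilithium_total} bytes")
print(f"ratio:     {ratio:.1f}x")
```

Taken together, a public key and signature are roughly fifteen times smaller for SQIsign.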

At SIAM, progress in closing the efficiency gap was the subject of several talks, and optimisations are being found in a variety of ways. Lorenz Panny discussed the Deuring correspondence in a more general setting, where he showed that with some clever algorithmic tricks, isogenies could be computed in reasonable time by using extension fields to gather enough data for the Deuring correspondence to be feasible, even for inefficient parameter sets.

On the flip side of this, Michael Meyer discussed recent advances in parameter searching for SQIsign, which makes the work that Lorenz described particularly efficient. One of the main bottlenecks in SQIsign is computing isogenies of large prime degree. This occurs because SQIsign requires a characteristic p such that both p+1 and p-1 have many small factors, and for large p it’s tough to ensure all these factors stay as small as possible. Michael discussed several different tricks which can be used to find twin smooth numbers and how different techniques are beneficial depending on the bit-length of p. The upshot is that the culmination of all these ideas has allowed the SQIsign team to find valid parameter sets targeting all three NIST security levels.
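
To get a feel for the search problem, the sketch below brute-forces tiny examples in Python. It is a simplification and not one of the methods described in the talk: it looks for consecutive integers r and r + 1 which are both smooth, and keeps those for which p = 2r + 1 is prime, so that p - 1 = 2r and p + 1 = 2(r + 1) only have small prime factors. The function names and bounds are illustrative, and real parameter searches have further requirements which are ignored here.

```python
def largest_prime_factor(n):
    """Largest prime factor of n by trial division (returns 1 for n = 1)."""
    largest, d = 1, 2
    while d * d <= n:
        while n % d == 0:
            largest, n = d, n // d
        d += 1
    return max(largest, n) if n > 1 else largest


def is_prime(n):
    return n > 1 and largest_prime_factor(n) == n


def twin_smooth_primes(limit, bound):
    """Pairs (r, p) with r < limit, r and r + 1 bound-smooth, p = 2r + 1 prime."""
    found = []
    for r in range(1, limit):
        p = 2 * r + 1
        if (largest_prime_factor(r) <= bound
                and largest_prime_factor(r + 1) <= bound
                and is_prime(p)):
            found.append((r, p))
    return found


found = twin_smooth_primes(limit=3000, bound=7)
print(found)

# One example: r = 2400 = 2^5 * 3 * 5^2 and r + 1 = 2401 = 7^4 give p = 4801.
assert (2400, 4801) in found
assert largest_prime_factor(4801**2 - 1) == 7
```

Exhaustive search like this stops being an option long before cryptographic sizes, which is why cleverer techniques for finding twin smooth numbers are needed.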

Antonin Leroux talked more specifically about the Deuring correspondence as used in the context of SQIsign and focused on the improvements between the 2020 and 2022 SQIsign papers. The takeaway was that several improvements have resulted in performance enhancements to allow up to NIST-V parameter sets, but the protocol was a long way off competing with the lattice protocols which had already been picked. Optimistically, we can always work hard to find faster ways to do mathematics, and the compact keys and signatures of SQIsign make it extremely attractive for certain use cases.

To finish the summary, we can come back to Benjamin Wesolowski’s talk, which described recent research which adopts the progress in higher dimensions and modifies the SQIsign protocol, removing many heuristic and complicated steps during keygen and signing and shifting the protocol’s complexity into verification.

The main selling point of SQIsignHD is that it is not only simpler to implement in many ways, but the security proofs become much more straightforward, which should go a long way to show that the protocol is robust. However, unlike the original SQIsign, SQIsignHD verification requires the computation of a four dimensional isogeny. These isogenies are theoretically described, but a full implementation of these is still a work in progress. Understanding precisely how the verification time is affected is key to understanding whether the HD-remake of SQIsign could either replace or exist alongside the original description.

Acknowledgements

Many thanks to Aleksander Kircanski for reading an earlier draft of this blog post, and to all the people I worked with during the week in Eindhoven.